Configure advanced prompts

You can configure advanced prompts either in the AWS Management Console or through the API.

Console

In the console, you configure advanced prompts after you have created the agent, while you are editing the agent.

To view or edit advanced prompts for your agent

- Sign in to the AWS Management Console with an IAM identity that has permissions to use the Amazon Bedrock console. Then open the Amazon Bedrock console at https://console.aws.amazon.com/bedrock.

- In the left navigation pane, choose Agents. Then choose an agent in the Agents section.

- On the agent details page, in the Working draft section, select Working draft.

-

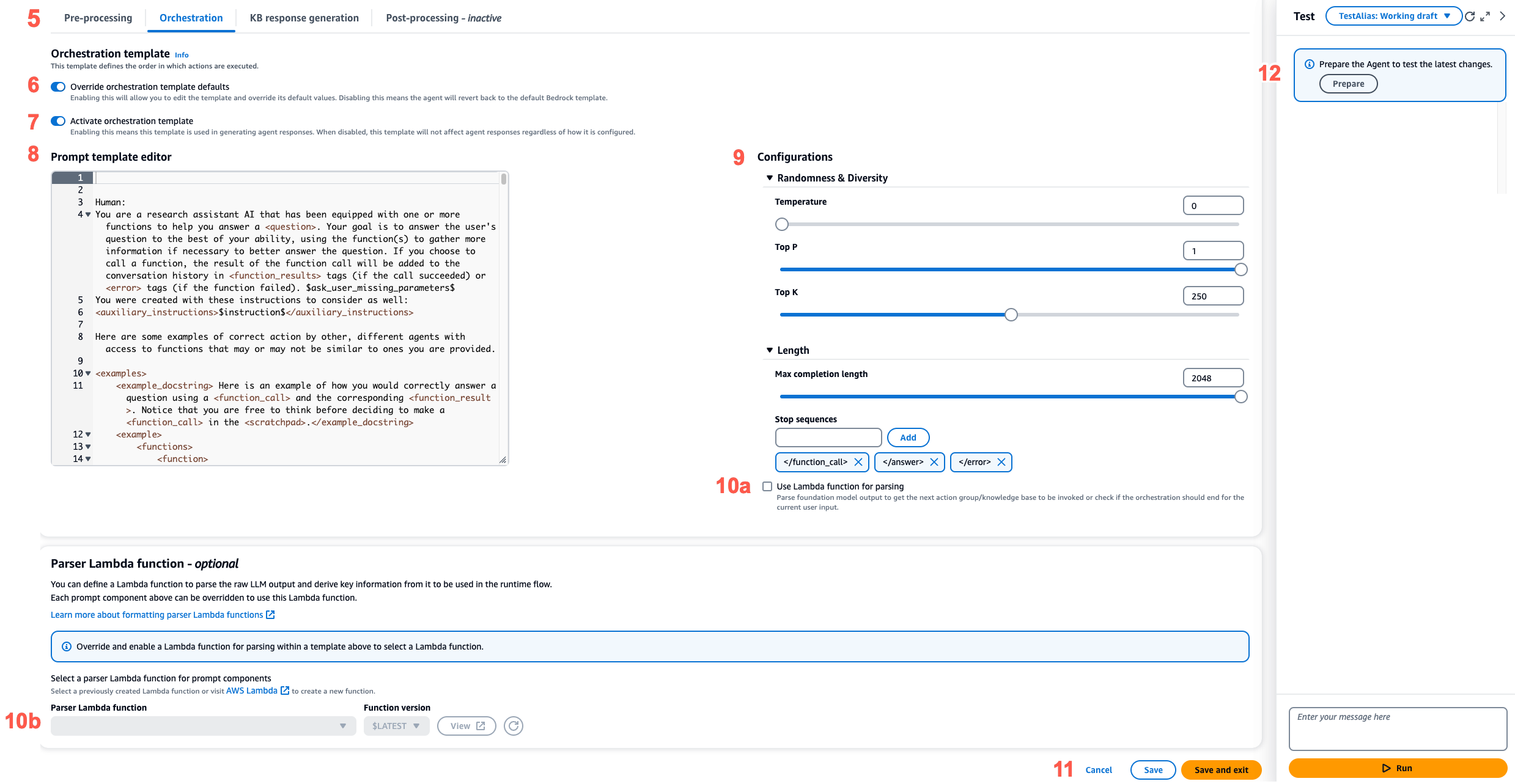

On the Working draft page, in the Orchestration strategy section, choose Edit.

- On the Orchestration strategy page, in the Orchestration strategy details section, make sure that Default orchestration is selected. Then choose the tab for the step of the agent sequence that you want to edit.

- To enable editing of the template, turn on Override template defaults. In the Override template defaults dialog box, choose Confirm.

  Warning

  If you turn off Override template defaults or change the model, Amazon Bedrock uses the default template and immediately deletes your template. To proceed, enter "confirm" in the text box of the message that appears.

- To allow the agent to use the template when generating responses, turn on Activate template. If this configuration is turned off, the agent doesn't use the template.

- To modify the example prompt template, use the Prompt template editor.

- In Configurations, you can modify the inference parameters for the prompt. For parameter definitions and for details about the parameters that different models support, see Inference request parameters and response fields for foundation models.

- (Optional) To use a Lambda function that you have defined to parse the raw foundation model output, perform the following actions:

  Note

  One Lambda function is used for all the prompt templates.

  - In the Configurations section, select Use Lambda function for parsing. If you clear this setting, your agent uses the default parser for the prompt.

  - For Parser Lambda function, select a Lambda function from the dropdown menu.

    Note

    You must attach a resource-based policy to the Lambda function so that your agent can invoke it. For more information, see Resource-based policy to allow Amazon Bedrock to invoke an action group Lambda function.


- To save your settings, choose one of the following options:

  - To remain in the same window so that you can dynamically update the prompt settings while testing your updated agent, choose Save.

  - To save your settings and return to the Working draft page, choose Save and exit.


- To test the updated settings, choose Prepare in the Test window.

API

To configure advanced prompts by using the API, send an UpdateAgent request and modify the following `promptOverrideConfiguration` object:

```
"promptOverrideConfiguration": {
    "overrideLambda": "string",
    "promptConfigurations": [
        {
            "basePromptTemplate": "string",
            "inferenceConfiguration": {
                "maximumLength": int,
                "stopSequences": [ "string" ],
                "temperature": float,
                "topK": int,
                "topP": float
            },
            "parserMode": "DEFAULT | OVERRIDDEN",
            "promptCreationMode": "DEFAULT | OVERRIDDEN",
            "promptState": "ENABLED | DISABLED",
            "promptType": "PRE_PROCESSING | ORCHESTRATION | KNOWLEDGE_BASE_RESPONSE_GENERATION | POST_PROCESSING | MEMORY_SUMMARIZATION"
        }
    ],
    "promptCachingState": {
        "cachingState": "ENABLED | DISABLED"
    }
}
```
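The following Python sketch shows how the fields in this object fit together by assembling a `promptOverrideConfiguration` for a single orchestration template. Several names are hypothetical placeholders and not part of the Amazon Bedrock API: the helper names `build_prompt_configuration` and `build_prompt_override_configuration`, the template text, the inference values, and the Lambda ARN. The sketch only produces a plain dictionary and does not call AWS. Two behaviors are this sketch's own assumptions. First, it omits `promptState` unless you pass `enabled`. Second, it refuses a parser override that has no `overrideLambda` ARN.

```python
import json

# Allowed promptType values, copied from the schema above.
PROMPT_TYPES = {
    "PRE_PROCESSING",
    "ORCHESTRATION",
    "KNOWLEDGE_BASE_RESPONSE_GENERATION",
    "POST_PROCESSING",
    "MEMORY_SUMMARIZATION",
}


def build_prompt_configuration(prompt_type, template=None, inference=None,
                               enabled=None, use_parser_lambda=False):
    """Build one promptConfiguration entry (hypothetical helper, not an AWS API)."""
    if prompt_type not in PROMPT_TYPES:
        raise ValueError(f"unknown promptType: {prompt_type}")
    config = {
        "promptType": prompt_type,
        # A custom template is used only when promptCreationMode is OVERRIDDEN.
        "promptCreationMode": "OVERRIDDEN" if template is not None else "DEFAULT",
        # OVERRIDDEN sends the raw model output to the overrideLambda parser.
        "parserMode": "OVERRIDDEN" if use_parser_lambda else "DEFAULT",
    }
    if template is not None:
        config["basePromptTemplate"] = template
    if inference is not None:
        config["inferenceConfiguration"] = dict(inference)
    if enabled is not None:
        # Assumption of this sketch: leaving promptState out keeps the step's default.
        config["promptState"] = "ENABLED" if enabled else "DISABLED"
    return config


def build_prompt_override_configuration(prompt_configurations, override_lambda_arn=None):
    """Wrap entries in a promptOverrideConfiguration dict (hypothetical helper)."""
    needs_lambda = any(c["parserMode"] == "OVERRIDDEN" for c in prompt_configurations)
    # This sketch's own check: a parser override needs a Lambda ARN to call.
    if needs_lambda and not override_lambda_arn:
        raise ValueError("parserMode OVERRIDDEN requires an overrideLambda ARN")
    override = {"promptConfigurations": list(prompt_configurations)}
    if override_lambda_arn:
        override["overrideLambda"] = override_lambda_arn
    return override


# Example usage with placeholder values.
orchestration = build_prompt_configuration(
    "ORCHESTRATION",
    template="<your orchestration prompt template>",
    inference={
        "maximumLength": 2048,
        "stopSequences": ["</answer>"],
        "temperature": 0.0,
        "topK": 250,
        "topP": 1.0,
    },
    use_parser_lambda=True,
)
override = build_prompt_override_configuration(
    [orchestration],
    override_lambda_arn="arn:aws:lambda:us-east-1:111122223333:function:MyParser",
)
print(json.dumps(override, indent=2))
```

You could then pass the resulting dictionary as the `promptOverrideConfiguration` argument of an UpdateAgent request, for example through the `update_agent` method of the boto3 `bedrock-agent` client. The request must also include the other parameters that UpdateAgent requires.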

- In the `promptConfigurations` list, include a `promptConfiguration` object for each prompt template that you want to edit.

- Specify the prompt to modify in the `promptType` field.

- Modify the prompt template through the following steps:

  - Specify your prompt template in the `basePromptTemplate` field.

  - Include inference parameters in the `inferenceConfiguration` objects. For more information about inference configurations, see Inference request parameters and response fields for foundation models.

- To enable the prompt template, set `promptCreationMode` to `OVERRIDDEN`.

- To allow or prevent the agent from performing the step in the `promptType` field, modify the `promptState` value. This setting can be useful for troubleshooting the agent's behavior.

  - If you set `promptState` to `DISABLED` for the `PRE_PROCESSING`, `KNOWLEDGE_BASE_RESPONSE_GENERATION`, or `POST_PROCESSING` step, the agent skips that step.

  - If you set `promptState` to `DISABLED` for the `ORCHESTRATION` step, the agent sends only the user input to the foundation model in orchestration. It then returns the response as is, without orchestrating calls to API operations and knowledge bases.

  - By default, the `POST_PROCESSING` step is `DISABLED`, and the `PRE_PROCESSING`, `ORCHESTRATION`, and `KNOWLEDGE_BASE_RESPONSE_GENERATION` steps are `ENABLED`.

  - By default, the `MEMORY_SUMMARIZATION` step is `ENABLED` if Memory is enabled and `DISABLED` if Memory is disabled.

- To use a Lambda function that you have defined to parse the raw foundation model output, perform the following steps:

  - For each prompt template that you want to enable the Lambda function for, set `parserMode` to `OVERRIDDEN`.

  - Specify the Amazon Resource Name (ARN) of the Lambda function in the `overrideLambda` field of the `promptOverrideConfiguration` object.

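The following Python sketch shows how the default `promptState` values described in this section combine with explicit overrides. It computes the effective state of each step and summarizes what the agent does for the disabled ones. The function names are hypothetical and exist only to illustrate these rules. So are the partial `promptConfiguration` entries in the example, which contain only `promptType` and `promptState`. The sketch neither calls AWS nor checks that a request is complete.

```python
def default_prompt_states(memory_enabled):
    """Default promptState per step, as described in this section."""
    return {
        "PRE_PROCESSING": "ENABLED",
        "ORCHESTRATION": "ENABLED",
        "KNOWLEDGE_BASE_RESPONSE_GENERATION": "ENABLED",
        "POST_PROCESSING": "DISABLED",
        "MEMORY_SUMMARIZATION": "ENABLED" if memory_enabled else "DISABLED",
    }


def effective_prompt_states(prompt_configurations, memory_enabled):
    """Apply explicit promptState values on top of the defaults (hypothetical helper)."""
    states = default_prompt_states(memory_enabled)
    for config in prompt_configurations:
        if "promptState" in config:
            states[config["promptType"]] = config["promptState"]
    return states


def describe_disabled_steps(states):
    """Summarize the documented behavior for each disabled step."""
    notes = []
    for step in ("PRE_PROCESSING", "KNOWLEDGE_BASE_RESPONSE_GENERATION", "POST_PROCESSING"):
        if states[step] == "DISABLED":
            notes.append(f"{step}: skipped")
    if states["ORCHESTRATION"] == "DISABLED":
        notes.append("ORCHESTRATION: user input only, response returned as is")
    return notes


# Example: enable post-processing and disable pre-processing for an agent without Memory.
configs = [
    {"promptType": "POST_PROCESSING", "promptState": "ENABLED"},
    {"promptType": "PRE_PROCESSING", "promptState": "DISABLED"},
]
states = effective_prompt_states(configs, memory_enabled=False)
for note in describe_disabled_steps(states):
    print(note)
```

Keeping the defaults in one function makes it easy to see which steps an override actually changes before you send the UpdateAgent request.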