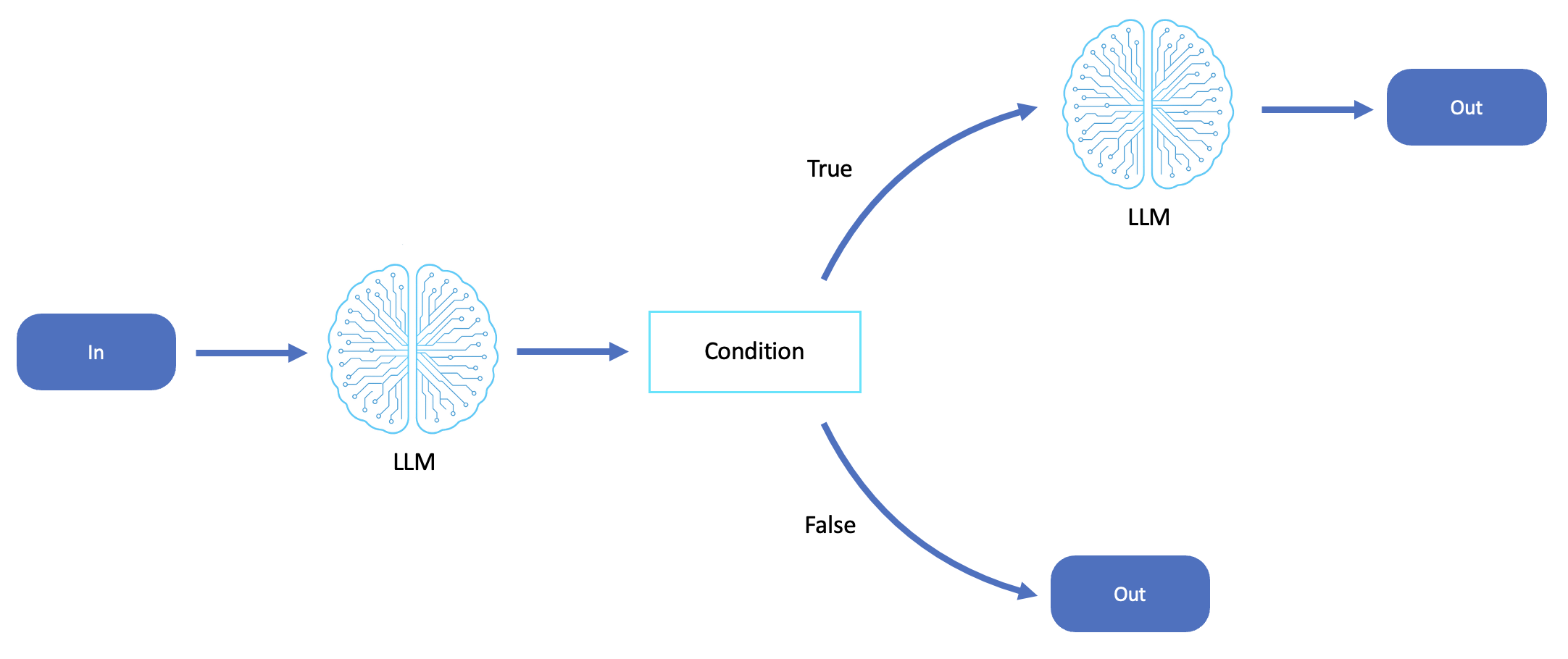

Workflow for prompt chaining

Prompt chaining decomposes complex tasks into a sequence of steps, where each step is a discrete LLM invocation that processes or builds upon the output of the previous one.
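Below is a minimal sketch of the idea. It assumes a hypothetical `LLM` type, meaning any function that takes a prompt string and returns the model's text, rather than a specific SDK. `run_chain` and the step templates are illustrative names and are not part of any AWS service. Each template embeds the previous step's output through an `{input}` placeholder. The function also keeps every intermediate output so that each stage can be inspected.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface (assumption): takes a prompt, returns completion text.
LLM = Callable[[str], str]


def run_chain(steps: List[str], initial_input: str, llm: LLM) -> Tuple[str, List[str]]:
    """Run prompt templates in order, feeding each step's output into the next.

    Each template must contain an {input} placeholder. Returns the final output
    plus every intermediate output so the stages can be inspected or audited.
    """
    current = initial_input
    trace: List[str] = []
    for template in steps:
        prompt = template.format(input=current)  # embed the previous output in the next prompt
        current = llm(prompt)                    # one discrete LLM invocation per step
        trace.append(current)
    return current, trace


if __name__ == "__main__":
    # Stand-in model for local experimentation; a real client would replace it.
    def fake_llm(prompt: str) -> str:
        return f"<{len(prompt)} chars processed>"

    steps = [
        "Extract the key claims from this text:\n{input}",
        "Organize these claims into an outline:\n{input}",
        "Write a short paragraph from this outline:\n{input}",
    ]
    final, trace = run_chain(steps, "Quarterly report text...", fake_llm)
    print(final)
    print(len(trace), "intermediate results recorded")
```

In practice, `fake_llm` would be replaced by a thin wrapper around your model provider's SDK. That wrapper is an assumption and is not shown here.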

The prompt chaining workflow is suited for scenarios where tasks can be logically divided into sequential reasoning steps, and where intermediate outputs inform the next stage. It excels in workflows that require structured thinking, progressive transformation, or layered analysis, such as document review, code generation, knowledge extraction, and content refinement.

Description

Use prompt chaining when:

- The complexity of the task exceeds the context window or reasoning depth of a single LLM call.
- Outputs from one step (for example, analysis, summarization, or planning) become inputs for a follow-up decision or generation phase.
- You need transparency and control across reasoning stages (for example, auditable intermediate results).
- You want to plug in external validation, filtering, or enrichment logic between steps.

Prompt chaining is ideal for agents operating in pipeline-style reasoning loops, such as research agents, editorial assistants, planning systems, and multistage copilots.
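Validation and filtering between steps can be sketched as a plain-code gate between two LLM calls. This sketch assumes a hypothetical `LLM` callable and assumes that step 1 is prompted to return a JSON array of strings. `extract_facts`, `answer_question`, `research_chain`, and `ChainValidationError` are illustrative names. If the intermediate output does not parse or has the wrong shape, the chain stops instead of passing bad data to the next prompt.

```python
import json
from typing import Callable, List

# Hypothetical model interface (assumption): prompt in, text out.
LLM = Callable[[str], str]


class ChainValidationError(Exception):
    """Raised when an intermediate result fails a between-step check."""


def extract_facts(document: str, llm: LLM) -> List[str]:
    # Step 1: ask the model for facts as a JSON array of strings.
    raw = llm(f"Return the key facts in this text as a JSON array of strings:\n{document}")
    # Validation gate: step 2 runs only if the output parses and has the expected shape.
    try:
        facts = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ChainValidationError(f"step 1 returned invalid JSON: {exc}") from exc
    if not isinstance(facts, list) or not all(isinstance(f, str) for f in facts):
        raise ChainValidationError("step 1 did not return a list of strings")
    # Filtering: drop empty and duplicate facts before they reach the next prompt.
    seen = set()
    cleaned: List[str] = []
    for fact in (f.strip() for f in facts):
        if fact and fact not in seen:
            seen.add(fact)
            cleaned.append(fact)
    if not cleaned:
        raise ChainValidationError("step 1 produced no usable facts")
    return cleaned


def answer_question(question: str, facts: List[str], llm: LLM) -> str:
    # Step 2: the validated, filtered facts are embedded into the follow-up prompt.
    bullet_list = "\n".join(f"- {fact}" for fact in facts)
    return llm(f"Using only these facts:\n{bullet_list}\n\nAnswer: {question}")


def research_chain(document: str, question: str, llm: LLM) -> str:
    facts = extract_facts(document, llm)
    return answer_question(question, facts, llm)
```

Raising an exception is only one design choice. A pipeline could instead retry step 1 with the error message appended to the prompt, or route the failure to a human reviewer.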

Capabilities

- Linear or branching chains of LLM calls
- Intermediate results passed as structured input or embedded into follow-up prompts
- Can be orchestrated with AWS Step Functions, AWS Lambda, or agent-specific runners
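The next sketch shows branching and structured intermediate results together. It assumes a hypothetical `LLM` callable. The first call is asked to reply with JSON. The reply is parsed into a small dataclass, and its `category` field selects which follow-up prompt runs next. The ticket-triage scenario, the `Triage` class, and the `BRANCHES` table are illustrative assumptions. In AWS Step Functions, a comparable decision could be expressed as a Choice state, with each LLM call in its own task.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical model interface (assumption): prompt in, text out.
LLM = Callable[[str], str]


@dataclass
class Triage:
    """Structured intermediate result passed from step 1 to step 2."""
    category: str
    summary: str


def triage(ticket: str, llm: LLM) -> Triage:
    # Step 1: request a structured reply so the next step can read fields, not free text.
    raw = llm(
        'Classify this ticket as "bug" or "question" and summarize it. '
        'Reply as JSON with keys "category" and "summary".\n' + ticket
    )
    data = json.loads(raw)
    return Triage(category=data["category"], summary=data["summary"])


# Follow-up prompt for each branch; the structured fields fill the template.
BRANCHES: Dict[str, str] = {
    "bug": "Draft reproduction steps for this bug report: {summary}",
    "question": "Draft a helpful answer to this user question: {summary}",
}


def handle_ticket(ticket: str, llm: LLM) -> str:
    result = triage(ticket, llm)
    # Branch: choose the next prompt from the step-1 output; fall back to a generic reply.
    template = BRANCHES.get(result.category, "Write a short acknowledgement for: {summary}")
    return llm(template.format(summary=result.summary))
```

Keeping the branch table in ordinary code, rather than asking the model to pick the next prompt, keeps the control flow predictable and easy to audit.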

Common use cases

- Multistep reasoning tasks (for example, "summarize → critique → rewrite")
- Research assistants synthesizing layered outputs (for example, "search → extract facts → answer question")
- Code generation pipelines (for example, "generate plan → write code → test code → explain output")
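A code generation chain can mix LLM calls with deterministic steps. This sketch assumes a hypothetical `LLM` callable and uses illustrative function names. Its "test code" stage is ordinary Python that only checks whether the generated code compiles, using the built-in `compile`, and does not execute it. Running generated code for real would call for an isolated environment, which this sketch does not attempt.

```python
from typing import Callable, Dict

# Hypothetical model interface (assumption): prompt in, text out.
LLM = Callable[[str], str]


def check_syntax(code: str) -> str:
    """Non-LLM 'test' step: report whether the generated Python code compiles.

    This only checks syntax; the code is never executed.
    """
    try:
        compile(code, "<generated>", "exec")
    except SyntaxError as exc:
        return f"FAILED: {exc.msg} (line {exc.lineno})"
    return "PASSED: code compiles"


def codegen_chain(task: str, llm: LLM) -> Dict[str, str]:
    # Each stage's output is stored under its own key, so every intermediate result stays auditable.
    results: Dict[str, str] = {}
    results["plan"] = llm(f"Write a step-by-step plan for this task:\n{task}")
    results["code"] = llm(f"Write Python code that follows this plan:\n{results['plan']}")
    results["test"] = check_syntax(results["code"])  # deterministic step between LLM calls
    results["explanation"] = llm(
        "Explain this code and its test result.\n"
        f"Code:\n{results['code']}\nTest result: {results['test']}"
    )
    return results
```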