Starting a conversation stream to an Amazon Lex V2 bot

You use the StartConversation operation to start a stream between the user and the Amazon Lex V2 bot in your application. The POST request from the application establishes a connection between your application and the Amazon Lex V2 bot. This enables your application and the bot to start exchanging information with each other through events.

Note

When using StartConversation, if the utterance audio duration exceeds the configured value for max-length-ms, Amazon Lex V2 cuts off the audio at the specified duration.

The StartConversation operation is supported only in the following SDKs:

The first event your application must send to the Amazon Lex V2 bot is a ConfigurationEvent. This event includes information such as the response type format. The following are the parameters that you can use in a configuration event:

- responseContentType – Determines whether the bot responds to user input with text or speech.
- sessionState – Information relating to the streaming session with the bot, such as a predetermined intent or dialog state.
- welcomeMessages – Specifies the welcome messages that play for the user at the beginning of their conversation with a bot. These messages play before the user provides any input. To activate a welcome message, you must also specify values for the sessionState and dialogAction parameters.
- disablePlayback – Determines whether the bot should wait for a cue from the client before it starts listening for caller input. By default, playback is activated, so the value of this field is false.
- requestAttributes – Provides additional information for the request.

For information about how to specify values for the preceding parameters, see the ConfigurationEvent data type of the StartConversation operation.

Each stream between a bot and your application can have only one configuration event. After your application has sent the configuration event, the bot can accept additional events from your application.
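The two ordering rules above (the configuration event comes first, and there is only one per stream) can be sketched as a small client-side guard. This is a minimal sketch, not an AWS SDK API: events are modeled as plain Python dictionaries, `ConversationStreamSketch` and `build_configuration_event` are hypothetical names, and the welcome message shape (`content`, `contentType`) and the `ElicitIntent` dialog action type are assumptions to check against the ConfigurationEvent data type.

```python
from typing import Any, Dict, List, Optional


class ConversationStreamSketch:
    """Hypothetical wrapper that enforces the configuration-event rules:
    the first event must be a ConfigurationEvent, and only one is allowed."""

    def __init__(self) -> None:
        self.sent: List[Dict[str, Any]] = []
        self._configured = False

    def send(self, event: Dict[str, Any]) -> None:
        is_config = event.get("type") == "ConfigurationEvent"
        if not self._configured and not is_config:
            raise RuntimeError("the first event must be a ConfigurationEvent")
        if self._configured and is_config:
            raise RuntimeError("a stream can have only one ConfigurationEvent")
        self._configured = self._configured or is_config
        # A real client would encode the event and write it to the stream here.
        self.sent.append(event)


def build_configuration_event(
    response_content_type: str,
    welcome_text: Optional[str] = None,
    disable_playback: bool = False,
    request_attributes: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Build a ConfigurationEvent-shaped dict from the parameters listed above."""
    event: Dict[str, Any] = {
        "type": "ConfigurationEvent",
        "responseContentType": response_content_type,
        "disablePlayback": disable_playback,  # False by default: playback is on
        "requestAttributes": request_attributes or {},
    }
    if welcome_text is not None:
        # A welcome message also needs sessionState with a dialogAction.
        # Assumption: the message shape and "ElicitIntent" type shown here.
        event["welcomeMessages"] = [{"content": welcome_text, "contentType": "PlainText"}]
        event["sessionState"] = {"dialogAction": {"type": "ElicitIntent"}}
    return event
```

In this sketch, sending a text or audio event before the configuration event, or sending a second configuration event, raises `RuntimeError` on the client instead of waiting for the service to reject the stream.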

If you've specified that your user is using audio to communicate with the Amazon Lex V2 bot, your application can send the following events to the bot during that conversation:

- AudioInputEvent – Contains an audio chunk with a maximum size of 320 bytes. Your application must use multiple audio input events to send a user's message to the bot. Every audio input event in the stream must have the same audio format.
- DTMFInputEvent – Sends a DTMF input to the bot. Each DTMF key press corresponds to a single event.
- PlaybackCompletionEvent – Informs the server that the response to the user's input has been played back to the user. You must use a playback completion event if you're sending an audio response to the user. If disablePlayback in your configuration event is true, you can't use this feature.
- DisconnectionEvent – Informs the bot that the user has disconnected from the conversation.
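The audio input rules above (chunks of at most 320 bytes, one shared audio format, one DTMF event per key press) can be sketched as two generators. This is a hypothetical illustration that builds plain dictionaries rather than calling an SDK; the field names `audioChunk`, `contentType`, and `inputCharacter` are assumptions to check against the AudioInputEvent and DTMFInputEvent data types.

```python
import uuid
from typing import Any, Dict, Iterator

MAX_AUDIO_CHUNK_BYTES = 320  # maximum AudioInputEvent chunk size stated above


def audio_input_events(audio: bytes, content_type: str) -> Iterator[Dict[str, Any]]:
    """Split one utterance into AudioInputEvent-shaped dicts of at most 320 bytes.

    Every event reuses the same content_type, because all audio input events
    in a stream must share one audio format.
    """
    for start in range(0, len(audio), MAX_AUDIO_CHUNK_BYTES):
        yield {
            "type": "AudioInputEvent",
            "eventId": str(uuid.uuid4()),  # unique ID per event for troubleshooting
            "contentType": content_type,
            "audioChunk": audio[start:start + MAX_AUDIO_CHUNK_BYTES],
        }


def dtmf_input_events(keys: str) -> Iterator[Dict[str, Any]]:
    """Emit one DTMFInputEvent-shaped dict per key press."""
    for key in keys:
        yield {
            "type": "DTMFInputEvent",
            "eventId": str(uuid.uuid4()),
            "inputCharacter": key,  # assumed field name
        }
```

For scale, assuming 16 kHz, 16-bit mono PCM (32,000 bytes per second), a 320-byte chunk holds 160 samples, or 10 ms of audio, so one second of speech takes 100 audio input events.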

If you've specified that the user is using text to communicate with the bot, your application can send the following events to the bot during that conversation:

- TextInputEvent – Text that is sent from your application to the bot. You can have up to 512 characters in a text input event.
- PlaybackCompletionEvent – Informs the server that the response to the user's input has been played back to the user. You must use this event if you're playing audio back to the user. If disablePlayback in your configuration event is true, you can't use this feature.
- DisconnectionEvent – Informs the bot that the user has disconnected from the conversation.
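A client can check the 512-character limit before it sends a text input event. The following is a minimal sketch with a hypothetical `text_input_event` helper that returns a plain dictionary; it assumes that Python's code-point count matches how the service counts characters, which is worth verifying for text that contains emoji or combining marks.

```python
import uuid
from typing import Any, Dict

MAX_TEXT_INPUT_CHARS = 512  # limit stated above for a single TextInputEvent


def text_input_event(text: str) -> Dict[str, Any]:
    """Build a TextInputEvent-shaped dict, rejecting text over the limit."""
    if len(text) > MAX_TEXT_INPUT_CHARS:
        raise ValueError(
            f"text input is {len(text)} characters; the limit is {MAX_TEXT_INPUT_CHARS}"
        )
    return {"type": "TextInputEvent", "eventId": str(uuid.uuid4()), "text": text}
```

Rejecting oversized input, rather than silently splitting it across several events, keeps each event a single user turn.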

You must encode every event that you send to an Amazon Lex V2 bot in the correct format. For more information, see Event stream encoding.

Every event has an event ID. To help troubleshoot any issues that might occur in the stream, assign a unique event ID to each input event. You can then troubleshoot any processing failures with the bot.

Amazon Lex V2 also uses timestamps for each event. You can use these timestamps in addition to the event ID to help troubleshoot any network transmission issues.
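One way to act on the advice about event IDs and timestamps is to stamp and log every outgoing event on the client. This hypothetical `EventLog` class is a sketch only: the `clientTimestampMillis` field name is an assumption to check against the input event data types, and the log lives in memory for illustration.

```python
import time
import uuid
from typing import Any, Dict, List, Optional


class EventLog:
    """Hypothetical client-side log that gives each outgoing event a unique
    eventId and a client timestamp in milliseconds, so a failure reported for
    an event can be traced back to what was sent and when."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    def stamp(self, event: Dict[str, Any]) -> Dict[str, Any]:
        stamped = dict(event)  # copy so the caller's dict is not mutated
        stamped["eventId"] = str(uuid.uuid4())
        stamped["clientTimestampMillis"] = int(time.time() * 1000)
        self._entries.append(stamped)
        return stamped

    def find(self, event_id: str) -> Optional[Dict[str, Any]]:
        """Return the logged event with this ID, or None if it was never sent."""
        return next((e for e in self._entries if e["eventId"] == event_id), None)
```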

During the conversation between the user and the Amazon Lex V2 bot, the bot can send the following outbound events in response to the user:

- IntentResultEvent – Contains the intent that Amazon Lex V2 determined from the user utterance. Each intent result event includes:
  - inputMode – The type of user utterance. Valid values are Speech, DTMF, or Text.
  - interpretations – Interpretations that Amazon Lex V2 determines from the user utterance.
  - requestAttributes – If you haven't modified the request attributes by using a Lambda function, these are the same attributes that were passed at the start of the conversation.
  - sessionId – The session identifier used for the conversation.
  - sessionState – The state of the user's session with Amazon Lex V2.
- TranscriptEvent – If the user provides an input to your application, this event contains the transcript of the user's utterance to the bot. Your application does not receive a TranscriptEvent if there's no user input. The value of the transcript event sent to your application depends on whether you've specified audio (speech and DTMF) or text as a conversation mode:
  - Transcript of speech input – If the user is speaking with the bot, the transcript event is the transcription of the user's audio. It's a transcript of all the speech from the time the user begins speaking to the time they stop speaking.
  - Transcript of DTMF input – If the user is typing on a keypad, the transcript event contains all the digits the user pressed in their input.
  - Transcript of text input – If the user is providing text input, the transcript event contains all of the text in the user's input.
- TextResponseEvent – Contains the bot response in text format. A text response is returned by default. If you've configured Amazon Lex V2 to return an audio response, this text is used to generate an audio response. Each text response event contains an array of message objects that the bot returns to the user.
- AudioResponseEvent – Contains the audio response synthesized from the text generated in the TextResponseEvent. To receive audio response events, you must configure Amazon Lex V2 to provide an audio response. All audio response events have the same audio format. Each event contains an audio chunk of no more than 100 bytes. Amazon Lex V2 sends an empty audio chunk with the bytes field set to null to indicate the end of the audio response to your application.
- PlaybackInterruptionEvent – When a user interrupts a response that the bot has sent to your application, Amazon Lex V2 triggers this event to stop the playback of the response.
- HeartbeatEvent – Amazon Lex V2 periodically sends this event to keep the connection between your application and the bot from timing out.
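The outbound events above can be consumed with a single dispatch loop per bot turn. This is a hypothetical sketch over plain dictionaries: it assumes each event carries a `type` key, that transcript text sits under `transcript` and text messages under `messages`, and, following the description above, that an AudioResponseEvent chunk sits under `bytes` with `None` marking the end of the audio response. Field names in a real SDK may differ.

```python
from typing import Any, Dict, Iterable, List


def collect_bot_turn(events: Iterable[Dict[str, Any]]) -> Dict[str, Any]:
    """Gather one bot turn from outbound events modeled as dicts with a "type" key."""
    turn: Dict[str, Any] = {
        "transcript": None,
        "inputMode": None,
        "interpretations": [],
        "messages": [],
        "audio": b"",
        "interrupted": False,
    }
    audio_parts: List[bytes] = []
    for event in events:
        kind = event["type"]
        if kind == "HeartbeatEvent":
            continue  # keep-alive only; carries nothing to process
        if kind == "TranscriptEvent":
            turn["transcript"] = event["transcript"]
        elif kind == "IntentResultEvent":
            turn["inputMode"] = event["inputMode"]  # Speech, DTMF, or Text
            turn["interpretations"] = event["interpretations"]
        elif kind == "TextResponseEvent":
            turn["messages"].extend(event["messages"])
        elif kind == "AudioResponseEvent":
            if event["bytes"] is None:
                break  # empty chunk: the audio response is complete
            audio_parts.append(event["bytes"])
        elif kind == "PlaybackInterruptionEvent":
            turn["interrupted"] = True  # the user barged in; stop playback
            break
    turn["audio"] = b"".join(audio_parts)
    return turn
```

Skipping heartbeat events inside the loop lets the same consumer run whether or not a heartbeat arrives mid-response.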

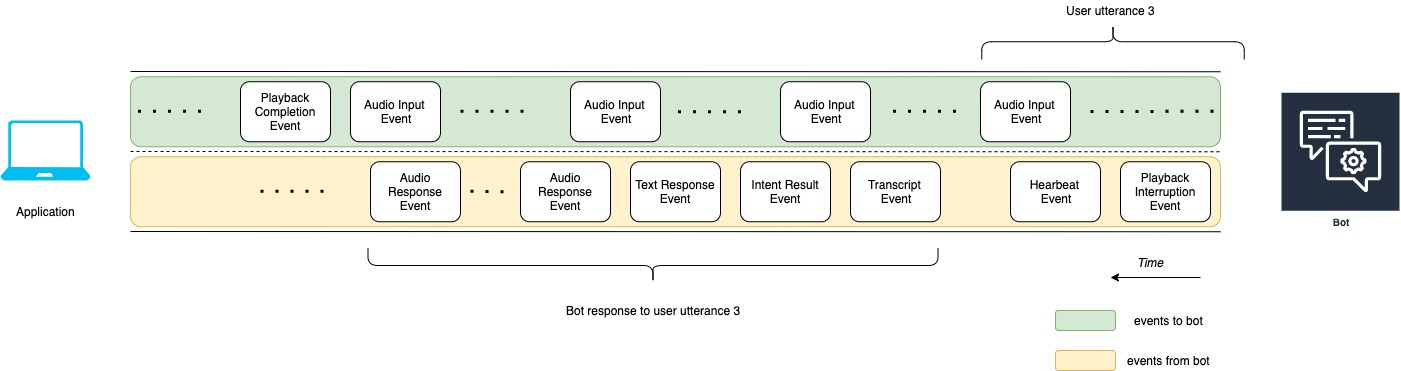

Time sequence of events for an audio conversation when using an Amazon Lex V2 bot

The following diagrams show a streaming audio conversation between a user and an Amazon Lex V2 bot. The application continuously streams audio to the bot, and the bot looks for user input from the audio. In this example, both the user and the bot are using speech to communicate. Each diagram corresponds to a user utterance and the response of the bot to that utterance.

The following diagram shows the beginning of a conversation between the application and the bot. The stream begins at time zero (t0).

The following list describes the events of the preceding diagram.

- t0: The application sends a configuration event to the bot to start the stream.
- t1: The application streams audio data. This data is broken into a series of input events from the application.
- t2: For user utterance 1, the bot detects an audio input event when the user begins speaking.
- t2: While the user is speaking, the bot sends a heartbeat event to maintain the connection. It sends these events intermittently to make sure the connection doesn't time out.
- t3: The bot detects the end of the user's utterance.
- t4: The bot sends back a transcript event that contains a transcript of the user's speech to the application. This is the beginning of Bot response to user utterance 1.
- t5: The bot sends an intent result event to indicate the action that the user wants to perform.
- t6: The bot begins providing its response as text in a text response event.
- t7: The bot sends a series of audio response events to the application to play for the user.
- t8: The bot sends another heartbeat event to intermittently maintain the connection.
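The order of the bot's events from t4 to t7 can be written as a check over the event types an application receives. This hypothetical helper treats the diagram as one example sequence, not a guarantee from the service: it ignores heartbeat events, which the diagram shows at both t2 and t8, and it collapses the run of audio response events at t7 into a single step.

```python
from typing import List

# Order of the bot's events for Bot response to user utterance 1 (t4 to t7).
EXPECTED_RESPONSE_ORDER = [
    "TranscriptEvent",
    "IntentResultEvent",
    "TextResponseEvent",
    "AudioResponseEvent",
]


def matches_diagram_order(event_types: List[str]) -> bool:
    """Return True if the non-heartbeat events follow the diagram's order."""
    steps: List[str] = []
    for kind in event_types:
        if kind == "HeartbeatEvent":
            continue  # heartbeats can arrive at any point in the stream
        if steps and steps[-1] == kind:
            continue  # a run of the same event type counts as one step
        steps.append(kind)
    return steps == EXPECTED_RESPONSE_ORDER
```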

The following diagram is a continuation of the previous diagram. It shows the application sending a playback completion event to the bot to indicate that it has stopped playing the audio response for the user. The application plays back Bot response to user utterance 1 to the user. The user responds to Bot response to user utterance 1 with User utterance 2.

The following list describes the events of the preceding diagram:

- t10: The application sends a playback completion event to indicate that it has finished playing the bot's message to the user.
- t11: The application sends the user response back to the bot as User utterance 2.
- t12: For Bot response to user utterance 2, the bot waits for the user to stop speaking and then begins to provide an audio response.
- t13: While the bot sends Bot response to user utterance 2 to the application, the bot detects the start of User utterance 3. The bot stops Bot response to user utterance 2 and sends a playback interruption event.
- t14: The bot sends a playback interruption event to the application to signal that the user has interrupted the prompt.
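The application side of t10 and t13 to t14 can be sketched as a small playback state holder. `PlaybackController` and its `send_event` callback are hypothetical stand-ins for whatever writes events to the stream. The controller sends no completion events when disablePlayback is true, as described earlier. Skipping the completion event for a prompt that the bot has already interrupted is a design assumption in this sketch, not documented service behavior.

```python
from typing import Any, Callable, Dict


class PlaybackController:
    """Hypothetical client-side playback state for the bot's audio prompts."""

    def __init__(
        self,
        send_event: Callable[[Dict[str, Any]], None],
        disable_playback: bool = False,
    ) -> None:
        self._send = send_event
        self._disable_playback = disable_playback  # mirrors the configuration event
        self.playing = False

    def start(self) -> None:
        """Call when the application starts playing a bot prompt."""
        self.playing = True

    def on_playback_finished(self) -> None:
        """t10: report that the prompt was fully played to the user."""
        was_playing = self.playing
        self.playing = False
        # Assumption: no completion event for a prompt that was interrupted,
        # and none at all when disablePlayback is true.
        if was_playing and not self._disable_playback:
            self._send({"type": "PlaybackCompletionEvent"})

    def on_bot_event(self, event: Dict[str, Any]) -> None:
        """t13 to t14: the user barged in, so stop playing the current prompt."""
        if event["type"] == "PlaybackInterruptionEvent":
            self.playing = False
```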

The following diagram shows the Bot response to user utterance 3, and that the conversation continues after the bot responds to the user utterance.