Deploy a Compiled Model Using the Console

You must satisfy the prerequisites if the model was compiled using the AWS SDK for Python (Boto3), the AWS CLI, or the Amazon SageMaker AI console. Follow the steps below to create and deploy a SageMaker AI Neo-compiled model using the SageMaker AI console at https://console.aws.amazon.com/.

Deploy the Model

After you have satisfied the prerequisites, use the following steps to deploy a model compiled with Neo:

-

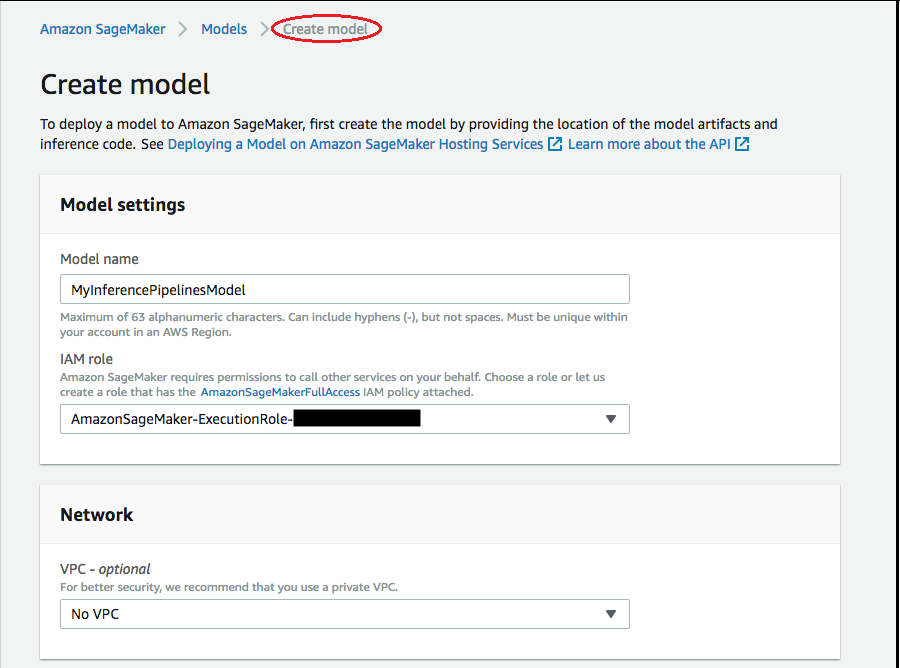

Choose Models, and then choose Create models from the Inference group. On the Create model page, complete the Model name and IAM role fields and, if needed, the optional VPC field.

-

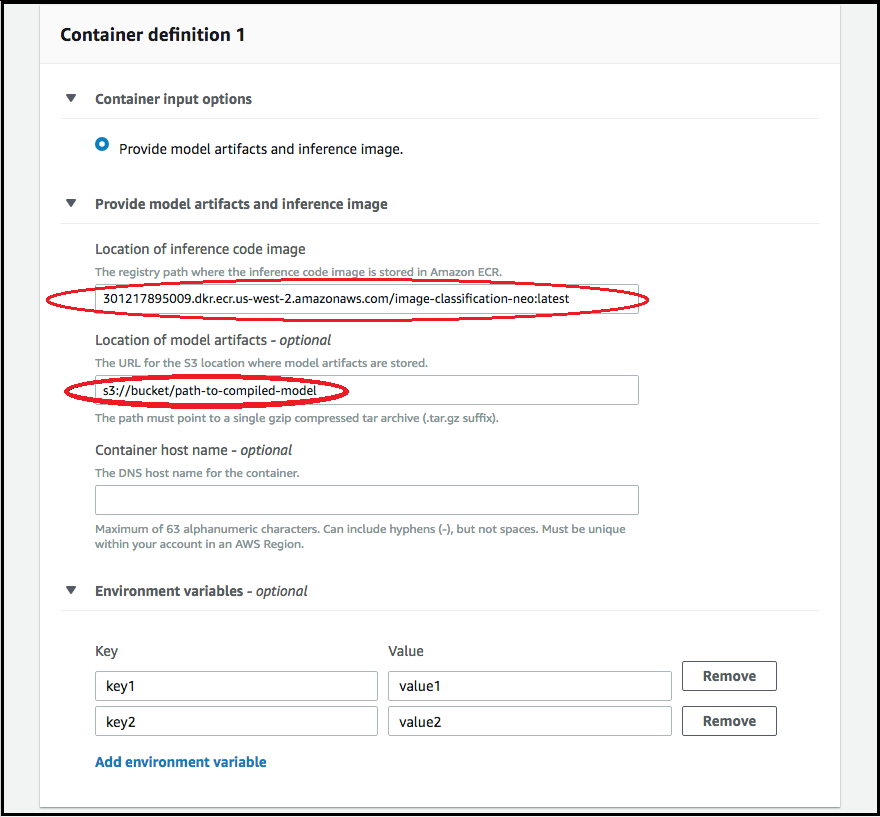

To add information about the container used to deploy your model, choose Add container, then choose Next. Complete the Container input options, Location of inference code image, and Location of model artifacts fields, and optionally the Container host name and Environment variables fields.

-

To deploy Neo-compiled models, choose the following:

-

Container input options: Choose Provide model artifacts and inference image.

-

Location of inference code image: Choose the inference image URI from Neo Inference Container Images, depending on the AWS Region and kind of application.

-

Location of model artifacts: Enter the Amazon S3 bucket URI of the compiled model artifact generated by the Neo compilation API.

-

Environment variables:

-

Leave this field blank for SageMaker XGBoost.

-

If you trained your model using SageMaker AI, specify the environment variable SAGEMAKER_SUBMIT_DIRECTORY as the Amazon S3 bucket URI that contains the training script.

-

If you did not train your model using SageMaker AI, specify the following environment variables:

| Key | Values for MXNet and PyTorch | Values for TensorFlow |
| --- | --- | --- |
| SAGEMAKER_PROGRAM | inference.py | inference.py |
| SAGEMAKER_SUBMIT_DIRECTORY | /opt/ml/model/code | /opt/ml/model/code |
| SAGEMAKER_CONTAINER_LOG_LEVEL | 20 | 20 |
| SAGEMAKER_REGION | <your region> | <your region> |
| MMS_DEFAULT_RESPONSE_TIMEOUT | 500 | Leave this field blank for TF |

-

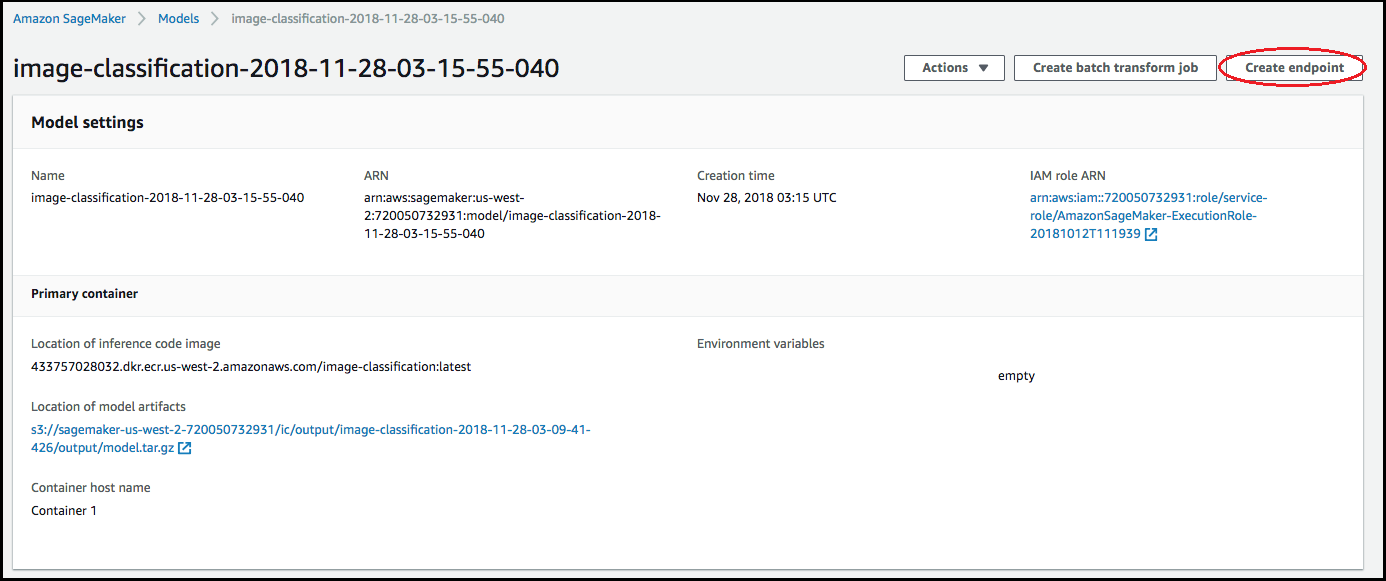

Confirm that the information for the containers is accurate, and then choose Create model. On the Create model landing page, choose Create endpoint.

-

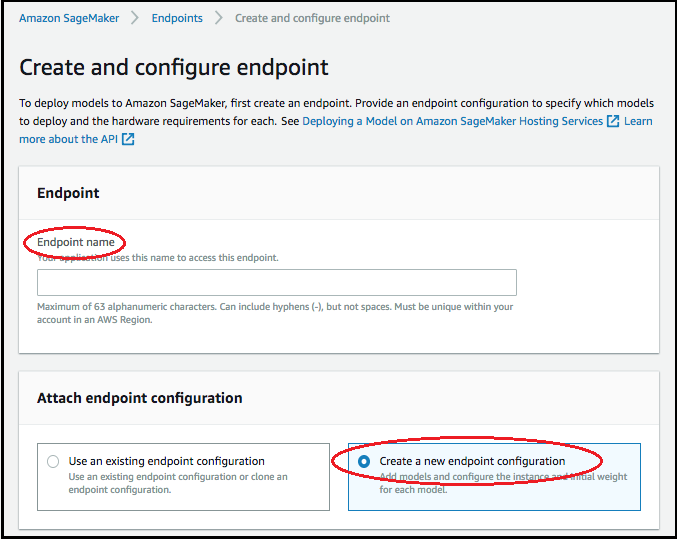

On the Create and configure endpoint page, specify the Endpoint name. For Attach endpoint configuration, choose Create a new endpoint configuration.

-

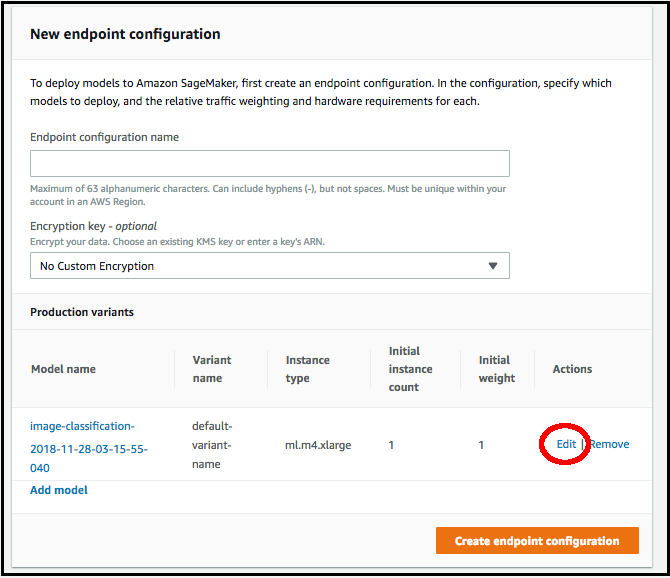

On the New endpoint configuration page, specify the Endpoint configuration name.

-

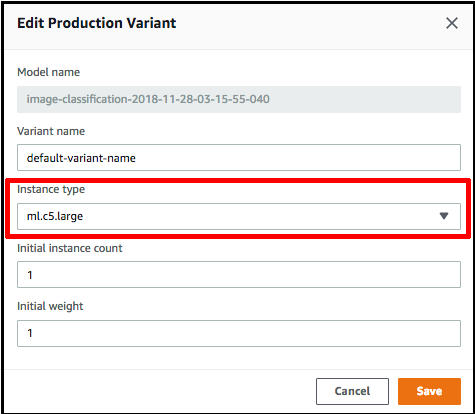

Choose Edit next to the name of the model, and then specify the correct Instance type on the Edit Production Variant page. The Instance type value must match the one specified in your compilation job.

-

Choose Save.

-

On the New endpoint configuration page, choose Create endpoint configuration, and then choose Create endpoint.
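
For readers who want to see how the console fields above relate to one another, the steps can be sketched as three request payloads for the SageMaker API operations CreateModel, CreateEndpointConfig, and CreateEndpoint. This is a minimal sketch, not the console's actual implementation. The function names, the framework labels, the `-config` and `-endpoint` name suffixes, and the `AllTraffic` variant name are all hypothetical choices made for illustration, and the environment values are the ones listed above for models that were not trained using SageMaker AI.

```python
def neo_environment(framework, region):
    """Build the container environment variables for a Neo-compiled model
    that was NOT trained using SageMaker AI.

    `framework` is a hypothetical label for this sketch:
    "mxnet", "pytorch", or "tensorflow".
    """
    if framework not in ("mxnet", "pytorch", "tensorflow"):
        raise ValueError("unsupported framework label: " + framework)
    env = {
        "SAGEMAKER_PROGRAM": "inference.py",
        "SAGEMAKER_SUBMIT_DIRECTORY": "/opt/ml/model/code",
        "SAGEMAKER_CONTAINER_LOG_LEVEL": "20",
        "SAGEMAKER_REGION": region,
    }
    # The response timeout is set for MXNet and PyTorch only; leave it out for TF.
    if framework in ("mxnet", "pytorch"):
        env["MMS_DEFAULT_RESPONSE_TIMEOUT"] = "500"
    return env


def build_requests(name, role_arn, image_uri, model_data_url,
                   instance_type, environment):
    """Return (model, endpoint_config, endpoint) request dictionaries.

    image_uri      -> "Location of inference code image"
    model_data_url -> "Location of model artifacts" (Amazon S3 URI)
    instance_type  -> must match the target of the compilation job
    """
    config_name = name + "-config"      # hypothetical naming scheme
    model = {
        "ModelName": name,
        "ExecutionRoleArn": role_arn,
        "PrimaryContainer": {
            "Image": image_uri,
            "ModelDataUrl": model_data_url,
            "Environment": environment,
        },
    }
    endpoint_config = {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",  # hypothetical variant name
                "ModelName": name,
                "InstanceType": instance_type,
                "InitialInstanceCount": 1,
            }
        ],
    }
    endpoint = {
        "EndpointName": name + "-endpoint",   # hypothetical naming scheme
        "EndpointConfigName": config_name,
    }
    return model, endpoint_config, endpoint


def deploy(client, model, endpoint_config, endpoint):
    """Issue the three calls in order on a SageMaker client object."""
    client.create_model(**model)
    client.create_endpoint_config(**endpoint_config)
    client.create_endpoint(**endpoint)
```

With Boto3 installed and credentials configured, passing `boto3.client("sagemaker")` as `client` would issue the calls. For SageMaker XGBoost, pass an empty dictionary as `environment`, matching the instruction to leave the field blank.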