ML lifecycle phase – Monitoring

The model monitoring system must capture data, compare that data to the training set, define rules to detect issues, and send alerts. This process repeats on a defined schedule, in response to an event, or when a person initiates it. Issues detected in the monitoring phase include degraded data quality, degraded model quality, bias drift, and feature attribution drift.
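As an illustration of the capture, compare, rule, and alert steps, here is a minimal sketch of a data quality check for a single numeric feature. The names `Baseline`, `build_baseline`, `check_data_quality`, and `send_alert`, and the 5% missing-value limit, are assumptions made for this example. They are not part of any specific monitoring service.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    """Summary statistics taken from the training set for one feature."""
    minimum: float
    maximum: float
    max_missing_rate: float  # hypothetical rule threshold


def build_baseline(training_values, max_missing_rate=0.05):
    """Derive the baseline from training data (None marks a missing value)."""
    present = [v for v in training_values if v is not None]
    return Baseline(min(present), max(present), max_missing_rate)


def check_data_quality(baseline, captured_values):
    """Compare captured inference data to the baseline and list violations."""
    if not captured_values:
        return ["no data captured"]

    violations = []

    # Rule 1: the share of missing values must stay under the limit.
    missing = sum(1 for v in captured_values if v is None)
    missing_rate = missing / len(captured_values)
    if missing_rate > baseline.max_missing_rate:
        violations.append(
            f"missing rate {missing_rate:.2f} exceeds "
            f"{baseline.max_missing_rate:.2f}"
        )

    # Rule 2: values must fall inside the range seen during training.
    out_of_range = [
        v for v in captured_values
        if v is not None and not (baseline.minimum <= v <= baseline.maximum)
    ]
    if out_of_range:
        violations.append(f"{len(out_of_range)} value(s) outside training range")

    return violations


def send_alert(violations):
    """Stand-in for a real notification channel; here it only prints."""
    for violation in violations:
        print(f"ALERT: {violation}")


baseline = build_baseline([1.0, 2.0, 3.0, 4.0])
send_alert(check_data_quality(baseline, [1.5, None, 9.0, 2.0]))
```

In practice the same check would run on a schedule or in response to an event, and the alert would go to an alarm manager instead of standard output.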

Figure 18: Post deployment monitoring - main components

Figure 18 lists key components of monitoring, including:

- Model explainability - The monitoring system uses explainability to evaluate the soundness of the model and whether its predictions can be trusted.

- Detect drift - The monitoring system detects data drift and concept drift, initiates an alert, and sends it to the alarm manager system. Data drift is a significant change in the data distribution compared to the data used for training. Concept drift is a change in the properties of the target variable, that is, in the relationship between the input features and the target. Either kind of drift can degrade model performance.

-

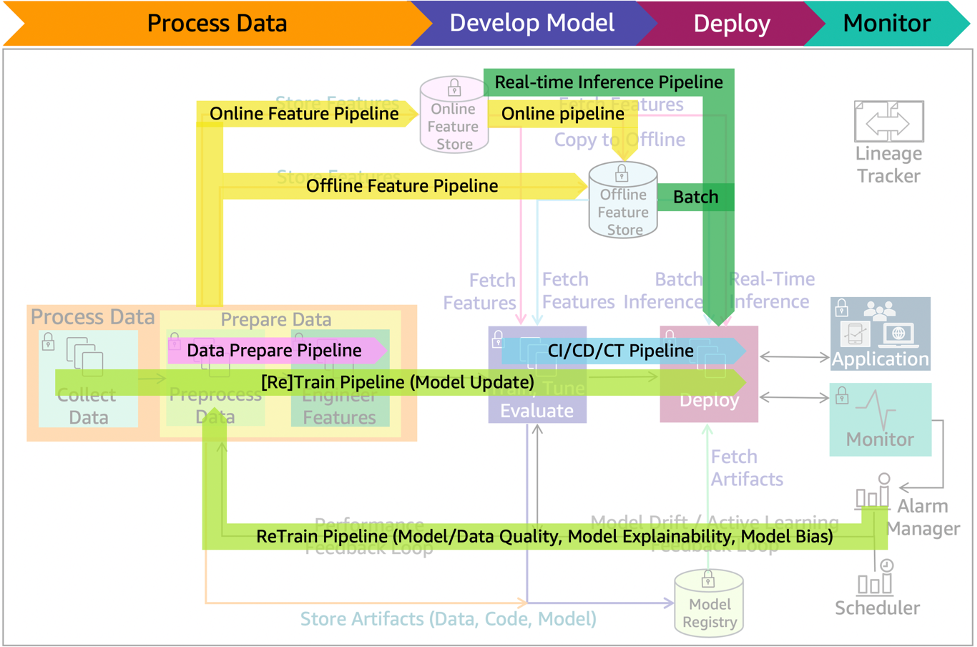

Model update pipeline - If the alarm manager identifies any violations, it launches the model update pipeline for a re-train. This can be seen in Figure 19. The Data prepare, CI/CD/CT, and Feature pipelines will also be active during this process.

Figure 19: ML lifecycle with model update re-train and batch/real-time inference pipelines
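The drift detection and alarm manager steps described in the list above can be sketched as follows. This example uses the Population Stability Index (PSI) as one possible drift measure. The source does not prescribe a specific metric, so the choice of PSI, the 0.2 threshold (a common rule of thumb), and the function names `population_stability_index` and `alarm_manager` are all assumptions made for illustration.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Measure data drift between training (expected) and live (actual) values.

    Bins are equal-width over the training range; live values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def alarm_manager(psi, threshold=0.2, on_violation=None):
    """Return True and call the hypothetical re-train hook if drift is too high."""
    if psi > threshold:
        if on_violation is not None:
            on_violation(psi)
        return True
    return False


training = [float(v) for v in range(100)]
live = [v + 50.0 for v in training]  # simulated shift in the live data

psi = population_stability_index(training, live)
alarm_manager(psi, on_violation=lambda s: print(f"launch re-train, PSI={s:.2f}"))
```

PSI only covers data drift on a single feature. Detecting concept drift generally requires ground-truth labels so that model quality can be compared against the training-time baseline.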