Powering Multiple Contact Centers with GenAI Using Amazon Bedrock

Publication date: October 4, 2023

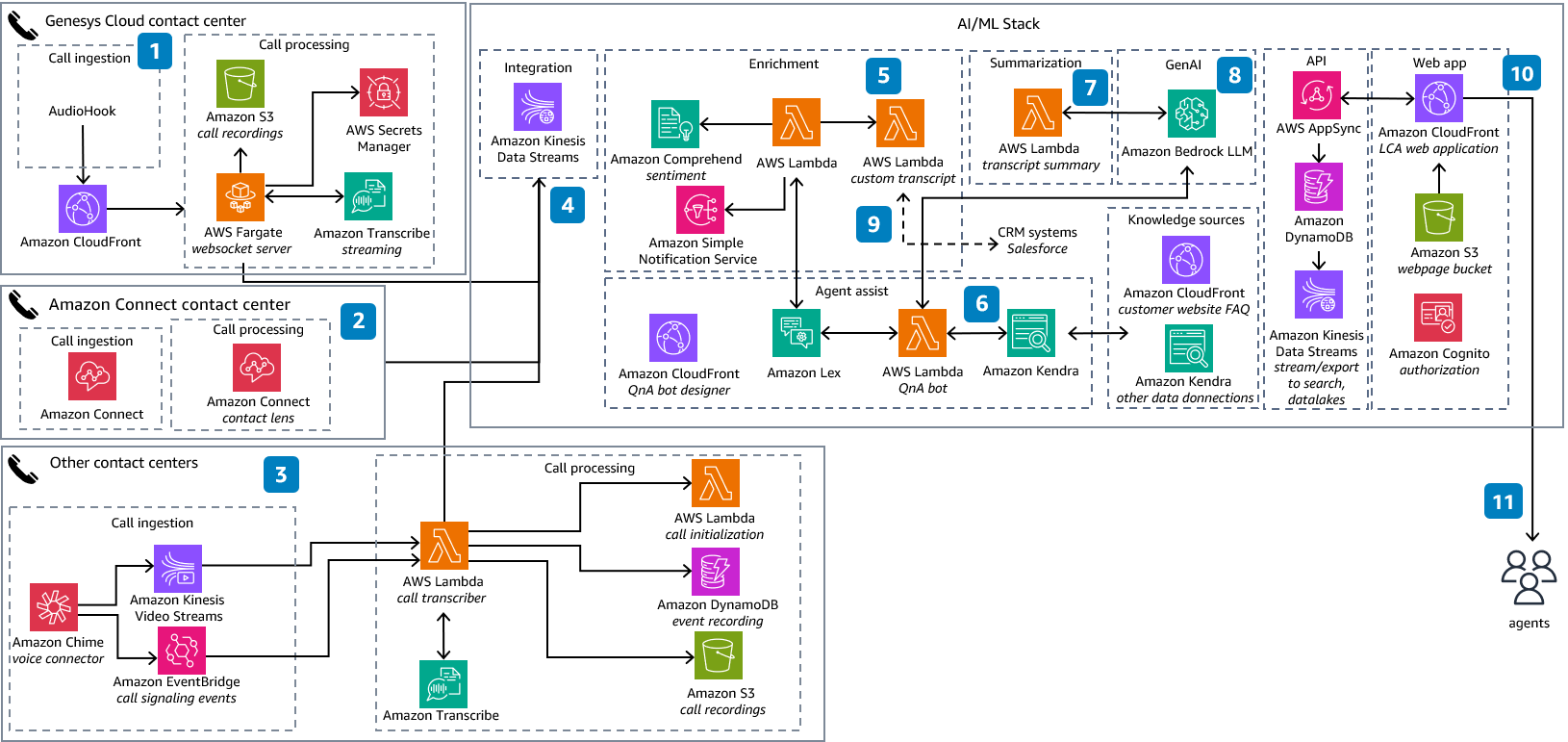

Many contact center operators run a hybrid setup with multiple vendors, where each contact center has its own artificial intelligence and machine learning (AI/ML) support. This architecture consolidates that support into a single AI/ML stack shared by multiple contact center instances: one large language model (LLM) hosted in Amazon Bedrock powers all of them, which can reduce cost and improve operational efficiency.

Powering Multiple Contact Centers with GenAI Using Amazon Bedrock Diagram

Calls from a Genesys Cloud contact center are ingested through an AudioHook WebSocket connection, and the call audio is processed by Amazon Transcribe.

Amazon Connect is an end-to-end, cloud-based contact center service with built-in AI/ML capabilities. Calls from Amazon Connect are processed by Amazon Connect Contact Lens.

Calls from any other contact center that supports the Session Recording Protocol (SIPREC) are ingested through Amazon Chime Voice Connector and processed by Amazon Transcribe.

Amazon Kinesis Data Streams carries the call transcripts from all contact center instances concurrently.
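
A Lambda consumer of the transcript stream might group incoming segments by call, as in the minimal sketch below. Only the envelope follows the standard Lambda Kinesis event format (each record's payload arrives base64-encoded under `Records[].kinesis.data`); the segment layout (`CallId`, `Channel`, `Transcript`) and the function name `decode_kinesis_event` are hypothetical.

```python
import base64
import json
from collections import defaultdict


def decode_kinesis_event(event):
    """Group transcript segments from a Lambda Kinesis event by call ID.

    Assumes each record payload is a UTF-8 JSON object with hypothetical
    "CallId", "Channel" and "Transcript" fields.
    """
    calls = defaultdict(list)
    for record in event.get("Records", []):
        # Lambda delivers each Kinesis record's data base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        segment = json.loads(payload)
        calls[segment["CallId"]].append(segment)
    return dict(calls)


# Usage: build a fake event shaped the way Lambda delivers Kinesis records.
def _record(segment):
    data = base64.b64encode(json.dumps(segment).encode("utf-8")).decode("ascii")
    return {"kinesis": {"data": data}}


event = {"Records": [
    _record({"CallId": "c1", "Channel": "CALLER", "Transcript": "Hi, I need help."}),
    _record({"CallId": "c2", "Channel": "AGENT", "Transcript": "Thanks for calling."}),
    _record({"CallId": "c1", "Channel": "AGENT", "Transcript": "Sure, what's wrong?"}),
]}
by_call = decode_kinesis_event(event)
print({call: len(segs) for call, segs in by_call.items()})  # {'c1': 2, 'c2': 1}
```

If producers use the call ID as the partition key, every segment of a call maps to the same shard, and Kinesis preserves record order within a shard; the sketch relies on that ordering when it appends segments.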

An AWS Lambda function calls Amazon Comprehend to run sentiment analysis on the transcripts, determining agent and caller sentiment. Lambda also initiates agent assist and transcript summarization.
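
The sentiment step can be sketched as follows, assuming transcript segments are dicts with hypothetical `Channel` (`AGENT` or `CALLER`) and `Transcript` keys. `detect_sentiment(Text=..., LanguageCode=...)` is the real Amazon Comprehend operation; the client is passed in so the sketch runs without credentials (a deployed function would pass `boto3.client("comprehend")`). The net score, Positive minus Negative averaged per channel, is an illustrative choice rather than something this architecture prescribes, and the byte limit is an assumption to verify against current quotas.

```python
from collections import defaultdict

# Assumed per-request text limit for DetectSentiment (5 KB at the time of
# writing); check the current Amazon Comprehend quotas before relying on it.
MAX_TEXT_BYTES = 5000


def truncate_utf8(text, limit=MAX_TEXT_BYTES):
    """Trim text to `limit` UTF-8 bytes without splitting a character."""
    return text.encode("utf-8")[:limit].decode("utf-8", errors="ignore")


def channel_sentiment(segments, comprehend, language_code="en"):
    """Average (Positive - Negative) sentiment score per channel.

    `comprehend` is a boto3 Comprehend client or any object exposing the
    same detect_sentiment(Text=..., LanguageCode=...) method.
    """
    scores = defaultdict(list)
    for seg in segments:
        resp = comprehend.detect_sentiment(
            Text=truncate_utf8(seg["Transcript"]), LanguageCode=language_code
        )
        s = resp["SentimentScore"]
        scores[seg["Channel"]].append(s["Positive"] - s["Negative"])
    return {channel: sum(v) / len(v) for channel, v in scores.items()}
```

For calls with many segments, Comprehend's `batch_detect_sentiment` operation can reduce the number of API requests compared with one request per segment.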

Agent assist is built on Amazon Lex and Amazon Kendra. Amazon Lex provides the conversational interface and uses a Lambda function to query Amazon Kendra, which provides intelligent search.
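
The Lex-to-Kendra hop can be sketched as an Amazon Lex V2 fulfillment Lambda. The response shape (`sessionState.dialogAction.type` set to `Close`, plus `messages`) follows the Lex V2 Lambda format, and `query(IndexId=..., QueryText=...)` is the real Amazon Kendra operation. The helper names and index ID are hypothetical, and the Kendra client is injected (for example `boto3.client("kendra")`) so the sketch can be exercised with a stub.

```python
def kendra_answer(question, kendra, index_id, max_items=3):
    """Query Amazon Kendra and join the top excerpts into one reply."""
    resp = kendra.query(IndexId=index_id, QueryText=question)
    lines = []
    for item in resp.get("ResultItems", [])[:max_items]:
        excerpt = item.get("DocumentExcerpt", {}).get("Text")
        if excerpt:
            title = item.get("DocumentTitle", {}).get("Text", "Untitled")
            lines.append(f"{title}: {excerpt}")
    return "\n".join(lines) or "No matching content was found."


def lex_fulfillment(event, kendra, index_id):
    """Return an Amazon Lex V2 'Close' response carrying the Kendra answer."""
    intent = event["sessionState"]["intent"]
    answer = kendra_answer(event["inputTranscript"], kendra, index_id)
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {"name": intent["name"], "state": "Fulfilled"},
        },
        "messages": [{"contentType": "PlainText", "content": answer}],
    }
```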

When the call ends, the call event processor Lambda function invokes the transcript summarization Lambda function, which generates a summary of the call from the full transcript.
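
The end-of-call trigger might look like the minimal sketch below. `invoke(FunctionName=..., InvocationType=..., Payload=...)` is the real AWS Lambda API (via `boto3.client("lambda")` in a deployment); the event keys `EventType` and `CallId`, the `END` value, and the summarization function name are hypothetical.

```python
import json


def handle_call_event(call_event, lambda_client, summary_function):
    """Start transcript summarization asynchronously when a call ends.

    `call_event` uses hypothetical "EventType" and "CallId" keys, and
    `summary_function` is the (hypothetical) name or ARN of the
    summarization Lambda. Returns True if a request was sent.
    """
    if call_event.get("EventType") != "END":
        return False
    lambda_client.invoke(
        FunctionName=summary_function,
        InvocationType="Event",  # asynchronous: returns without waiting
        Payload=json.dumps({"CallId": call_event["CallId"]}).encode("utf-8"),
    )
    return True
```

`InvocationType="Event"` queues the request and returns immediately, so a slow LLM summarization call does not hold up the processor while it handles events from other calls.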

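The LLM hosted in Amazon Bedrock uses retrieval-augmented generation (RAG) with Amazon Kendra to work with enterprise data securely: relevant enterprise content is retrieved from Amazon Kendra at request time and supplied to the LLM as context, so responses are grounded in that data without fine-tuning the model.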
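
The RAG flow can be sketched as: retrieve passages, place them in the prompt, and call the model. `retrieve(IndexId=..., QueryText=..., PageSize=...)` is Amazon Kendra's passage-retrieval operation and `invoke_model` is the Amazon Bedrock runtime operation (`boto3.client("bedrock-runtime")`). The model ID and the `prompt`/`max_tokens_to_sample` request body are assumptions based on the Anthropic Claude v2 text-completion format offered in Bedrock around this diagram's publication; newer models expect different request bodies. The prompt wording and helper names are hypothetical.

```python
import json

# Hypothetical prompt wording in the Claude v2 "Human:/Assistant:" format.
PROMPT_TEMPLATE = (
    "\n\nHuman: Answer the question using only the documents below.\n"
    "<documents>\n{context}\n</documents>\n"
    "Question: {question}\n\nAssistant:"
)


def retrieve_passages(question, kendra, index_id, page_size=5):
    """Fetch relevant passages from Amazon Kendra's Retrieve API."""
    resp = kendra.retrieve(IndexId=index_id, QueryText=question, PageSize=page_size)
    return [item["Content"] for item in resp.get("ResultItems", [])]


def build_prompt(question, passages):
    """Wrap each retrieved passage in tags and insert them as context."""
    context = "\n".join(f"<document>{p}</document>" for p in passages)
    return PROMPT_TEMPLATE.format(context=context, question=question)


def answer_with_rag(question, kendra, bedrock_runtime, index_id,
                    model_id="anthropic.claude-v2"):
    """Retrieve context, then ask the Bedrock-hosted model to answer."""
    prompt = build_prompt(question, retrieve_passages(question, kendra, index_id))
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        contentType="application/json",
        accept="application/json",
        body=json.dumps({"prompt": prompt, "max_tokens_to_sample": 500}),
    )
    # The body is a stream; Claude v2 returns generated text under "completion".
    return json.loads(response["body"].read())["completion"].strip()
```

Because the enterprise content travels in the prompt, updating the Kendra index changes what the model can draw on without retraining it.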

After the call summary is generated, the Live Call Analytics (LCA) call event/transcript processor invokes a post-call summary Lambda hook, which writes the call summary to a CRM system such as Salesforce.
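
A post-call hook could record the summary in Salesforce as a completed Task through the Salesforce REST API (`POST /services/data/<version>/sobjects/Task/`). This is a minimal sketch under stated assumptions: the instance URL, API version, and access token are hypothetical placeholders, the choice of the Task object and its fields is illustrative, and the request is only built here, not sent (`urllib.request.urlopen(req)` would send it).

```python
import json
import urllib.request

# Hypothetical values: replace with your org's instance URL and API version.
INSTANCE_URL = "https://example.my.salesforce.com"
API_VERSION = "v58.0"


def build_task_request(summary, call_id, access_token,
                       instance_url=INSTANCE_URL, api_version=API_VERSION):
    """Build (but do not send) a request that logs the summary as a Task."""
    body = {
        "Subject": f"Call summary {call_id}",
        "Description": summary,
        "Status": "Completed",
        "TaskSubtype": "Call",
    }
    url = f"{instance_url}/services/data/{api_version}/sobjects/Task/"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {access_token}",
                 "Content-Type": "application/json"},
        method="POST",
    )
```

Linking the Task to the caller's Contact or Case record (for example through `WhoId` or `WhatId`) would require a lookup step that this sketch omits.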

The web application establishes a secure GraphQL connection to the AWS AppSync API and subscribes to receive real-time events, such as new calls and call status changes for the calls list page, and new or updated transcription segments and computed analytics for the call details page.
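
The client side of the AppSync subscription can be sketched with the AWS AppSync real-time WebSocket protocol: derive the `appsync-realtime-api` endpoint from the GraphQL endpoint, pass a base64-encoded auth header and an empty payload (`e30=` is base64 for `{}`) in the query string, connect with the `graphql-ws` subprotocol, send `{"type": "connection_init"}`, wait for `connection_ack`, then send a `start` message. API-key authorization, the default (non-custom-domain) endpoint, and the subscription name and fields are assumptions; the architecture's actual schema and auth mode may differ. The sketch only builds the URL and messages, leaving the WebSocket library choice open.

```python
import base64
import json
import uuid

# Hypothetical subscription; the real schema's names and fields may differ.
SUBSCRIPTION = "subscription OnCallUpdated { onCallUpdated { CallId Status } }"


def _host(graphql_url):
    """Extract the host name from an https:// GraphQL endpoint URL."""
    return graphql_url.split("//", 1)[1].split("/", 1)[0]


def realtime_url(graphql_url, api_key):
    """Derive the AppSync real-time WebSocket URL for API-key auth."""
    header = {"host": _host(graphql_url), "x-api-key": api_key}
    encoded = base64.b64encode(json.dumps(header).encode("utf-8")).decode("ascii")
    wss = graphql_url.replace("https://", "wss://").replace(
        "appsync-api", "appsync-realtime-api")
    return f"{wss}?header={encoded}&payload=e30="


def start_message(graphql_url, api_key, query, variables=None):
    """Build the 'start' message that registers a GraphQL subscription."""
    return {
        "id": str(uuid.uuid4()),
        "type": "start",
        "payload": {
            "data": json.dumps({"query": query, "variables": variables or {}}),
            "extensions": {"authorization": {"host": _host(graphql_url),
                                             "x-api-key": api_key}},
        },
    }
```

Events then arrive as `data` messages carrying the same `id`, which lets one socket serve both the calls list and the call details subscriptions.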

Amazon CloudFront delivers the custom dashboard web application that agents use.

Contributors

Contributors to this reference architecture diagram include:

Ninad Joshi, AI/ML Partner Solutions Architect, Amazon Web Services

Diagram history

| Change | Description | Date |
|---|---|---|
| Initial publication | Reference architecture diagram first published. | October 4, 2023 |
