This whitepaper is for historical reference only. Some content might be outdated and some links might not be available.

Purpose-built integration service

Amazon AppFlow: Introduction

Amazon AppFlow automatically encrypts data in motion, and optionally allows you to restrict data from flowing over the public internet for SaaS applications that are integrated with AWS PrivateLink.

Architecture overview

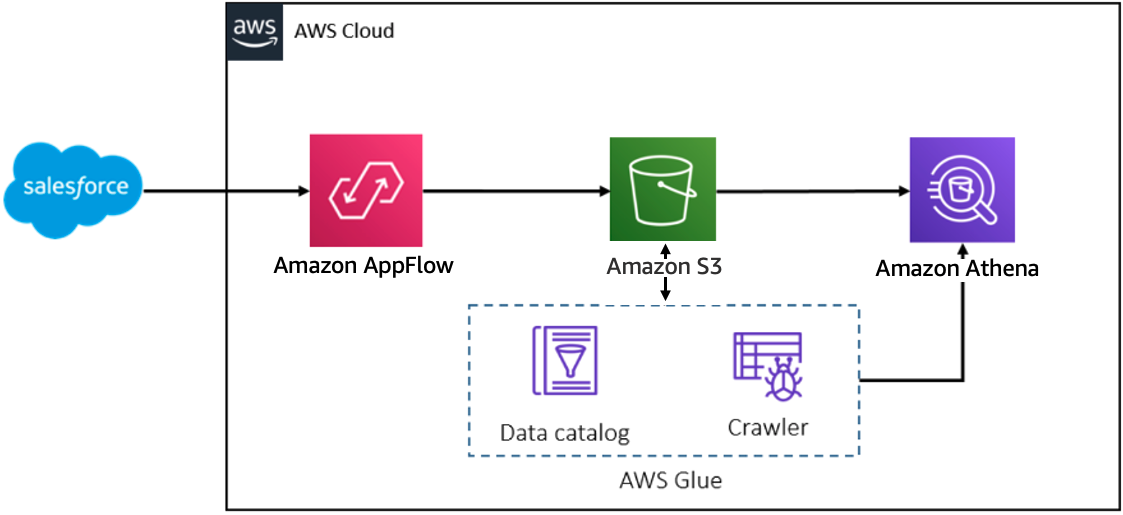

The following diagram depicts the architecture of the solution where data from

Salesforce is ingested into Amazon S3 using Amazon AppFlow. Once the data is ingested in Amazon S3,

you can use an AWS Glue

crawler to populate the AWS Glue Data Catalog

with tables and start consuming this data using SQL in Amazon Athena

Amazon AppFlow-based data ingestion pattern
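The cataloging and query steps of this pattern can be sketched with the AWS SDK for Python (boto3). This is a minimal sketch under stated assumptions, not a reference implementation: the crawler name, IAM role ARN, database, bucket paths, and SQL text are all hypothetical placeholders, and the polling loop has no timeout or error handling. The two builder functions are pure Python; only `run_crawler_then_query` needs boto3 and AWS credentials.

```python
import time
from typing import Any, Dict


def crawler_request(name: str, role_arn: str, database: str, s3_path: str) -> Dict[str, Any]:
    """Build keyword arguments for the AWS Glue CreateCrawler call."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }


def athena_query_request(sql: str, database: str, output_location: str) -> Dict[str, Any]:
    """Build keyword arguments for the Amazon Athena StartQueryExecution call."""
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def run_crawler_then_query(
    crawler_kwargs: Dict[str, Any],
    query_kwargs: Dict[str, Any],
    poll_seconds: int = 15,
) -> str:
    """Create and run the crawler, wait for it to finish, then start the query.

    Requires boto3 and AWS credentials. Returns the Athena query execution ID.
    """
    import boto3  # imported lazily so the builders above work without the SDK

    glue = boto3.client("glue")
    glue.create_crawler(**crawler_kwargs)
    glue.start_crawler(Name=crawler_kwargs["Name"])

    # The crawler runs asynchronously and returns to READY when the run ends.
    while glue.get_crawler(Name=crawler_kwargs["Name"])["Crawler"]["State"] != "READY":
        time.sleep(poll_seconds)

    athena = boto3.client("athena")
    return athena.start_query_execution(**query_kwargs)["QueryExecutionId"]


# Hypothetical values, for illustration only.
example_crawler = crawler_request(
    name="salesforce-landing-crawler",
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueCrawlerRole",
    database="salesforce_landing",
    s3_path="s3://example-data-lake/salesforce/",
)
example_query = athena_query_request(
    sql="SELECT * FROM opportunity LIMIT 10",
    database="salesforce_landing",
    output_location="s3://example-athena-results/",
)
```

Keeping the request construction separate from the AWS calls makes the parameters easy to review before anything is created in an account.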

Usage patterns

Because Amazon AppFlow connects to many SaaS applications and takes a low-code/no-code approach, it appeals to teams that want a quick and easy mechanism to ingest data from these applications.

Some use cases are as follows:

- Create a copy of a Salesforce object (for example, opportunity, case, campaign) in Amazon S3.
- Send case tickets from Zendesk to Amazon S3.
- Hydrate an Amazon S3 data lake with transactional data from SAP S/4HANA enterprise resource planning (ERP).
- Send logs, metrics, and dashboards from Datadog to Amazon S3, to create monthly reports or perform other analyses automatically, instead of doing this manually.
- Send Marketo data, like new leads or email responses, to Amazon S3.
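As an illustration of the first use case, the following sketch builds a request for the Amazon AppFlow `CreateFlow` API that copies one Salesforce object to Amazon S3, and then optionally submits it with boto3. The flow name, connector profile name, bucket, and prefix are hypothetical, and the connector profile is assumed to exist already. The on-demand trigger, Parquet output, and `Map_all` task are choices made for this example; verify the exact task shape against the API reference before relying on it.

```python
from typing import Any, Dict


def salesforce_to_s3_flow(
    flow_name: str,
    connector_profile: str,
    salesforce_object: str,
    bucket: str,
    prefix: str,
) -> Dict[str, Any]:
    """Build keyword arguments for an on-demand Salesforce-to-S3 flow."""
    return {
        "flowName": flow_name,
        "triggerConfig": {"triggerType": "OnDemand"},
        "sourceFlowConfig": {
            "connectorType": "Salesforce",
            "connectorProfileName": connector_profile,
            "sourceConnectorProperties": {
                "Salesforce": {"object": salesforce_object}
            },
        },
        "destinationFlowConfigList": [
            {
                "connectorType": "S3",
                "destinationConnectorProperties": {
                    "S3": {
                        "bucketName": bucket,
                        "bucketPrefix": prefix,
                        "s3OutputFormatConfig": {"fileType": "PARQUET"},
                    }
                },
            }
        ],
        # Assumption: a single Map_all task that maps every source field as-is.
        "tasks": [
            {
                "sourceFields": [],
                "connectorOperator": {"Salesforce": "NO_OP"},
                "taskType": "Map_all",
                "taskProperties": {},
            }
        ],
    }


def create_and_start_flow(request: Dict[str, Any]) -> Dict[str, Any]:
    """Create the flow and start one run. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above works without the SDK

    appflow = boto3.client("appflow")
    appflow.create_flow(**request)
    return appflow.start_flow(flowName=request["flowName"])


# Hypothetical values, for illustration only.
example_flow = salesforce_to_s3_flow(
    flow_name="salesforce-opportunity-to-s3",
    connector_profile="example-salesforce-profile",
    salesforce_object="Opportunity",
    bucket="example-data-lake",
    prefix="salesforce",
)
```

The same builder could be adapted to the other use cases by changing the source connector type and its properties, although each connector has its own property names.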

Considerations

If a SaaS application you want to get data from is not supported out of the box, you can build your own connector using the Amazon AppFlow custom connector SDK. AWS has released the Python custom connector SDK.

If any of the following scenarios apply, other ingestion patterns discussed in this paper may be a better fit for your type of ingestion:

- A supported application is heavily customized.
- Your use case exceeds any of the application-specific limitations.

For every SaaS application that Amazon AppFlow supports, there is a set of documented limitations. For example, if you are transferring more than one million Salesforce records, you cannot choose any Salesforce compound field. Before using Amazon AppFlow, review the limitations for the application that you plan to connect, evaluate your use case against them, and confirm that the service is still a good fit for what you are trying to do.
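A limitation like the Salesforce example above can be checked before a flow is configured. The sketch below is a hypothetical pre-flight helper, not part of Amazon AppFlow: the function name is invented for illustration, and the caller must supply both the expected record count and the set of compound field names for the object, for example from the Salesforce object's field metadata.

```python
from typing import Iterable, List

# Threshold taken from the limitation described above: more than one million
# Salesforce records means compound fields cannot be selected.
SALESFORCE_COMPOUND_FIELD_RECORD_LIMIT = 1_000_000


def compound_field_violations(
    record_count: int,
    selected_fields: Iterable[str],
    compound_fields: Iterable[str],
) -> List[str]:
    """Return the selected fields that break the compound-field limitation.

    An empty list means the selection is compatible with the limitation.
    """
    if record_count <= SALESFORCE_COMPOUND_FIELD_RECORD_LIMIT:
        return []
    compound = set(compound_fields)
    return [field for field in selected_fields if field in compound]


# Hypothetical usage: BillingAddress is a compound address field on Account.
violations = compound_field_violations(
    record_count=2_500_000,
    selected_fields=["Id", "Name", "BillingAddress"],
    compound_fields={"BillingAddress", "ShippingAddress"},
)
```

If the returned list is not empty, one option is to select the component fields individually instead of the compound field, or to consider one of the other ingestion patterns.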

SaaS applications are sometimes heavily customized, so it is always good to confirm that your edge cases can be handled with Amazon AppFlow. You can find the list of known limitations and considerations in the notes section of the Amazon AppFlow documentation for each application. For example, the documentation lists the known limitations for Salesforce as a source.

Also, review the Amazon AppFlow service quotas to ensure that your use case fits within them.