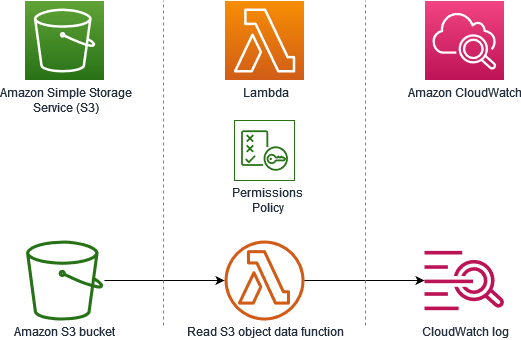

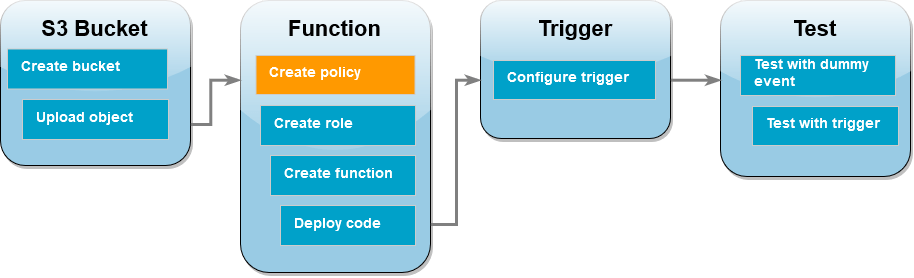

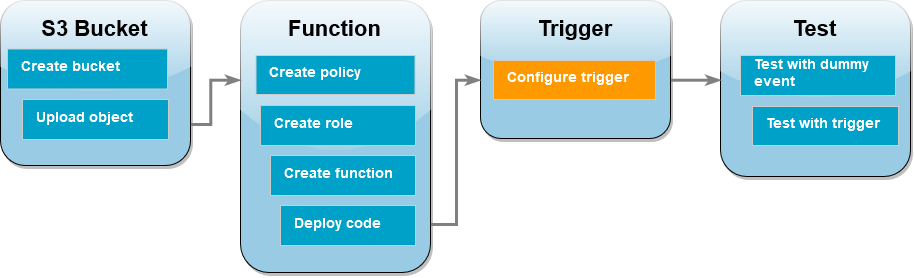

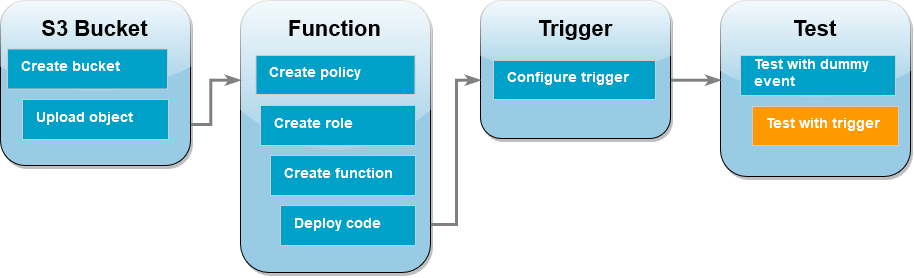

Tutorial: Using an Amazon S3 trigger to invoke a Lambda function

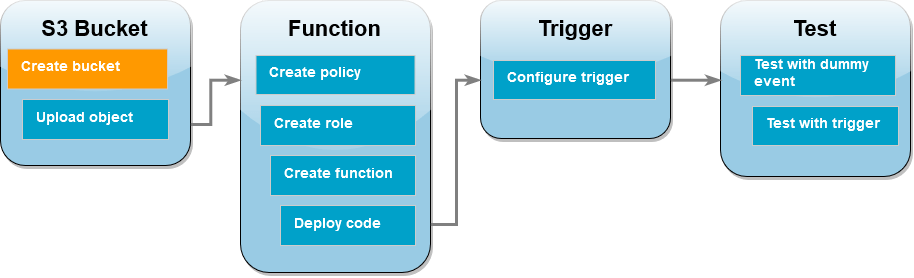

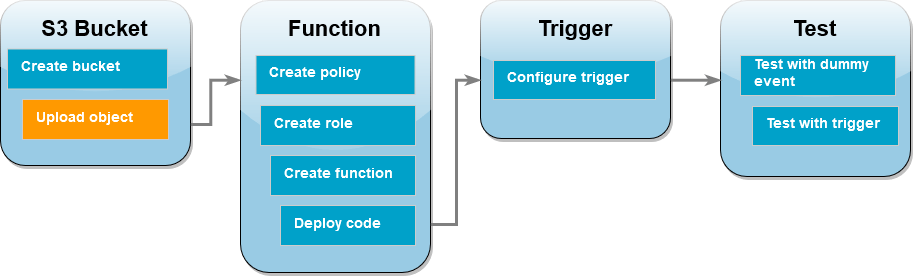

In this tutorial, you use the console to create a Lambda function and configure a trigger for an Amazon Simple Storage Service (Amazon S3) bucket. Every time that you add an object to your Amazon S3 bucket, your function runs and outputs the object type to Amazon CloudWatch Logs.

This tutorial demonstrates how to:

- Create an Amazon S3 bucket.
- Create a Lambda function that returns the object type of objects in an Amazon S3 bucket.
- Configure a Lambda trigger that invokes your function when objects are uploaded to your bucket.
- Test your function, first with a dummy event, and then using the trigger.

By completing these steps, you’ll learn how to configure a Lambda function to run whenever objects are added to or deleted from an Amazon S3 bucket. You can complete this tutorial using only the AWS Management Console.

Create an Amazon S3 bucket

To create an Amazon S3 bucket

-

Open the Amazon S3 console

and select the General purpose buckets page. -

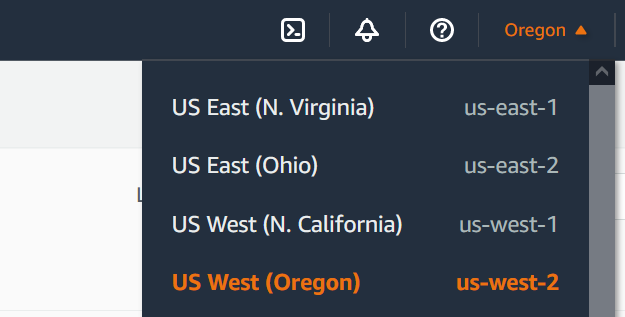

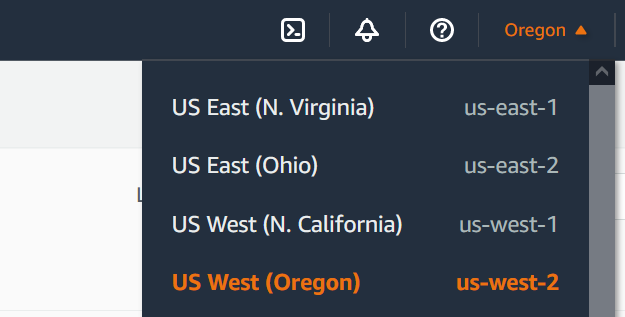

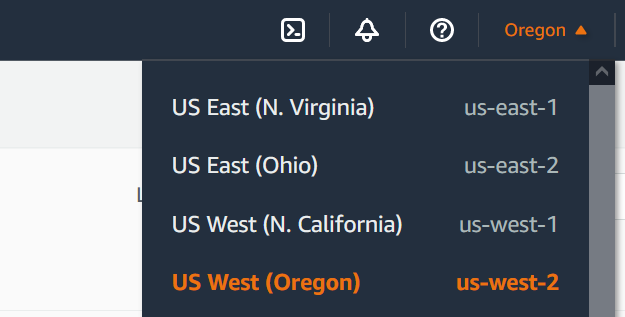

Select the AWS Region closest to your geographical location. You can change your region using the drop-down list at the top of the screen. Later in the tutorial, you must create your Lambda function in the same Region.

-

Choose Create bucket.

-

Under General configuration, do the following:

-

For Bucket type, ensure General purpose is selected.

-

For Bucket name, enter a globally unique name that meets the Amazon S3 Bucket naming rules. Bucket names can contain only lower case letters, numbers, dots (.), and hyphens (-).

-

-

Leave all other options set to their default values and choose Create bucket.
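Before entering a bucket name in the console, it can help to check it locally. The following is a minimal sketch that covers only a subset of the Amazon S3 naming rules: the character set stated above, plus the 3 to 63 character length limit, the requirement to begin and end with a letter or number, and the bans on adjacent dots and IP-address-style names. The function name is hypothetical, and the check cannot tell you whether a name is globally unique or uses a reserved prefix or suffix.

```python
import re

# Lowercase letters, numbers, dots, and hyphens; 3-63 characters;
# must begin and end with a letter or number.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
# Names formatted like an IPv4 address (for example, 192.168.5.4) are not allowed.
_IPV4_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Return True if the name passes this partial, local check."""
    if not _BUCKET_NAME_RE.fullmatch(name):
        return False
    if ".." in name:  # two adjacent dots are not allowed
        return False
    if _IPV4_RE.fullmatch(name):
        return False
    return True


if __name__ == "__main__":
    for candidate in ("my-tutorial-bucket-2024", "MyBucket", "ab", "192.168.5.4"):
        print(candidate, looks_like_valid_bucket_name(candidate))
```

A name that passes can still be rejected by the console, for example because another account already owns it.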

Upload a test object to your bucket

To upload a test object

- Open the Buckets page of the Amazon S3 console and choose the bucket you created during the previous step.
- Choose Upload.
- Choose Add files and select the object that you want to upload. You can select any file (for example, HappyFace.jpg).
- Choose Open, then choose Upload.

Later in the tutorial, you’ll test your Lambda function using this object.

Create a permissions policy

Create a permissions policy that allows Lambda to get objects from an Amazon S3 bucket and to write to Amazon CloudWatch Logs.

To create the policy

- Open the Policies page of the IAM console.
- Choose Create Policy.
- Choose the JSON tab, and then paste the following custom policy into the JSON editor.
- Choose Next: Tags.
- Choose Next: Review.
- Under Review policy, for the policy Name, enter s3-trigger-tutorial.
- Choose Create policy.
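The JSON editor step expects a policy document. As a minimal sketch, the following Python builds one that grants the access described above (reading objects from Amazon S3 and writing to CloudWatch Logs) and prints it as JSON for pasting. The helper name, the statement layout, and the choice to scope `s3:GetObject` to a single bucket are assumptions, and `my-tutorial-bucket-2024` is a placeholder bucket name.

```python
import json


def build_tutorial_policy(bucket_name: str) -> dict:
    """Build an IAM policy document for the tutorial's execution role (sketch)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the function create its log group and stream and write log events.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
            {
                # Lets the function read objects from the tutorial bucket only (assumption).
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_tutorial_policy("my-tutorial-bucket-2024"), indent=2))
```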

Create an execution role

An execution role is an AWS Identity and Access Management (IAM) role that grants a Lambda function permission to access AWS services and resources. In this step, create an execution role using the permissions policy that you created in the previous step.

To create an execution role and attach your custom permissions policy

- Open the Roles page of the IAM console.
- Choose Create role.
- For the type of trusted entity, choose AWS service, then for the use case, choose Lambda.
- Choose Next.
- In the policy search box, enter s3-trigger-tutorial.
- In the search results, select the policy that you created (s3-trigger-tutorial), and then choose Next.
- Under Role details, for the Role name, enter lambda-s3-trigger-role, then choose Create role.
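Choosing AWS service and Lambda as the trusted entity gives the role a trust policy that lets the Lambda service assume it. As a sketch for reference, that trust relationship looks like the document below. The constant name is hypothetical, and the console generates the policy for you, so nothing needs to be pasted in this step.

```python
import json

# Trust policy allowing the Lambda service to assume the execution role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(LAMBDA_TRUST_POLICY, indent=2))
```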

Create the Lambda function

Create a Lambda function in the console using the Python 3.13 runtime.

To create the Lambda function

- Open the Functions page of the Lambda console.
- Make sure you're working in the same AWS Region you created your Amazon S3 bucket in. You can change your Region using the drop-down list at the top of the screen.
- Choose Create function.
- Choose Author from scratch.
- Under Basic information, do the following:
  - For Function name, enter s3-trigger-tutorial.
  - For Runtime, choose Python 3.13.
  - For Architecture, choose x86_64.
- In the Change default execution role tab, do the following:
  - Expand the tab, then choose Use an existing role.
  - Select the lambda-s3-trigger-role you created earlier.
- Choose Create function.

Deploy the function code

This tutorial uses the Python 3.13 runtime, but we’ve also provided example code files for other runtimes. You can select the tab in the following box to see the code for the runtime you’re interested in.

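The Lambda function retrieves the key name of the uploaded object and the name of the bucket from the event parameter it receives from Amazon S3. The function then uses the get_object method from the AWS SDK for Python (Boto3) to retrieve the object's metadata, including the content type (MIME type) of the uploaded object.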
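As a minimal sketch of a handler that behaves as described, the following Python reads the bucket name and object key from the event, calls `get_object`, and logs the content type. The optional `s3_client` parameter and the `_get_s3_client` helper are assumptions added so that the function can be exercised locally with a stand-in client. Lambda itself passes only `event` and `context`.

```python
import urllib.parse

_s3_client = None  # created lazily so the module imports without AWS credentials


def _get_s3_client():
    """Return a cached Boto3 S3 client, creating it on first use."""
    global _s3_client
    if _s3_client is None:
        import boto3  # included in the Lambda Python runtimes

        _s3_client = boto3.client("s3")
    return _s3_client


def lambda_handler(event, context, s3_client=None):
    """Log and return the content type of the object named in an S3 event."""
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    # Object keys arrive URL-encoded in Amazon S3 event notifications.
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"], encoding="utf-8")

    client = s3_client or _get_s3_client()
    try:
        response = client.get_object(Bucket=bucket, Key=key)
    except Exception:
        print(f"Error getting object {key} from bucket {bucket}.")
        raise

    content_type = response["ContentType"]
    print("CONTENT TYPE: " + content_type)
    return content_type
```

Decoding the key with `unquote_plus` matters for object names that contain spaces or slashes, because those characters are encoded in the event.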

To deploy the function code

- Choose the Python tab in the following box and copy the code.
- In the Code source pane on the Lambda console, paste the code into the code editor, replacing the code that Lambda created.
- In the DEPLOY section, choose Deploy to update your function's code:

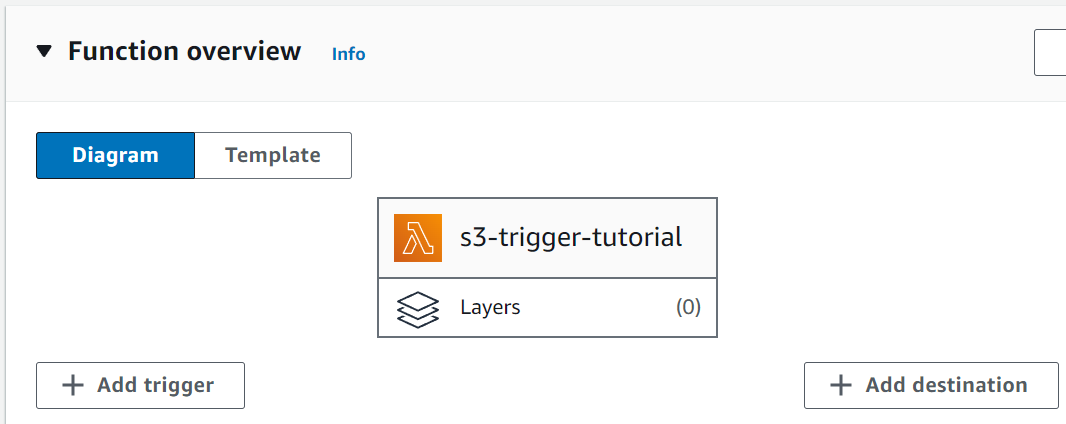

Create the Amazon S3 trigger

To create the Amazon S3 trigger

- In the Function overview pane, choose Add trigger.
- Select S3.
- Under Bucket, select the bucket you created earlier in the tutorial.
- Under Event types, be sure that All object create events is selected.
- Under Recursive invocation, select the check box to acknowledge that using the same Amazon S3 bucket for input and output is not recommended.
- Choose Add.

Note

When you create an Amazon S3 trigger for a Lambda function using the Lambda console, Amazon S3 configures an event notification on the bucket you specify. Before configuring this event notification, Amazon S3 performs a series of checks to confirm that the event destination exists and has the required IAM policies. Amazon S3 also performs these tests on any other event notifications configured for that bucket.

Because of this check, if the bucket has previously configured event destinations for resources that no longer exist, or for resources that don't have the required permissions policies, Amazon S3 won't be able to create the new event notification. You'll see the following error message indicating that your trigger couldn't be created:

An error occurred when creating the trigger: Unable to validate the following destination configurations.

You can see this error if you previously configured a trigger for another Lambda function using the same bucket, and you have since deleted the function or modified its permissions policies.
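To make the event notification mentioned in this note more concrete, the following sketch builds the kind of notification configuration that corresponds to an "All object create events" trigger. The helper name, the `Id` value, and the example ARN are placeholders. The console creates this configuration for you, and it also adds the permission that lets Amazon S3 invoke the function, which this sketch does not cover.

```python
def build_notification_configuration(function_arn: str) -> dict:
    """Build a bucket notification configuration that targets one Lambda function."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "Id": "s3-trigger-tutorial",  # hypothetical identifier
                "LambdaFunctionArn": function_arn,
                # Matches every object-created event (Put, Post, Copy, multipart upload).
                "Events": ["s3:ObjectCreated:*"],
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder ARN; substitute your own Region, account ID, and function name.
    config = build_notification_configuration(
        "arn:aws:lambda:us-east-1:123456789012:function:s3-trigger-tutorial"
    )
    print(config)
    # With Boto3, a dictionary of this shape is what you would pass as
    # NotificationConfiguration to put_bucket_notification_configuration.
```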

Test your Lambda function with a dummy event

To test the Lambda function with a dummy event

- In the Lambda console page for your function, choose the Test tab.
- For Event name, enter MyTestEvent.
- In the Event JSON, paste the following test event. Be sure to replace these values:
  - Replace us-east-1 with the Region you created your Amazon S3 bucket in.
  - Replace both instances of amzn-s3-demo-bucket with the name of your own Amazon S3 bucket.
  - Replace test%2Fkey with the name of the test object you uploaded to your bucket earlier (for example, HappyFace.jpg).

      {
        "Records": [
          {
            "eventVersion": "2.0",
            "eventSource": "aws:s3",
            "awsRegion": "us-east-1",
            "eventTime": "1970-01-01T00:00:00.000Z",
            "eventName": "ObjectCreated:Put",
            "userIdentity": {
              "principalId": "EXAMPLE"
            },
            "requestParameters": {
              "sourceIPAddress": "127.0.0.1"
            },
            "responseElements": {
              "x-amz-request-id": "EXAMPLE123456789",
              "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"
            },
            "s3": {
              "s3SchemaVersion": "1.0",
              "configurationId": "testConfigRule",
              "bucket": {
                "name": "amzn-s3-demo-bucket",
                "ownerIdentity": {
                  "principalId": "EXAMPLE"
                },
                "arn": "arn:aws:s3:::amzn-s3-demo-bucket"
              },
              "object": {
                "key": "test%2Fkey",
                "size": 1024,
                "eTag": "0123456789abcdef0123456789abcdef",
                "sequencer": "0A1B2C3D4E5F678901"
              }
            }
          }
        ]
      }

- Choose Save.
- Choose Test.
- If your function runs successfully, you’ll see output similar to the following in the Execution results tab.

      Response
      "image/jpeg"

      Function Logs
      START RequestId: 12b3cae7-5f4e-415e-93e6-416b8f8b66e6 Version: $LATEST
      2021-02-18T21:40:59.280Z 12b3cae7-5f4e-415e-93e6-416b8f8b66e6 INFO INPUT BUCKET AND KEY: { Bucket: 'amzn-s3-demo-bucket', Key: 'HappyFace.jpg' }
      2021-02-18T21:41:00.215Z 12b3cae7-5f4e-415e-93e6-416b8f8b66e6 INFO CONTENT TYPE: image/jpeg
      END RequestId: 12b3cae7-5f4e-415e-93e6-416b8f8b66e6
      REPORT RequestId: 12b3cae7-5f4e-415e-93e6-416b8f8b66e6 Duration: 976.25 ms Billed Duration: 977 ms Memory Size: 128 MB Max Memory Used: 90 MB Init Duration: 430.47 ms

      Request ID
      12b3cae7-5f4e-415e-93e6-416b8f8b66e6
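The three replacements in the test event can also be done programmatically. The following sketch builds a trimmed-down event from a Region, bucket name, and object key, and URL-encodes the key the way it appears in the sample event (`test%2Fkey` stands for `test/key`). The helper name is hypothetical, and the reduced field set is an assumption: it keeps only fields that a function reading the bucket name and key would need.

```python
import json
import urllib.parse


def build_test_event(region: str, bucket: str, key: str) -> dict:
    """Build a minimal S3 "ObjectCreated:Put" test event (sketch, not the full schema)."""
    return {
        "Records": [
            {
                "eventVersion": "2.0",
                "eventSource": "aws:s3",
                "awsRegion": region,
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket, "arn": f"arn:aws:s3:::{bucket}"},
                    # Keys are URL-encoded in the event: "/" becomes %2F, spaces become "+".
                    "object": {"key": urllib.parse.quote_plus(key)},
                },
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own Region, bucket, and object name.
    print(json.dumps(build_test_event("us-east-1", "my-tutorial-bucket-2024", "HappyFace.jpg"), indent=2))
```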

Test the Lambda function with the Amazon S3 trigger

To test your function with the configured trigger, upload an object to your Amazon S3 bucket using the console. To verify that your Lambda function ran as expected, use CloudWatch Logs to view your function’s output.

To upload an object to your Amazon S3 bucket

- Open the Buckets page of the Amazon S3 console and choose the bucket that you created earlier.
- Choose Upload.
- Choose Add files and use the file selector to choose an object you want to upload. This object can be any file you choose.
- Choose Open, then choose Upload.

To verify the function invocation using CloudWatch Logs

- Open the CloudWatch console.
- Make sure you're working in the same AWS Region you created your Lambda function in. You can change your Region using the drop-down list at the top of the screen.
- Choose Logs, then choose Log groups.
- Choose the log group for your function (/aws/lambda/s3-trigger-tutorial).
- Under Log streams, choose the most recent log stream.
- If your function was invoked correctly in response to your Amazon S3 trigger, you’ll see output similar to the following. The CONTENT TYPE you see depends on the type of file you uploaded to your bucket.

      2022-05-09T23:17:28.702Z 0cae7f5a-b0af-4c73-8563-a3430333cc10 INFO CONTENT TYPE: image/jpeg

Clean up your resources

You can now delete the resources that you created for this tutorial, unless you want to retain them. By deleting AWS resources that you're no longer using, you prevent unnecessary charges to your AWS account.

To delete the Lambda function

- Open the Functions page of the Lambda console.
- Select the function that you created.
- Choose Actions, Delete.
- Type confirm in the text input field and choose Delete.

To delete the execution role

- Open the Roles page of the IAM console.
- Select the execution role that you created.
- Choose Delete.
- Enter the name of the role in the text input field and choose Delete.

To delete the S3 bucket

- Open the Amazon S3 console.
- Select the bucket you created.
- Choose Delete.
- Enter the name of the bucket in the text input field.
- Choose Delete bucket.

Next steps

In Tutorial: Using an Amazon S3 trigger to create thumbnail images, the Amazon S3 trigger invokes a function that creates a thumbnail image for each image file that is uploaded to a bucket. This tutorial requires a moderate level of AWS and Lambda domain knowledge. It demonstrates how to create resources using the AWS Command Line Interface (AWS CLI) and how to create a .zip file archive deployment package for the function and its dependencies.