Decompose monoliths into microservices by using CQRS and event sourcing

Rodolfo Jr. Cerrada, Dmitry Gulin, and Tabby Ward, Amazon Web Services

Summary

This pattern combines two patterns: the command query responsibility separation (CQRS) pattern and the event sourcing pattern. The CQRS pattern separates the responsibilities of the command and query models. The event sourcing pattern uses asynchronous event-driven communication to improve the overall user experience.

You can use CQRS and Amazon Web Services (AWS) services to maintain and scale each data model independently while refactoring your monolith application into a microservices architecture. Then you can use the event sourcing pattern to synchronize data from the command database to the query database.

This pattern uses example code that includes a solution (*.sln) file that you can open using the latest version of Visual Studio. The example contains Reward API code to showcase how CQRS and event sourcing work in AWS serverless and traditional or on-premises applications.

To learn more about CQRS and event sourcing, see the Additional information section.

Prerequisites and limitations

Prerequisites

An active AWS account

Amazon CloudWatch

Amazon DynamoDB tables

Amazon DynamoDB Streams

AWS Identity and Access Management (IAM) access key and secret key; for more information, see the video in the Related resources section

AWS Lambda

Familiarity with Visual Studio

Familiarity with AWS Toolkit for Visual Studio; for more information, see the AWS Toolkit for Visual Studio demo video in the Related resources section

Product versions

.NET Core 3.1. This component is an option in the Visual Studio installation. To include .NET Core during installation, select .NET Core cross-platform development.

Limitations

The example code for a traditional on-premises application (ASP.NET Core Web API and data access objects) does not come with a database. However, it comes with the CustomerData in-memory object, which acts as a mock database. The code provided is enough for you to test the pattern.

Architecture

Source technology stack

ASP.NET Core Web API project

IIS Web Server

Data access object

CRUD model

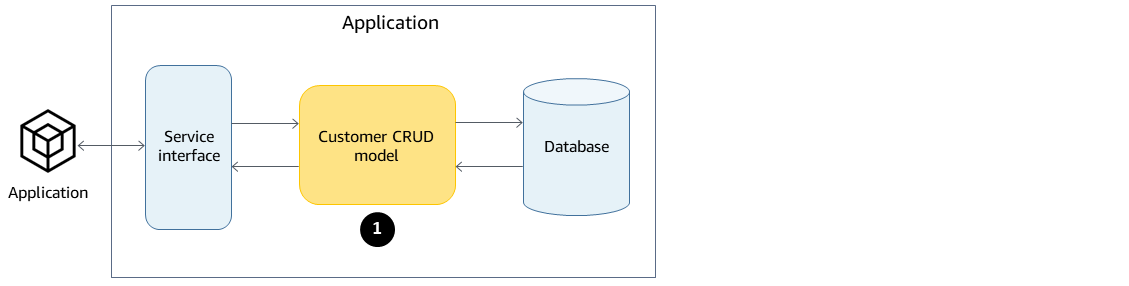

Source architecture

In the source architecture, the CRUD model contains both command and query interfaces in one application. For example code, see CustomerDAO.cs (attached).

Target technology stack

Amazon DynamoDB

Amazon DynamoDB Streams

AWS Lambda

(Optional) Amazon API Gateway

(Optional) Amazon Simple Notification Service (Amazon SNS)

Target architecture

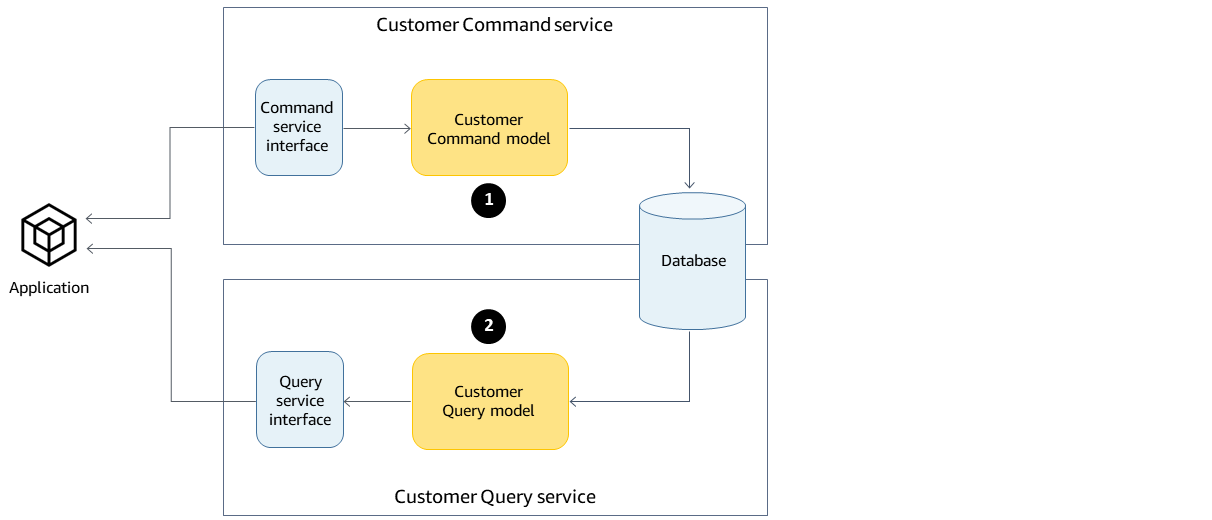

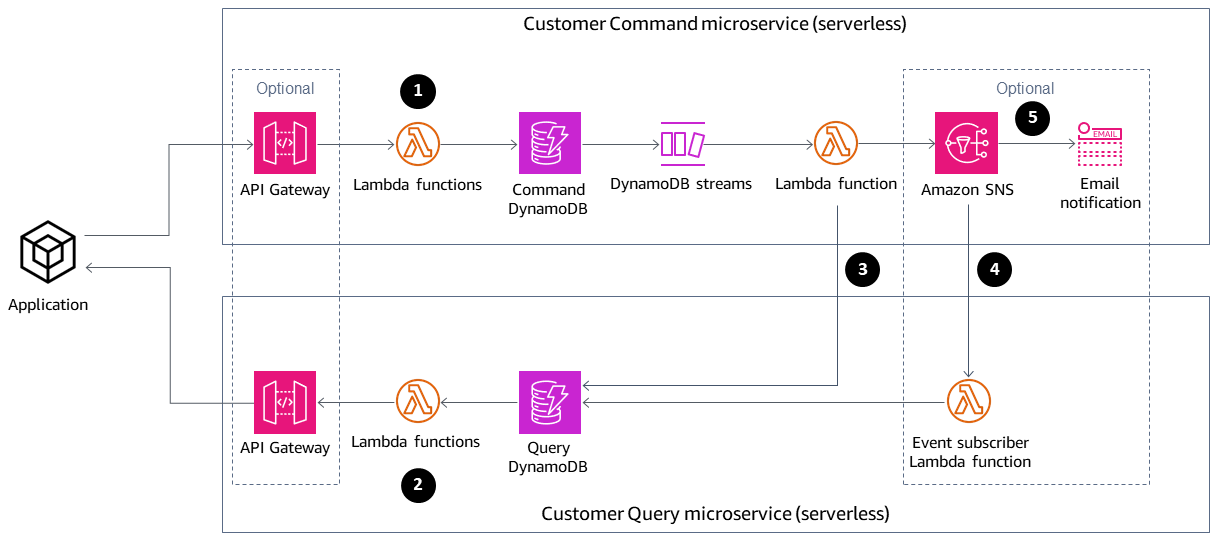

In the target architecture, the command and query interfaces are separated. The architecture shown in the diagram can be extended with API Gateway and Amazon SNS. For more information, see the Additional information section.

Command Lambda functions perform write operations, such as create, update, or delete, on the database.

Query Lambda functions perform read operations, such as get or select, on the database.

An event-sourcing Lambda function processes the DynamoDB stream from the Command database and updates the Query database with the changes.

Tools

Amazon DynamoDB – Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability.

Amazon DynamoDB Streams – DynamoDB Streams captures a time-ordered sequence of item-level modifications in any DynamoDB table. It then stores this information in a log for up to 24 hours. Encryption at rest encrypts the data in DynamoDB streams.

AWS Lambda – AWS Lambda is a compute service that supports running code without provisioning or managing servers. Lambda runs your code only when needed and scales automatically, from a few requests per day to thousands per second. You pay only for the compute time that you consume—there is no charge when your code is not running.

AWS Management Console – The AWS Management Console is a web application that comprises a broad collection of service consoles for managing AWS services.

Visual Studio 2019 Community Edition – Visual Studio 2019 is an integrated development environment (IDE). The Community Edition is free for open-source contributors. In this pattern, you will use Visual Studio 2019 Community Edition to open, compile, and run example code. For viewing only, you can use any text editor or Visual Studio Code.

AWS Toolkit for Visual Studio – The AWS Toolkit for Visual Studio is a plugin for the Visual Studio IDE. The AWS Toolkit for Visual Studio makes it easier for you to develop, debug, and deploy .NET applications that use AWS services.

Code

The example code is attached. For instructions on deploying the example code, see the Epics section.

Epics

| Task | Description | Skills required |
|---|---|---|
| Open the solution. | | App developer |
| Build the solution. | Open the context (right-click) menu for the solution, and then choose Build Solution. This will build and compile all the projects in the solution. It should compile successfully. Visual Studio Solution Explorer should show the directory structure. | App developer |

| Task | Description | Skills required |
|---|---|---|
| Provide credentials. | If you don't have an access key yet, see the video in the Related resources section. | App developer, Data engineer, DBA |
| Build the project. | To build the project, open the context (right-click) menu for the AWS.APG.CQRSES.Build project, and then choose Build. | App developer, Data engineer, DBA |
| Build and populate the tables. | To build the tables and populate them with seed data, open the context (right-click) menu for the AWS.APG.CQRSES.Build project, and then choose Debug, Start New Instance. | App developer, Data engineer, DBA |
| Verify the table construction and the data. | To verify, navigate to AWS Explorer, and expand Amazon DynamoDB. It should display the tables. Open each table to display the example data. | App developer, Data engineer, DBA |

| Task | Description | Skills required |
|---|---|---|
| Build the CQRS project. | | App developer, Test engineer |
| Build the event-sourcing project. | | App developer, Test engineer |
| Run the tests. | To run all tests, choose View, Test Explorer, and then choose Run All Tests In View. All tests should pass, which is indicated by a green check mark icon. | App developer, Test engineer |

| Task | Description | Skills required |
|---|---|---|
| Publish the first Lambda function. | | App developer, DevOps engineer |
| Verify the function upload. | (Optional) You can verify that the function was successfully loaded by navigating to AWS Explorer and expanding AWS Lambda. To open the test window, choose the Lambda function (double-click). | App developer, DevOps engineer |
| Test the Lambda function. | All CQRS Lambda projects are found under the | App developer, DevOps engineer |
| Publish the remaining functions. | Repeat the previous steps for the following projects: | App developer, DevOps engineer |

| Task | Description | Skills required |
|---|---|---|
| Publish the Customer and Reward Lambda event handlers. | To publish each event handler, follow the steps in the preceding epic. The projects are under the | App developer |
| Attach the event-sourcing Lambda event listener. | After the listener is successfully attached to the DynamoDB table, it will be displayed on the Lambda designer page. | App developer |
| Publish and attach the EventSourceReward Lambda function. | To publish and attach the | App developer |

| Task | Description | Skills required |
|---|---|---|
| Test the stream and the Lambda trigger. | | App developer |
| Validate, using the DynamoDB reward query table. | | App developer |
| Validate, using CloudWatch Logs. | | App developer |
| Validate the EventSourceCustomer trigger. | To validate the | App developer |

Related resources

References

Videos

Additional information

CQRS and event sourcing

CQRS

The CQRS pattern separates a single conceptual operations model, such as a data access object single CRUD (create, read, update, delete) model, into command and query operations models. The command model refers to any operation, such as create, update, or delete, that changes the state. The query model refers to any operation that returns a value.

The Customer CRUD model includes the following interfaces:

CreateCustomer()

UpdateCustomer()

DeleteCustomer()

AddPoints()

RedeemPoints()

GetVIPCustomers()

GetCustomerList()

GetCustomerPoints()

As your requirements become more complex, you can move from this single-model approach. CQRS uses a command model and a query model to separate the responsibility for writing and reading data. That way, the data can be independently maintained and managed. With a clear separation of responsibilities, enhancements to each model do not impact the other. This separation improves maintenance and performance, and it reduces the complexity of the application as it grows.

Interfaces in the Customer Command model:

CreateCustomer()

UpdateCustomer()

DeleteCustomer()

AddPoints()

RedeemPoints()

Interfaces in the Customer Query model:

GetVIPCustomers()

GetCustomerList()

GetCustomerPoints()

GetMonthlyStatement()

For example code, see Source code directory.

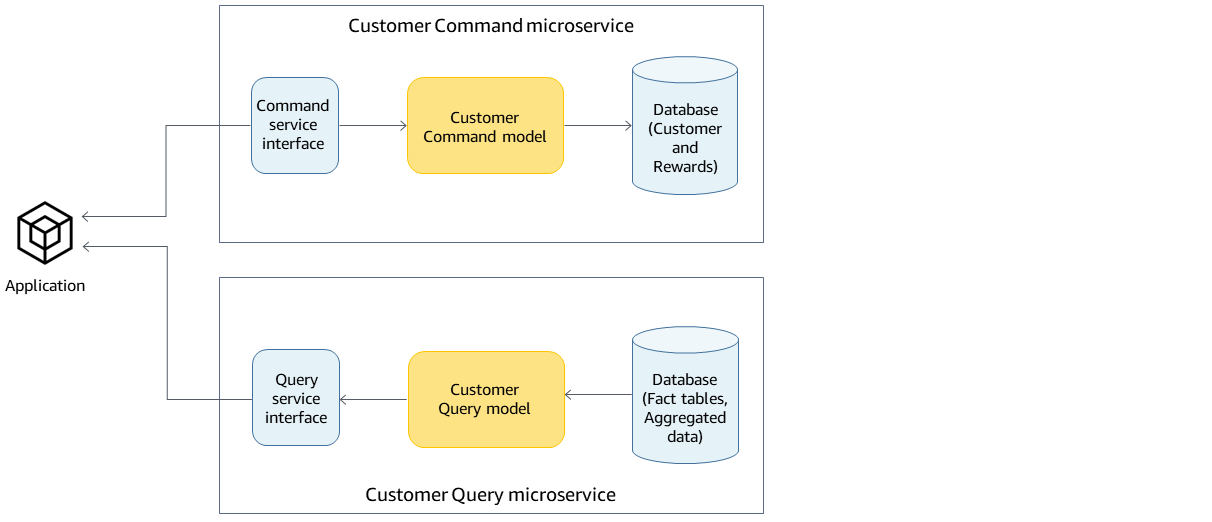
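The command and query split listed above can be sketched roughly as follows. This is a minimal Python illustration, not the attached C# example code. The class names, the `Customer` fields, and the in-memory dictionary are assumptions made for the sketch; only the method names mirror the interfaces listed above. For brevity, both models share one store here, whereas a full CQRS setup would give the query model its own database.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    id: int
    name: str
    vip: bool = False
    points: int = 0


class CustomerCommand:
    """Command model: every method changes state and returns nothing."""

    def __init__(self, store: dict):
        self._store = store  # hypothetical in-memory stand-in for the command database

    def create_customer(self, customer: Customer) -> None:
        self._store[customer.id] = customer

    def delete_customer(self, customer_id: int) -> None:
        self._store.pop(customer_id, None)

    def add_points(self, customer_id: int, points: int) -> None:
        self._store[customer_id].points += points

    def redeem_points(self, customer_id: int, points: int) -> None:
        customer = self._store[customer_id]
        if points > customer.points:
            raise ValueError("not enough points to redeem")
        customer.points -= points


class CustomerQuery:
    """Query model: every method returns a value and never changes state."""

    def __init__(self, store: dict):
        self._store = store  # shared here only to keep the sketch short

    def get_customer_list(self) -> list:
        return sorted(self._store.values(), key=lambda c: c.id)

    def get_vip_customers(self) -> list:
        return [c for c in self.get_customer_list() if c.vip]

    def get_customer_points(self, customer_id: int) -> int:
        return self._store[customer_id].points


store = {}
commands = CustomerCommand(store)
queries = CustomerQuery(store)
commands.create_customer(Customer(id=1, name="Richard", vip=True))
commands.add_points(1, 50)
commands.redeem_points(1, 20)
print(queries.get_customer_points(1))  # 30
```

Because callers hold either a command object or a query object, each side can later be moved behind its own service and database without changing the other.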

The CQRS pattern then decouples the database. This decoupling leads to the total independence of each service, which is the main ingredient of microservice architecture.

Using CQRS in the AWS Cloud, you can further optimize each service. For example, you can set different compute settings or choose between a serverless or a container-based microservice. You can replace your on-premises caching with Amazon ElastiCache. If you have on-premises publish/subscribe messaging, you can replace it with Amazon Simple Notification Service (Amazon SNS). Additionally, you can take advantage of pay-as-you-go pricing and the wide array of AWS services, so that you pay only for what you use.

CQRS includes the following benefits:

Independent scaling – Each model can have its scaling strategy adjusted to meet the requirements and demand of the service. Similar to high-performance applications, separating read and write enables the model to scale independently to address each demand. You can also add or reduce compute resources to address the scalability demand of one model without affecting the other.

Independent maintenance – Separation of query and command models improves the maintainability of the models. You can make code changes and enhancements to one model without affecting the other.

Security – It's easier to apply the permissions and policies to separate models for read and write.

Optimized reads – You can define a schema that is optimized for queries. For example, you can define a schema for the aggregated data and a separate schema for the fact tables.

Integration – CQRS fits well with event-based programming models.

Managed complexity – The separation into query and command models is suited to complex domains.

When using CQRS, keep in mind the following caveats:

The CQRS pattern applies only to a specific portion of an application and not the whole application. If implemented on a domain that does not fit the pattern, it can reduce productivity, increase risk, and introduce complexity.

The pattern works best for frequently used models that have an imbalance of read and write operations.

For read-heavy applications, such as large reports that take time to process, CQRS gives you the option to select the right database and create a schema to store your aggregated data. This improves the response time of reading and viewing the report by processing the report data only one time and dumping it in the aggregated table.

For write-heavy applications, you can configure the database for write operations and allow the command microservice to scale independently when the demand for write increases. For examples, see the AWS.APG.CQRSES.CommandRedeemRewardLambda and AWS.APG.CQRSES.CommandAddRewardLambda microservices.
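For the read-heavy case described above, the idea of processing the data once and storing the result in an aggregated table can be sketched as follows. The record layout, the sample values, and the function name are assumptions for illustration only; they are not taken from the attached example code.

```python
from collections import defaultdict

# Hypothetical reward transactions (the write-side "fact" rows).
transactions = [
    {"CustomerId": 1, "Points": 100},
    {"CustomerId": 1, "Points": -30},
    {"CustomerId": 2, "Points": 10},
]


def build_aggregate(rows):
    """Aggregate once so that later reads become a single key lookup."""
    totals = defaultdict(int)
    for row in rows:
        totals[row["CustomerId"]] += row["Points"]
    return dict(totals)


# The aggregated "table" that the query side would read from.
aggregated_points = build_aggregate(transactions)
print(aggregated_points[1])  # 70
```

The trade-off is that the aggregate must be refreshed whenever new transactions arrive, which is the role that event sourcing plays in the next section.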

Event sourcing

The next step is to use event sourcing to synchronize the query database when a command is run. For example, consider the following events:

A customer reward point is added that requires the customer total or aggregated reward points in the query database to be updated.

A customer's last name is updated in the command database, which requires the surrogate customer information in the query database to be updated.

In the traditional CRUD model, you ensure consistency of data by locking the data until it finishes a transaction. In event sourcing, the data are synchronized through publishing a series of events that will be consumed by a subscriber to update its respective data.

The event-sourcing pattern records the full series of actions taken on the data and publishes them as a sequence of events. These events represent a set of changes to the data that subscribers of that event must process to keep their record updated. These events are consumed by the subscriber, synchronizing the data on the subscriber's database. In this case, that's the query database.

The following diagram shows event sourcing used with CQRS on AWS.

Command Lambda functions perform write operations, such as create, update, or delete, on the database.

Query Lambda functions perform read operations, such as get or select, on the database.

An event-sourcing Lambda function processes the DynamoDB stream from the Command database and updates the Query database with the changes. You can also use this function to publish a message to Amazon SNS so that its subscribers can process the data.

(Optional) The Lambda event subscriber processes the message published by Amazon SNS and updates the Query database.

(Optional) Amazon SNS sends email notification of the write operation.

On AWS, the query database can be synchronized by using DynamoDB Streams. DynamoDB Streams captures a time-ordered sequence of item-level modifications in a DynamoDB table in near-real time and durably stores the information for up to 24 hours.

Activating DynamoDB Streams enables the database to publish a sequence of events that makes the event sourcing pattern possible. The event sourcing pattern adds the event subscriber. The event subscriber application consumes the event and processes it depending on the subscriber's responsibility. In the previous diagram, the event subscriber pushes the changes to the Query DynamoDB database to keep the data synchronized. The use of Amazon SNS, the message broker, and the event subscriber application keeps the architecture decoupled.
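As a rough sketch of the event subscriber described above, the following Python function applies DynamoDB stream records to a query-side store. It assumes that the stream is configured to include new item images (the NEW_IMAGE or NEW_AND_OLD_IMAGES view type) and that the table key is named `Id`, as in the test data. The `query_table` dictionary and the `publish` callback are hypothetical stand-ins for the Query database and an Amazon SNS publish call; a real Lambda handler would receive `(event, context)` and use an AWS SDK client instead.

```python
def from_attribute_value(value: dict):
    """Convert a DynamoDB attribute value such as {"N": "40"} to a Python value.

    Only the types used in this sketch (N, S, BOOL) are handled.
    """
    type_tag, raw = next(iter(value.items()))
    if type_tag == "N":
        return float(raw) if "." in raw else int(raw)
    if type_tag in ("S", "BOOL"):
        return raw
    raise ValueError(f"unsupported attribute type: {type_tag}")


def handle_stream_event(event: dict, query_table: dict, publish=None) -> int:
    """Apply each stream record to the query table and optionally fan out."""
    processed = 0
    for record in event["Records"]:
        item_id = from_attribute_value(record["dynamodb"]["Keys"]["Id"])
        if record["eventName"] in ("INSERT", "MODIFY"):
            image = record["dynamodb"]["NewImage"]
            query_table[item_id] = {k: from_attribute_value(v) for k, v in image.items()}
        elif record["eventName"] == "REMOVE":
            query_table.pop(item_id, None)
        if publish is not None:
            publish(record["eventName"], item_id)  # hypothetical hook, for example an SNS publish
        processed += 1
    return processed


sample_event = {
    "Records": [
        {
            "eventName": "INSERT",
            "dynamodb": {
                "Keys": {"Id": {"N": "1501"}},
                "NewImage": {
                    "Id": {"N": "1501"},
                    "Firstname": {"S": "John"},
                    "VIP": {"BOOL": True},
                },
            },
        },
        {"eventName": "REMOVE", "dynamodb": {"Keys": {"Id": {"N": "151"}}}},
    ]
}

query_table = {151: {"Id": 151}}
published = []
count = handle_stream_event(
    sample_event, query_table, lambda name, key: published.append((name, key))
)
print(count, sorted(query_table))
```

Passing the store and the publish hook as parameters keeps the subscriber decoupled from any particular database or message broker, which also makes it easy to test locally.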

Event sourcing includes the following benefits:

Consistency for transactional data

A reliable audit trail and history of the actions, which can be used to monitor actions taken in the data

Allows distributed applications such as microservices to synchronize their data across the environment

Reliable publication of events whenever the state changes

Reconstructing or replaying of past states

Loosely coupled entities that exchange events for migration from a monolithic application to microservices

Reduction of conflicts caused by concurrent updates; event sourcing avoids the requirement to update objects directly in the data store

Flexibility and extensibility from decoupling the task and the event

External system updates

Management of multiple tasks in a single event

When using event sourcing, keep in mind the following caveats:

Because there is some delay in updating data between the source and subscriber databases, the only way to undo a change is to add a compensating event to the event store.

Implementing event sourcing has a learning curve because it is a different style of programming.
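Two of the ideas above, replaying past states and undoing a change with a compensating event, can be sketched with an append-only list. The `RewardEvent` type, the `replay` function, and the sample values are assumptions for illustration; they do not come from the attached example code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardEvent:
    customer_id: int
    points: int  # positive adds points, negative removes them


def replay(events, customer_id: int, upto=None) -> int:
    """Rebuild a customer's balance by replaying events, optionally only the first `upto`."""
    selected = events if upto is None else events[:upto]
    return sum(e.points for e in selected if e.customer_id == customer_id)


event_store = []  # append-only: events are never edited or deleted
event_store.append(RewardEvent(customer_id=1, points=30))
event_store.append(RewardEvent(customer_id=1, points=-10))

# Undo the redemption by appending a compensating event rather than deleting it.
event_store.append(RewardEvent(customer_id=1, points=10))

print(replay(event_store, 1))          # 30: current state after compensation
print(replay(event_store, 1, upto=2))  # 20: past state before compensation
```

The original redemption stays in the store, so the audit trail remains complete even after the change is reversed.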

Test data

Use the following test data to test the Lambda function after successful deployment.

CommandCreate Customer

{ "Id":1501, "Firstname":"John", "Lastname":"Done", "CompanyName":"AnyCompany", "Address": "USA", "VIP":true }

CommandUpdate Customer

{ "Id":1501, "Firstname":"John", "Lastname":"Doe", "CompanyName":"Example Corp.", "Address": "Seattle, USA", "VIP":true }

CommandDelete Customer

Enter the customer ID as request data. For example, if the customer ID is 151, enter 151 as request data.

151

QueryCustomerList

Leave the request data blank. When the function is invoked, it returns all customers.

CommandAddReward

This will add 40 points to the customer with ID 1 (Richard).

{ "Id":10101, "CustomerId":1, "Points":40 }

CommandRedeemReward

This will deduct 15 points from the customer with ID 1 (Richard).

{ "Id":10110, "CustomerId":1, "Points":15 }

QueryReward

Enter the ID of the customer. For example, enter 1 for Richard, 2 for Arnav, and 3 for Shirley.

2
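To sanity-check what QueryReward should report for customer ID 1 after you run the CommandAddReward and CommandRedeemReward payloads above, you can compute the expected change in points. The following sketch only parses the two payloads shown earlier; the helper name is hypothetical, and the starting balance depends on the seed data, which is not shown here.

```python
import json

# Request payloads copied from the test data above.
add_reward = json.loads('{ "Id":10101, "CustomerId":1, "Points":40 }')
redeem_reward = json.loads('{ "Id":10110, "CustomerId":1, "Points":15 }')


def net_point_change(added: dict, redeemed: dict) -> int:
    """Return the expected change in a customer's total after both commands."""
    if added["CustomerId"] != redeemed["CustomerId"]:
        raise ValueError("payloads target different customers")
    return added["Points"] - redeemed["Points"]


print(net_point_change(add_reward, redeem_reward))  # 25
```

If both commands succeed, the customer's total in the query table should be 25 points higher than it was before the two invocations.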

Source code directory

Use the following table as a guide to the directory structure of the Visual Studio solution.

CQRS On-Premises Code Sample solution directory

Customer CRUD model

CQRS On-Premises Code Sample\CRUD Model\AWS.APG.CQRSES.DAL project

CQRS version of the Customer CRUD model

Customer command:

CQRS On-Premises Code Sample\CQRS Model\Command Microservice\AWS.APG.CQRSES.Command project

Customer query:

CQRS On-Premises Code Sample\CQRS Model\Query Microservice\AWS.APG.CQRSES.Query project

Command and Query microservices

The Command microservice is under the solution folder CQRS On-Premises Code Sample\CQRS Model\Command Microservice:

The AWS.APG.CQRSES.CommandMicroservice ASP.NET Core API project acts as the entry point where consumers interact with the service.

The AWS.APG.CQRSES.Command .NET Core project is an object that hosts command-related objects and interfaces.

The query microservice is under the solution folder CQRS On-Premises Code Sample\CQRS Model\Query Microservice:

The AWS.APG.CQRSES.QueryMicroservice ASP.NET Core API project acts as the entry point where consumers interact with the service.

The AWS.APG.CQRSES.Query .NET Core project is an object that hosts query-related objects and interfaces.

CQRS AWS Serverless code solution directory

This code is the AWS version of the on-premises code using AWS serverless services.

In C# .NET Core, each Lambda function is represented by one .NET Core project. In this pattern's example code, there is a separate project for each interface in the command and query models.

CQRS using AWS services

The root solution directory for CQRS using AWS serverless services is the CQRS AWS Serverless\CQRS folder. The example includes two models: Customer and Reward.

The command Lambda functions for Customer and Reward are under CQRS\Command Microservice\Customer and CQRS\Command Microservice\Reward folders. They contain the following Lambda projects:

Customer command:

CommandCreateLambda, CommandDeleteLambda, and CommandUpdateLambda

Reward command:

CommandAddRewardLambda and CommandRedeemRewardLambda

The query Lambda functions for Customer and Reward are found under the CQRS\Query Microservice\Customer and CQRS\Query Microservice\Reward folders. They contain the QueryCustomerListLambda and QueryRewardLambda Lambda projects.

CQRS test project

The test project is under the CQRS\Tests folder. This project contains a test script to automate testing the CQRS Lambda functions.

Event sourcing using AWS services

The following Lambda event handlers are initiated by the Customer and Reward DynamoDB streams to process and synchronize the data in query tables.

The EventSourceCustomer Lambda function is mapped to the Customer table (cqrses-customer-cmd) DynamoDB stream.

The EventSourceReward Lambda function is mapped to the Reward table (cqrses-reward-cmd) DynamoDB stream.

Attachments

To access additional content that is associated with this document, unzip the following file: attachment.zip