Service consumers operating on premises

This section discusses connectivity options between SaaS workloads in the AWS Cloud and consumers' on-premises data centers. Many consumers with on-premises requirements, especially enterprises, see the cloud as an extension of their physical network, and they want their architecture to reflect that. For these consumers, that means private connectivity to the SaaS offering in the cloud, either through logical tunnels or through a private physical connection. Other consumers accept connectivity through the public internet, which is also discussed in this section.

This section discusses the following network access approaches:

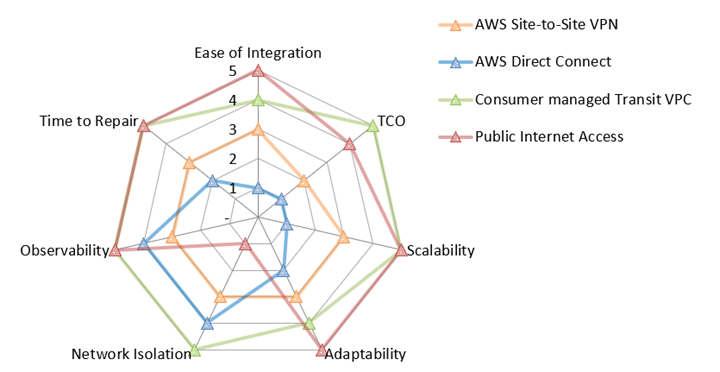

The following networking value map summarizes how each of these options scores on each evaluation metric. For more information about the evaluation metrics, see Evaluation metrics in this guide. In the map, a score of 5 is the best, for example the lowest TCO, the best network isolation, or the shortest time to repair. For more information about how to read this radar chart, see Networking value map in this guide.

Note

The provider-managed transit VPC option is excluded from the map because its scores depend heavily on which services are being operated.

The radar chart shows the following values.

| Evaluation metric | AWS Site-to-Site VPN | AWS Direct Connect | Consumer-managed transit VPC | Public internet access |
|---|---|---|---|---|
| Ease of integration | 3 | 1 | 4 | 5 |
| TCO | 2 | 1 | 5 | 4 |
| Scalability | 3 | 1 | 5 | 5 |
| Adaptability | 3 | 2 | 4 | 5 |
| Network isolation | 3 | 4 | 5 | 1 |
| Observability | 3 | 4 | 5 | 5 |
| Time to repair | 3 | 2 | 5 | 5 |
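One way to apply the value map to a specific consumer is to weight each metric by how much it matters to that consumer and then compare weighted totals. The following Python sketch illustrates this. The scores are copied from the table above. The `rank_options` function and the weighted-sum scheme are hypothetical illustrations and are not part of the evaluation methodology in this guide. A weighted sum also treats the 1 to 5 scores as a linear scale, which is a simplifying assumption.

```python
# Scores from the networking value map (5 = best). As in the map, the
# provider-managed transit VPC option is not included.
METRICS = (
    "Ease of integration", "TCO", "Scalability", "Adaptability",
    "Network isolation", "Observability", "Time to repair",
)

VALUE_MAP = {
    "AWS Site-to-Site VPN": (3, 2, 3, 3, 3, 3, 3),
    "AWS Direct Connect": (1, 1, 1, 2, 4, 4, 2),
    "Consumer-managed transit VPC": (4, 5, 5, 4, 5, 5, 5),
    "Public internet access": (5, 4, 5, 5, 1, 5, 5),
}


def rank_options(weights: dict) -> list:
    """Rank options by the weighted sum of their scores, highest first.

    Hypothetical helper: metrics that are missing from `weights` count as 0.
    """
    unknown = set(weights) - set(METRICS)
    if unknown:
        raise ValueError(f"unknown metrics: {sorted(unknown)}")
    ranked = [
        (option, sum(weights.get(m, 0.0) * s for m, s in zip(METRICS, scores)))
        for option, scores in VALUE_MAP.items()
    ]
    # sort() is stable, so options with equal totals keep their table order.
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


if __name__ == "__main__":
    equal_weights = {m: 1.0 for m in METRICS}
    for option, total in rank_options(equal_weights):
        print(f"{option}: {total:g}")
```

With equal weights, the totals are 33 (consumer-managed transit VPC), 30 (public internet access), 20 (Site-to-Site VPN), and 15 (Direct Connect). A consumer that cares only about network isolation would rank public internet access last.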

Connecting with AWS Site-to-Site VPN

AWS Site-to-Site VPN connections can terminate on either a virtual private gateway or a transit gateway. A virtual private gateway is the VPN endpoint on the AWS side of your Site-to-Site VPN connection that can be attached to a single VPC. A transit gateway is a transit hub that can be used to interconnect multiple VPCs and on-premises networks. It can also be used as a VPN endpoint for the AWS side of the Site-to-Site VPN connection. This section discusses both options.

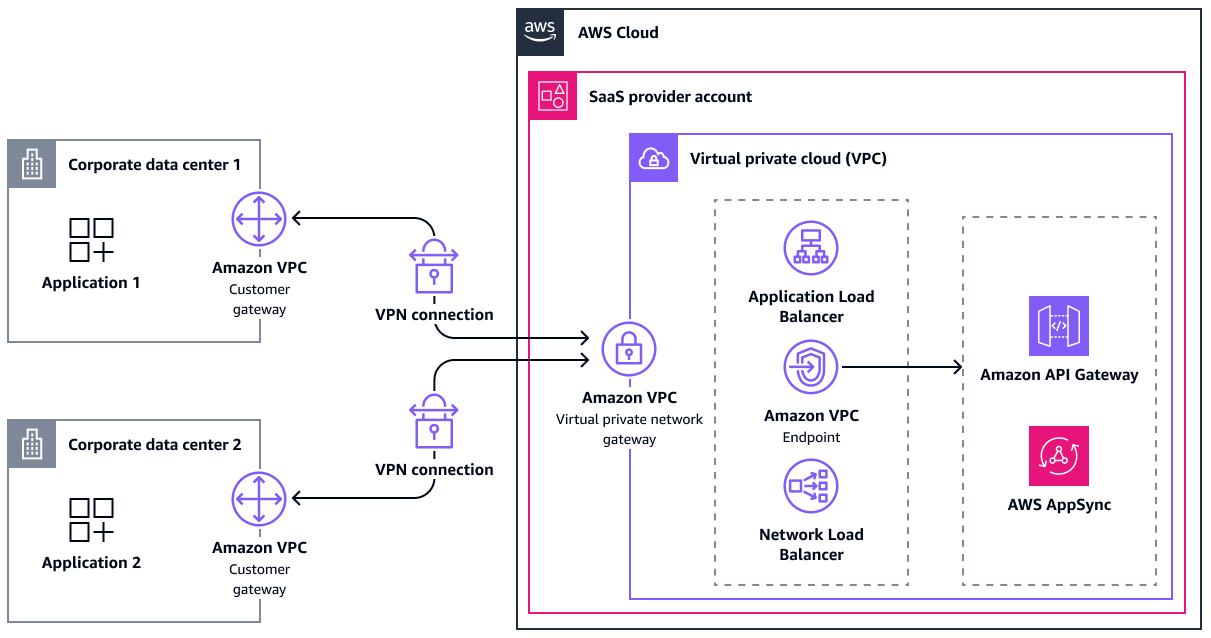

Connection through a virtual private gateway

After you create a virtual private gateway, you attach it to the VPC that contains your SaaS offering. Then, you enable route propagation to propagate the VPN routes to the VPC route table. Those routes can be either static or BGP-advertised dynamic routes.

For high availability, a Site-to-Site VPN connection has two VPN tunnels that terminate in different Availability Zones on the AWS side. If one tunnel becomes unavailable, the other can take over. A single tunnel supports a maximum bandwidth of 1.25 Gbps. Because virtual private gateways do not support equal-cost multi-path (ECMP) routing, only one tunnel can carry traffic at a time.

To increase fault tolerance, you can set up a second VPN connection to a second physical customer gateway. After the connection is established, the consumer can reach resources in the SaaS provider's VPC.
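Simple availability arithmetic shows why a second customer gateway helps. Both tunnels of one VPN connection depend on the same customer gateway, so tunnel redundancy alone cannot make the connection more available than that gateway. The following Python sketch assumes that components fail independently and uses hypothetical availability figures. Real failures are often correlated (for example, through a shared on-premises power feed or internet uplink), so treat the results as optimistic.

```python
def connection_availability(cgw: float, tunnel: float) -> float:
    """Availability of one VPN connection.

    The customer gateway must be up, and at least one of the connection's
    two tunnels must be up. Failures are assumed to be independent.
    """
    return cgw * (1 - (1 - tunnel) ** 2)


def overall_availability(connections: int, cgw: float, tunnel: float) -> float:
    """Availability when each connection has its own customer gateway and
    any single working connection is enough to carry traffic."""
    if connections < 1:
        raise ValueError("need at least one VPN connection")
    return 1 - (1 - connection_availability(cgw, tunnel)) ** connections


if __name__ == "__main__":
    # Hypothetical figures: 99% for the customer gateway and for each tunnel.
    for n in (1, 2):
        print(n, round(overall_availability(n, cgw=0.99, tunnel=0.99), 6))
```

With these hypothetical inputs, one connection is available about 98.99% of the time and two connections about 99.99% of the time. Even with perfectly reliable tunnels, a single connection would stay capped at the customer gateway's 99%.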

The following diagram shows this architecture.

The following are the benefits of this approach:

- Time to repair: Managed failover to the secondary VPN tunnel
- Observability: Integration for managed active monitoring by using Network Synthetic Monitor
- Ease of integration: Dynamic routing support through BGP
- Adaptability: Compatibility with most on-premises networking equipment
- Adaptability: IPv6 support
- TCO: AWS Site-to-Site VPN is a fully managed service, so it requires less operational effort
- TCO: No cost for virtual private gateways, although there are charges for the two public IPv4 addresses on each VPN connection
- Network isolation: Enables secure private communication over the internet

The following are the drawbacks of this approach:

- Ease of integration: The consumer must configure their customer gateway
- Scalability: Lack of ECMP support limits bandwidth to 1.25 Gbps per virtual private gateway
- Scalability: Limited scaling due to increased network complexity and operational overhead
- Adaptability: IPv6 support only for the inside IP addresses of the VPN tunnels
- Adaptability: No transitive routing
- TCO: Operational overhead for the SaaS provider to maintain, manage, and configure numerous VPN connections

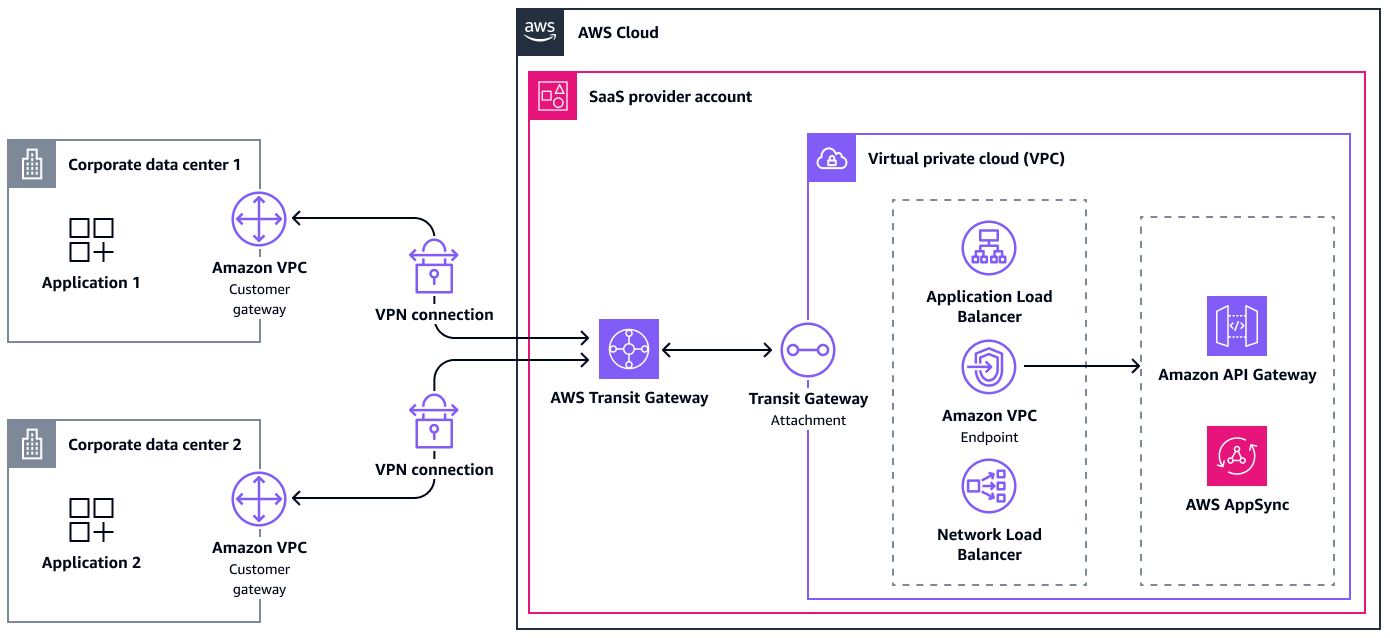

Connection through a transit gateway

Connections through a transit gateway are similar to connections through a virtual private gateway. However, there are a few differences to keep in mind.

First, routes for the VPN attachment can be propagated automatically to the transit gateway route table, but you must manually add routes to the route tables of the attached VPCs.
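This asymmetry matters because a VPC route table forwards traffic by using the most specific matching route (longest prefix match). The following Python sketch models a VPC route table as a dictionary and performs that lookup. The CIDR ranges and the transit gateway ID are hypothetical placeholders. The sketch shows that on-premises destinations stay unreachable from the VPC until a route that points to the transit gateway is added.

```python
import ipaddress
from typing import Optional


def lookup(route_table: dict, destination: str) -> Optional[str]:
    """Return the target of the most specific matching route, or None."""
    address = ipaddress.ip_address(destination)
    best_net, best_target = None, None
    for cidr, target in route_table.items():
        net = ipaddress.ip_network(cidr)
        # Keep the matching route with the longest prefix.
        if address in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_target = net, target
    return best_target


# Hypothetical VPC route table right after the VPC is attached to the
# transit gateway: only the local route exists.
vpc_routes = {"10.0.0.0/16": "local"}
print(lookup(vpc_routes, "192.168.10.5"))  # None: on-premises range is unreachable

# The route that you add manually, pointing the on-premises range at the
# transit gateway (placeholder ID).
vpc_routes["192.168.0.0/16"] = "tgw-0123456789abcdef0"
print(lookup(vpc_routes, "192.168.10.5"))  # tgw-0123456789abcdef0
print(lookup(vpc_routes, "10.0.3.7"))      # local
```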

Unlike a virtual private gateway, a transit gateway supports ECMP. If the customer gateway also supports ECMP, it can use both tunnels of a VPN connection to achieve a total maximum throughput of 2.5 Gbps. You can also establish multiple VPN connections between the same on-premises network and the transit gateway. With this approach, each additional connection increases the maximum bandwidth by up to 2.5 Gbps.
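The bandwidth figures in this section can be combined into a quick sizing estimate. They are 1.25 Gbps per tunnel, a single active tunnel on a virtual private gateway, and 2.5 Gbps per connection with ECMP on a transit gateway. The following Python sketch computes theoretical maxima only. The function names are illustrative and do not correspond to any AWS API.

```python
import math

TUNNEL_MAX_GBPS = 1.25  # maximum bandwidth of a single VPN tunnel
TUNNELS_PER_CONNECTION = 2


def max_throughput_gbps(connections: int, ecmp: bool) -> float:
    """Theoretical aggregate VPN bandwidth toward one AWS-side gateway."""
    if connections < 1:
        raise ValueError("need at least one VPN connection")
    if not ecmp:
        # Virtual private gateway: one tunnel carries traffic at a time,
        # no matter how many connections exist.
        return TUNNEL_MAX_GBPS
    # Transit gateway with ECMP: traffic is spread across every tunnel.
    return connections * TUNNELS_PER_CONNECTION * TUNNEL_MAX_GBPS


def connections_for(target_gbps: float) -> int:
    """ECMP VPN connections to a transit gateway needed to reach target_gbps."""
    per_connection = TUNNELS_PER_CONNECTION * TUNNEL_MAX_GBPS
    return max(1, math.ceil(target_gbps / per_connection))


if __name__ == "__main__":
    print(max_throughput_gbps(2, ecmp=False))  # virtual private gateway
    print(max_throughput_gbps(4, ecmp=True))   # transit gateway with ECMP
    print(connections_for(6))
```

For example, two connections to a virtual private gateway still top out at 1.25 Gbps, while four ECMP connections to a transit gateway allow up to 10 Gbps. ECMP across VPN connections on a transit gateway relies on BGP dynamic routing. It also distributes traffic per flow, so a single flow is still limited to one tunnel.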

The following diagram shows this architecture.

The following are the benefits of this approach:

- Time to repair: Managed failover to the secondary VPN tunnel
- Observability: Integration for managed active monitoring by using Network Synthetic Monitor
- Ease of integration: Dynamic routing support through BGP
- Scalability: ECMP support allows scaling VPN throughput to satisfy large bandwidth requirements
- Scalability: Large number of VPN connections supported by a single transit gateway (up to almost 5,000)
- Scalability: One place to manage and monitor all the VPN connections
- Adaptability: Compatibility with most on-premises networking equipment
- Adaptability: IPv6 support
- Adaptability: Inherits the flexibility of AWS Transit Gateway
- TCO: AWS Transit Gateway is a fully managed service, so it requires less operational effort
- TCO: No cost for virtual private gateways, although there are charges for the two public IPv4 addresses on each VPN connection
- Network isolation: Enables secure private communication over the internet

The following are the drawbacks of this approach:

- Ease of integration: The consumer must configure their customer gateway
- Scalability: Limited scaling due to increased network complexity and operational overhead
- Adaptability: IPv6 support only for the inside IP addresses of the VPN tunnels
- TCO: Operational overhead to maintain, manage, and configure numerous VPN connections for the SaaS provider
- TCO: Extra charges for use of AWS Transit Gateway
- TCO: Additional complexity managing the transit gateway route tables

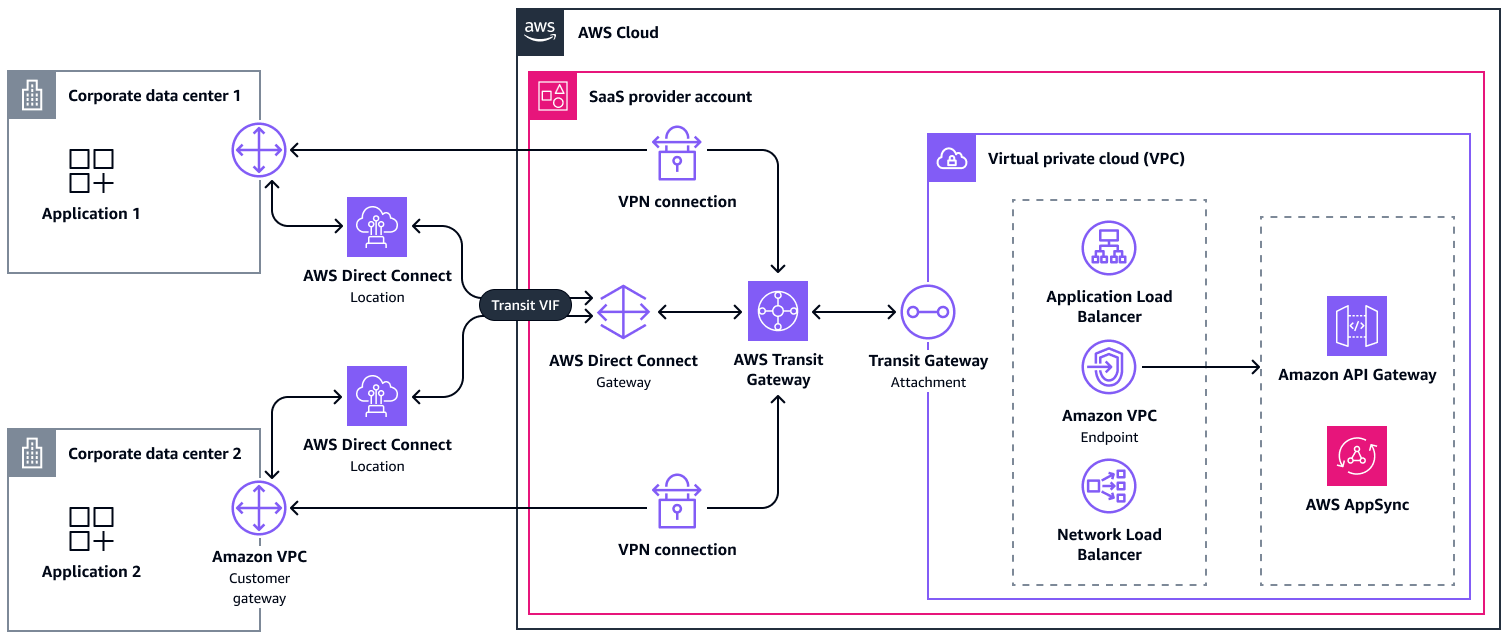

Connecting with AWS Direct Connect

AWS Direct Connect links your internal network to an AWS Direct Connect location over a standard Ethernet fiber-optic cable. Unlike the other architecture options, a dedicated connection cannot be established in a few minutes. Instead, the process can take up to several days if all requirements are met, and longer if they are not. Therefore, we suggest that you reach out to your AWS account team or AWS Support for help with this approach. Alternatively, you can choose a hosted connection, which is provided by an AWS Partner and shared with other customers. The architecture is the same for both connection types. You might choose AWS Direct Connect because it can reduce latency, increase bandwidth, or help you comply with regulatory requirements.

To use the AWS Direct Connect connection, consumers must create a public, private, or transit virtual interface. Several architecture options are available. The most flexible option for connecting multiple on-premises locations to the AWS Cloud is a transit virtual interface connected to an AWS Direct Connect gateway. An AWS Direct Connect gateway is a global, logical component that allows the service provider to connect up to six transit gateways to it. You can also connect up to 30 virtual interfaces to the gateway. For additional scale, you can create more AWS Direct Connect gateways. In the SaaS provider account, the transit gateways then attach to the VPCs, as described previously.
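The quotas in the previous paragraph give a quick lower bound on how many AWS Direct Connect gateways a design needs. The quotas are six transit gateways and 30 virtual interfaces per Direct Connect gateway. The following Python sketch is a hypothetical planning helper, not an AWS tool. It assumes that any transit gateway and any virtual interface can be placed on any Direct Connect gateway. Real routing requirements can force more gateways, and quotas can change, so confirm current values in the AWS Direct Connect documentation.

```python
import math

# Quotas as stated in this section; confirm current values before relying on them.
MAX_TGWS_PER_DX_GATEWAY = 6
MAX_VIFS_PER_DX_GATEWAY = 30


def min_dx_gateways(transit_gateways: int, virtual_interfaces: int) -> int:
    """Lower bound on the number of AWS Direct Connect gateways needed.

    Assumes any transit gateway and any virtual interface can be placed on
    any Direct Connect gateway.
    """
    if transit_gateways < 0 or virtual_interfaces < 0:
        raise ValueError("counts must be non-negative")
    return max(
        1,
        math.ceil(transit_gateways / MAX_TGWS_PER_DX_GATEWAY),
        math.ceil(virtual_interfaces / MAX_VIFS_PER_DX_GATEWAY),
    )


if __name__ == "__main__":
    print(min_dx_gateways(transit_gateways=8, virtual_interfaces=12))
```

For example, eight transit gateways already require at least two Direct Connect gateways, even if only a dozen virtual interfaces connect.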

Consumers can connect by using one to four AWS Direct Connect connections from a total of one or two AWS Direct Connect locations.

The following are the benefits of this approach:

- Observability: Integration for managed active monitoring by using Network Synthetic Monitor
- Scalability: Support for increased bandwidth throughput
- Adaptability: IPv6 support
- TCO: Potential to reduce data transfer costs
- TCO: Consistent network experience
- Network isolation: Private connectivity that can fulfill regulatory requirements

The following are the drawbacks of this approach:

- Ease of integration: Time and manual effort to set up
- Scalability: Limited scalability beyond tens of AWS Direct Connect connections because there are multiple quotas to track
- Adaptability: Configuration options depend on the available AWS Direct Connect locations
- TCO: Scheduled AWS Direct Connect maintenance can cause downtime that requires action

Connecting with a transit VPC architecture

Transit VPC is an architecture option that gives consumers flexibility in how they connect to AWS, and it allows SaaS providers to offer unified access to their service through AWS PrivateLink. The consumer connects from on premises to a transit VPC that contains only an entry point (such as a virtual private gateway) and an interface VPC endpoint, which is an AWS PrivateLink resource. The transit VPCs can be owned either by the SaaS provider or by the consumers. This section discusses both options.

You can create the transit VPC and subnets with CIDR ranges that are compatible with the on-premises data center. If they require private connectivity, consumers can connect to that VPC through AWS Direct Connect or AWS Site-to-Site VPN. You can also configure access to the transit account from the public internet by using an Application Load Balancer or Network Load Balancer that points to the VPC endpoint.
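Choosing a CIDR range for the transit VPC that is compatible with the on-premises data center comes down to finding a block that overlaps none of the ranges already in use on premises. The following Python sketch uses the standard `ipaddress` module to return the first non-overlapping subnet of a candidate pool. The pool, prefix length, and on-premises ranges are hypothetical inputs that the consumer or SaaS provider would supply.

```python
import ipaddress
from typing import Iterable, Optional


def first_free_subnet(pool: str, new_prefix: int, on_prem_ranges: Iterable[str]) -> Optional[str]:
    """Return the first subnet of `pool` with the given prefix length that
    overlaps no on-premises range, or None if every candidate overlaps."""
    used = [ipaddress.ip_network(r) for r in on_prem_ranges]
    for candidate in ipaddress.ip_network(pool).subnets(new_prefix=new_prefix):
        if not any(candidate.overlaps(net) for net in used):
            return str(candidate)
    return None


if __name__ == "__main__":
    # Hypothetical on-premises ranges and candidate pool.
    on_prem = ["10.0.0.0/8", "192.168.0.0/17"]
    print(first_free_subnet("192.168.0.0/16", 24, on_prem))
```

Here the first /24 that avoids 192.168.0.0/17 is 192.168.128.0/24. Because the interface VPC endpoint decouples the transit VPC from the SaaS provider's VPC, only the consumer's on-premises ranges need to be checked.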

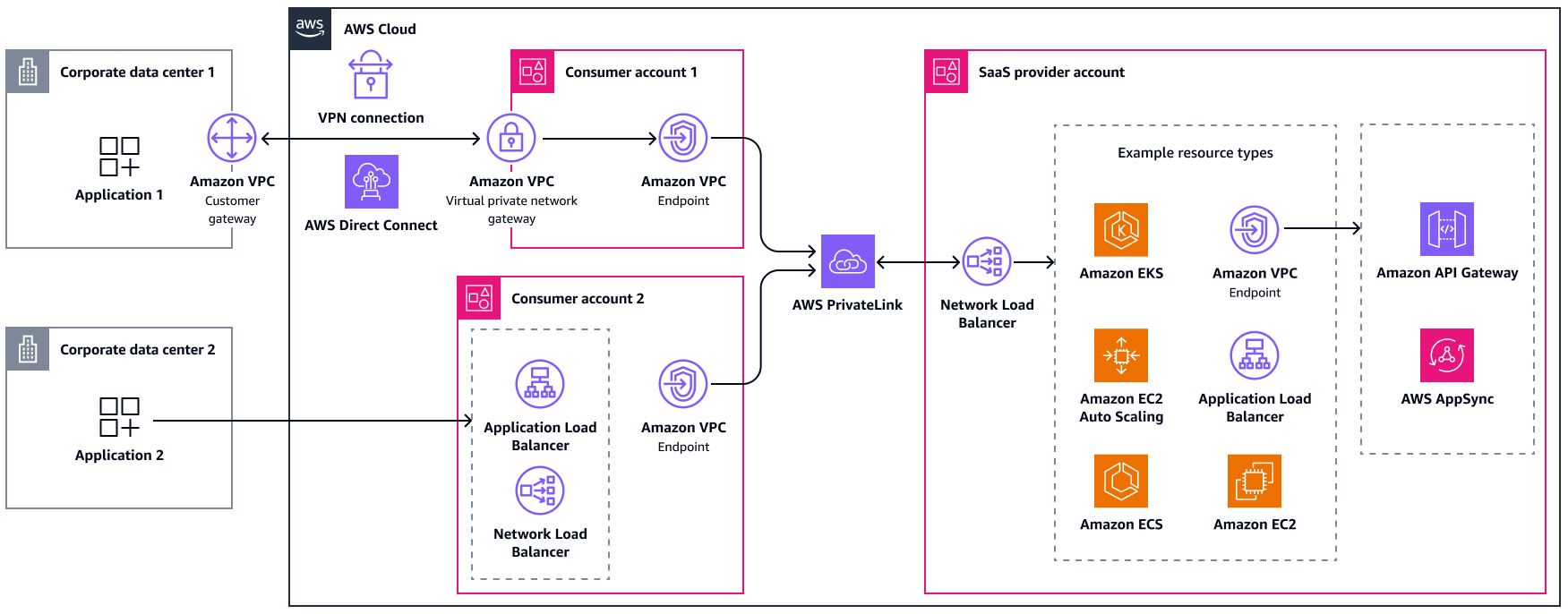

Consumer-managed transit VPC

In this approach, the SaaS provider leaves management of the transit VPCs to the consumers. From a technical point of view, the SaaS provider's architecture is the same as when connecting to consumers in the AWS Cloud through AWS PrivateLink. From a sales and product perspective, however, it requires additional effort because some consumers don't have AWS accounts yet and might be hesitant to open and operate one. The SaaS provider should give consumers guidance about how to create AWS accounts and connect their on-premises data centers. The following diagram shows a mix of public and private access, where the consumers own the transit VPCs.

The following are the benefits of this approach:

- Time to repair: Operational overhead is largely offloaded to SaaS consumers
- Adaptability: SaaS consumers can choose from different access options
- Adaptability: No CIDR range conflicts, even when using Site-to-Site VPN or AWS Direct Connect
- All metrics: Service provider inherits AWS PrivateLink benefits

The following are the drawbacks of this approach:

- Ease of integration: SaaS consumers require at least one AWS account
- TCO: A transit VPC is an architecture, not a fully managed service, so it requires more operational effort

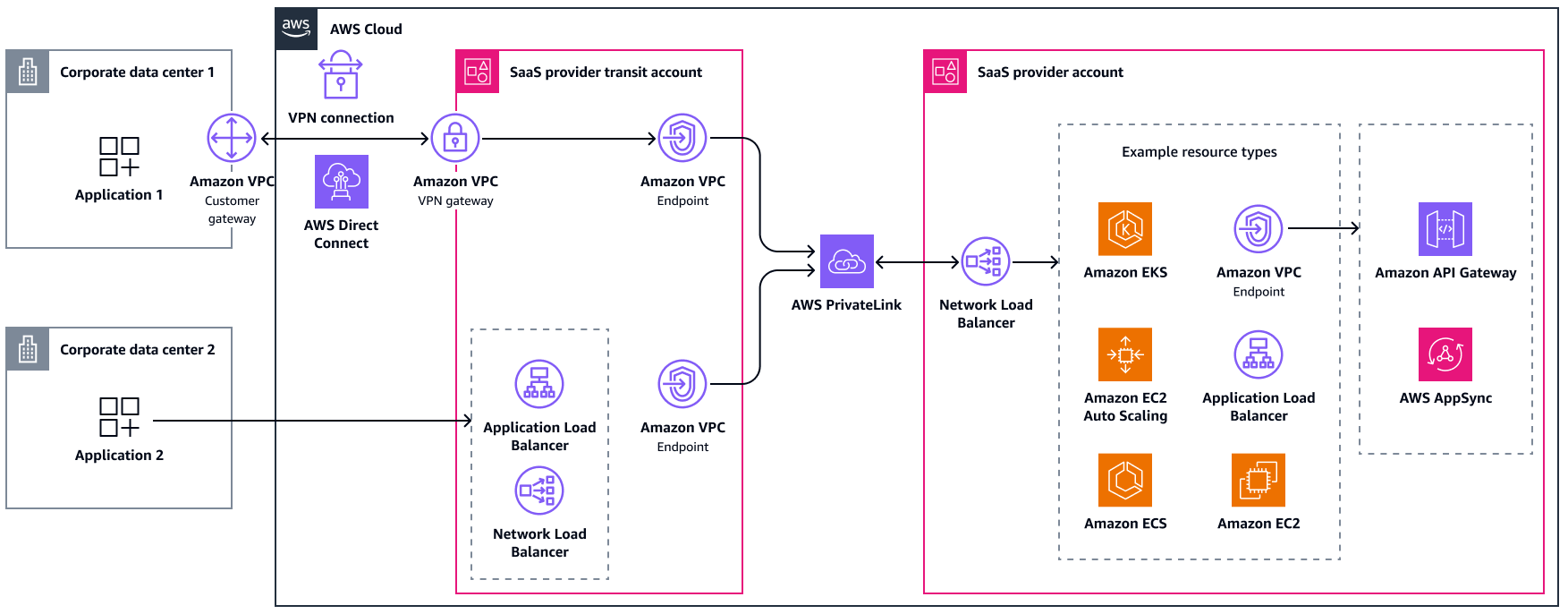

Provider-managed transit VPC

This approach uses the same technologies, but the account boundaries and responsibilities change. Here, the SaaS provider owns the transit VPCs, preferably in an account that is separate from the SaaS offering. This decoupling reduces costs, reduces risks, and allows the transit account to scale independently. For environments that require a high degree of isolation, you can create additional separation between tenants by using a separate subnet or a separate transit VPC for each consumer. The consumers can then choose how to connect to the transit VPC. This approach provides more options to expand the total addressable market, but it has a higher TCO for the SaaS provider because of the additional architectural components to operate and monitor.

The following are the benefits of this approach:

- Adaptability: SaaS consumers can choose from different access options
- Adaptability: SaaS consumers don't need to have an AWS account
- Adaptability: No CIDR range conflicts, even when using Site-to-Site VPN or AWS Direct Connect

The following are the drawbacks of this approach:

- TCO: A transit VPC is an architecture, not a fully managed service, so it requires more operational effort
- TCO: SaaS provider needs to operate and monitor additional architectural components

Connecting through the public internet

Public internet access is also a valid option for providing access to a SaaS offering, although it does not offer private connectivity in the traditional sense. Some consumers might still prefer public access because it requires no additional networking infrastructure between them and the SaaS provider. It reduces complexity, cost, and integration time in exchange for an increased attack surface. Strong authentication and authorization mechanisms can help mitigate the increased threat level, and you should always encrypt traffic. We still recommend adding another layer of security in this scenario, such as AWS WAF.

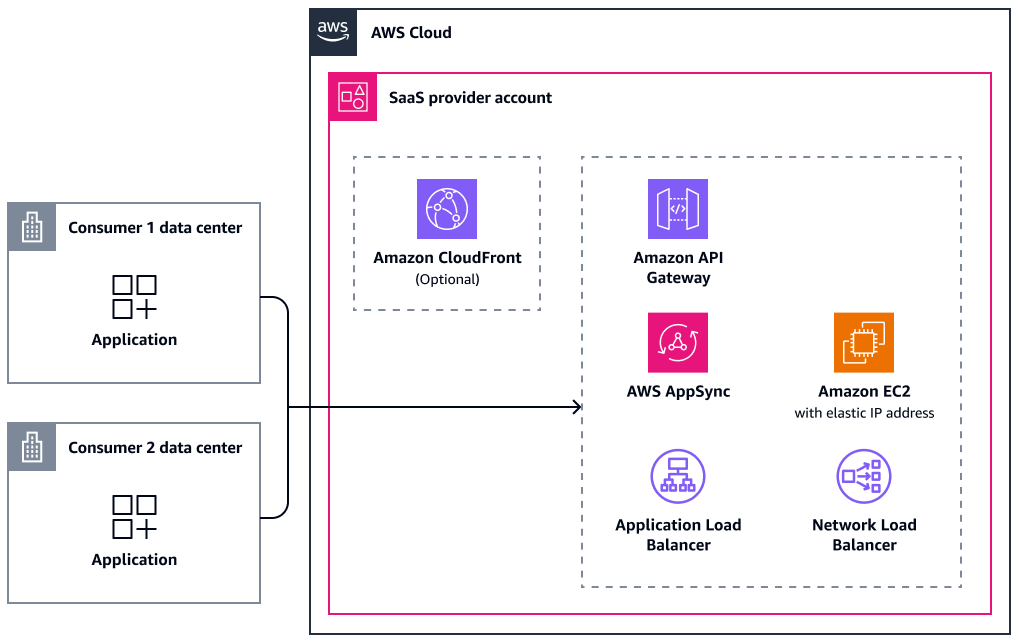

The architecture in this scenario is straightforward. The consumer connects to a public host (the SaaS provider) through the internet. The application can be hosted directly on a public Amazon Elastic Compute Cloud (Amazon EC2) instance with an Elastic IP address, but the preferred option is to host it behind an Application Load Balancer or a similar service. To improve performance and cache static assets, you can use a content delivery network, such as Amazon CloudFront. To serve an application with minimal latency through two global static anycast IP addresses, you can place AWS Global Accelerator in front of an Amazon EC2 instance, Network Load Balancer, or Application Load Balancer. In addition, CloudFront, Application Load Balancers, AWS AppSync, and Amazon API Gateway all integrate with AWS WAF. The following diagram provides an overview of the public internet access connectivity options.

The following table describes supported protocols and integrations for this scenario.

| Service or resource | IPv6 | AWS WAF integration | Can be a Global Accelerator endpoint |
|---|---|---|---|
| Amazon CloudFront | Supported | Supported | Not supported |
| Amazon API Gateway | Supported | Supported | Not supported |
| AWS AppSync | Partially supported | Supported | Not supported |
| Amazon EC2 with an Elastic IP address | Supported | Not supported | Supported |
| Application Load Balancer | Supported | Supported | Supported |
| Network Load Balancer | Supported | Not supported | Supported |
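The table above can also be read as a filter over consumer requirements. The following Python sketch encodes its rows and returns the services that meet a given combination of requirements. The `FrontDoor` type and the `front_door_candidates` function are illustrative names. A "Partially supported" IPv6 entry is treated as not meeting a full-IPv6 requirement, which is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrontDoor:
    name: str
    ipv6: str                         # "Supported" or "Partially supported"
    waf: bool                         # AWS WAF integration
    global_accelerator_endpoint: bool


# Rows copied from the table of supported protocols and integrations.
FRONT_DOORS = [
    FrontDoor("Amazon CloudFront", "Supported", True, False),
    FrontDoor("Amazon API Gateway", "Supported", True, False),
    FrontDoor("AWS AppSync", "Partially supported", True, False),
    FrontDoor("Amazon EC2 with an Elastic IP address", "Supported", False, True),
    FrontDoor("Application Load Balancer", "Supported", True, True),
    FrontDoor("Network Load Balancer", "Supported", False, True),
]


def front_door_candidates(need_full_ipv6=False, need_waf=False, need_global_accelerator=False):
    """Return the names of services that satisfy every requested capability."""
    return [
        fd.name
        for fd in FRONT_DOORS
        if (not need_full_ipv6 or fd.ipv6 == "Supported")
        and (not need_waf or fd.waf)
        and (not need_global_accelerator or fd.global_accelerator_endpoint)
    ]


if __name__ == "__main__":
    print(front_door_candidates(need_waf=True, need_global_accelerator=True))
```

For example, requiring both AWS WAF integration and a Global Accelerator endpoint leaves only the Application Load Balancer.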

The following are the benefits of this approach:

- Ease of integration: Simplicity and accessibility
- Scalability: Virtually unlimited scale
- Adaptability: No CIDR range conflicts possible
- Adaptability: CloudFront support

The following are the drawbacks of this approach:

- Network isolation: No private connectivity
- Network isolation: Strong security measures required

Other benefits and drawbacks apply, depending on the services that you choose.