Security OU - Log Archive account


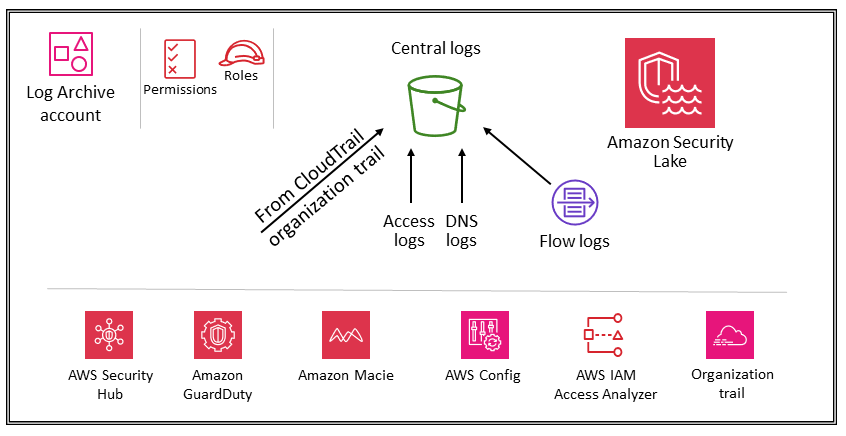

The following diagram illustrates the AWS security services that are configured in the Log Archive account.

The Log Archive account is dedicated to ingesting and archiving all security-related logs and backups. With centralized logs in place, you can monitor, audit, and alert on Amazon S3 object access, unauthorized activity by identities, IAM policy changes, and other critical activities performed on sensitive resources. The security objectives are straightforward: the log store should be immutable, accessed only by controlled, automated, and monitored mechanisms, and built for durability (for example, by using the appropriate replication and archival processes). Controls can be implemented in depth to protect the integrity and availability of the logs and the log management process. In addition to preventive controls, such as assigning least-privilege roles for access and encrypting logs with a controlled AWS KMS key, use detective controls such as AWS Config to monitor (and alert and remediate) this collection of permissions for unexpected changes.

Design consideration

- Operational log data used by your infrastructure, operations, and workload teams often overlaps with the log data used by security, audit, and compliance teams. We recommend that you consolidate your operational log data into the Log Archive account. Based on your specific security and governance requirements, you might need to filter operational log data saved to this account. You might also need to specify who has access to the operational log data in the Log Archive account.

Types of logs

The primary logs shown in the AWS SRA include CloudTrail (organization trail), Amazon VPC flow logs, access logs from Amazon CloudFront and AWS WAF, and DNS logs from Amazon Route 53. These logs provide an audit of actions taken (or attempted) by a user, role, AWS service, or network entity (identified, for example, by an IP address). Other log types (for example, application logs or database logs) can be captured and archived as well. For more information about log sources and logging best practices, see the security documentation for each service.

Amazon S3 as central log store

Many AWS services log information in Amazon S3—either by default or exclusively. AWS CloudTrail, Amazon VPC Flow Logs, AWS Config, and Elastic Load Balancing are some examples of services that log information in Amazon S3. This means that log integrity is achieved through S3 object integrity; log confidentiality is achieved through S3 object access controls; and log availability is achieved through S3 Object Lock, S3 object versions, and S3 Lifecycle rules. By logging information in a dedicated and centralized S3 bucket that resides in a dedicated account, you can manage these logs in just a few buckets and enforce strict security controls, access, and separation of duties.
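As a minimal sketch of the availability controls named above, the following Python builds the request payloads for a default S3 Object Lock retention rule and an S3 Lifecycle rule. The helper names, retention periods, and storage class are illustrative assumptions, not values prescribed by the AWS SRA. The dictionaries follow the shapes accepted by the boto3 `put_object_lock_configuration` and `put_bucket_lifecycle_configuration` calls, but nothing here calls AWS.

```python
import json


def object_lock_configuration(retention_days: int, mode: str = "COMPLIANCE") -> dict:
    """Hypothetical helper: default retention applied to every new object version."""
    if mode not in ("GOVERNANCE", "COMPLIANCE"):
        raise ValueError("mode must be GOVERNANCE or COMPLIANCE")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": retention_days}},
    }


def lifecycle_configuration(archive_after_days: int, expire_after_days: int) -> dict:
    """Hypothetical helper: archive log objects, then expire them after retention."""
    if expire_after_days <= archive_after_days:
        raise ValueError("objects must be archived before they expire")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-logs",  # assumed rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: rule applies to all objects
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


if __name__ == "__main__":
    # Example values only; choose periods that match your retention requirements.
    print(json.dumps(object_lock_configuration(365), indent=2))
    print(json.dumps(lifecycle_configuration(90, 2555), indent=2))
```

Keeping the payloads as plain data makes them easy to review and to deploy through a pipeline rather than by hand.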

In the AWS SRA, the primary logs stored in Amazon S3 come from CloudTrail, so this section describes how to protect those objects. This guidance also applies to any other S3 objects created either by your own applications or by other AWS services. Apply these patterns whenever you have data in Amazon S3 that needs high integrity, strong access control, and automated retention or destruction.

All new objects (including CloudTrail logs) that are uploaded to S3 buckets are encrypted by default by using server-side encryption with Amazon S3 managed keys (SSE-S3). This helps protect the data at rest, but access to the objects is then governed exclusively by IAM policies. To provide an additional managed security layer, you can use server-side encryption with AWS KMS keys that you manage (SSE-KMS) on all security S3 buckets. This adds a second level of access control: to read log files, a user must have both Amazon S3 read permissions for the S3 object and an IAM policy or role that grants decrypt permissions allowed by the associated key policy.
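One way to express SSE-KMS on a security bucket is sketched below in Python. It builds a default-encryption configuration and a bucket policy statement that denies uploads that don't request SSE-KMS. The helper names, bucket name, and key ARN are hypothetical placeholders. The dictionary shapes follow the boto3 `put_bucket_encryption` request and the standard S3 bucket policy grammar, and the ARN format assumes the standard `aws` partition.

```python
import json


def default_kms_encryption(kms_key_arn: str) -> dict:
    """Hypothetical helper: make SSE-KMS the bucket's default encryption."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                "BucketKeyEnabled": True,  # reduces the number of KMS requests
            }
        ]
    }


def deny_non_kms_uploads_policy(bucket_name: str) -> dict:
    """Hypothetical helper: deny PutObject requests that don't ask for SSE-KMS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUploadsWithoutSSEKMS",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                "Condition": {
                    "StringNotEquals": {
                        "s3:x-amz-server-side-encryption": "aws:kms"
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder identifiers for illustration only.
    key_arn = "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID"
    print(json.dumps(default_kms_encryption(key_arn), indent=2))
    print(json.dumps(deny_non_kms_uploads_policy("example-log-archive-bucket"), indent=2))
```

The key policy on the KMS key remains the second gate: a principal that can read the object but isn't allowed to decrypt with the key still can't read the log content.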

Two options help you protect or verify the integrity of CloudTrail log objects that are stored in Amazon S3. The first is CloudTrail log file integrity validation, which determines whether a log file was modified or deleted after CloudTrail delivered it. The second is S3 Object Lock, which prevents objects from being deleted or overwritten for a defined retention period.
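The idea behind hash-based integrity validation can be illustrated with a short Python sketch: record a SHA-256 hash when a log file is delivered, then recompute and compare it later. This is a simplified illustration, not CloudTrail's actual validation procedure, which also verifies signed digest files; in practice you would typically run the `aws cloudtrail validate-logs` CLI command. The function names and sample data are assumptions.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 hash of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def log_matches_recorded_hash(log_bytes: bytes, recorded_hash_hex: str) -> bool:
    """Hypothetical helper: True if the content still matches the recorded hash."""
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(sha256_hex(log_bytes), recorded_hash_hex.lower())


if __name__ == "__main__":
    delivered = b'{"Records": []}'          # sample log content
    recorded = sha256_hex(delivered)        # hash recorded at delivery time
    tampered = b'{"Records": [{"x": 1}]}'   # content modified after delivery

    print(log_matches_recorded_hash(delivered, recorded))  # unchanged file
    print(log_matches_recorded_hash(tampered, recorded))   # modified file
```

A mismatch shows that the content changed after the hash was recorded. Object Lock addresses a different risk by preventing the change or deletion in the first place.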

In addition to protecting the S3 bucket itself, you can adhere to the principle of least privilege for the logging services (for example, CloudTrail) and the Log Archive account. For example, users with permissions granted by the AWS managed IAM policy AWSCloudTrail_FullAccess can disable or reconfigure the most sensitive and important auditing functions in their AWS accounts. Limit the application of this IAM policy to as few individuals as possible.
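Beyond limiting who receives that managed policy, one complementary guardrail is a service control policy (SCP) that denies the most disruptive CloudTrail actions to everyone except a designated role. The Python sketch below only builds such a policy document. The helper name and role ARN pattern are hypothetical, and the set of denied actions is an illustrative choice rather than an AWS SRA requirement.

```python
import json


def protect_cloudtrail_scp(exempt_role_arn_pattern: str) -> dict:
    """Hypothetical helper: SCP that blocks trail tampering for non-exempt principals."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyCloudTrailTampering",
                "Effect": "Deny",
                "Action": [
                    "cloudtrail:StopLogging",
                    "cloudtrail:DeleteTrail",
                    "cloudtrail:UpdateTrail",
                    "cloudtrail:PutEventSelectors",
                ],
                "Resource": "*",
                # Principals whose ARN matches the pattern are not denied.
                "Condition": {
                    "ArnNotLike": {"aws:PrincipalArn": exempt_role_arn_pattern}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder role name for illustration only.
    pattern = "arn:aws:iam::*:role/ExampleLoggingAdminRole"
    print(json.dumps(protect_cloudtrail_scp(pattern), indent=2))
```

SCPs don't apply to the organization's management account, so access there still needs to be restricted through IAM.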

Use detective controls, such as those delivered by AWS Config and AWS IAM Access Analyzer, to monitor (and alert and remediate) this broader collection of preventive controls for unexpected changes.

For a deeper discussion of security best practices for S3 buckets, see the Amazon S3 documentation, online tech talks

Implementation example

The AWS SRA code library

Amazon Security Lake

AWS SRA recommends that you use the Log Archive account as the delegated administrator account for Amazon Security Lake. When you do this, Security Lake collects supported logs in dedicated S3 buckets in the same account as other SRA-recommended security logs.

To protect the availability of the logs and the log management process, the S3 buckets for Security Lake should be accessed only by the Security Lake service or by IAM roles that are managed by Security Lake for sources or subscribers. In addition to using preventive controls, such as assigning least-privilege roles for access and encrypting logs with a controlled AWS Key Management Service (AWS KMS) key, use detective controls such as AWS Config to monitor (and alert and remediate) this collection of permissions for unexpected changes.

The Security Lake administrator can enable log collection across your AWS organization. These logs are stored in regional S3 buckets in the Log Archive account. Additionally, to centralize logs and facilitate easier storage and analysis, the Security Lake administrator can choose one or more rollup Regions where logs from all the regional S3 buckets are consolidated and stored. Logs from supported AWS services are automatically converted into a standardized open-source schema called Open Cybersecurity Schema Framework (OCSF) and saved in Apache Parquet format in Security Lake S3 buckets. With OCSF support, Security Lake efficiently normalizes and consolidates security data from AWS and other enterprise security sources to create a unified and reliable repository of security-related information.

Security Lake can collect logs that are associated with AWS CloudTrail management events and CloudTrail data events for Amazon S3 and AWS Lambda. To collect CloudTrail management events in Security Lake, you must have at least one CloudTrail multi-Region organization trail that collects read and write CloudTrail management events. Logging must be enabled for the trail. A multi-Region trail delivers log files from multiple Regions to a single S3 bucket for a single AWS account. If the Regions are in different countries, consider data export requirements to determine whether multi-Region trails can be enabled.
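The trail prerequisites described above can be checked programmatically. The following Python sketch evaluates dictionaries shaped like the responses of the CloudTrail `DescribeTrails`, `GetTrailStatus`, and `GetEventSelectors` API operations. The function name is hypothetical, the sample data is invented, and the check covers only basic event selectors; a trail that uses advanced event selectors would need separate handling.

```python
from typing import List


def security_lake_trail_gaps(trail: dict, status: dict, selectors: dict) -> List[str]:
    """Hypothetical helper: list the prerequisites that a trail does not meet."""
    gaps = []
    if not trail.get("IsMultiRegionTrail"):
        gaps.append("trail is not multi-Region")
    if not trail.get("IsOrganizationTrail"):
        gaps.append("trail is not an organization trail")
    if not status.get("IsLogging"):
        gaps.append("logging is not enabled for the trail")

    # Look for a basic selector that captures read AND write management events.
    basic = selectors.get("EventSelectors") or []
    captures_all = any(
        sel.get("IncludeManagementEvents") is True
        and sel.get("ReadWriteType") == "All"
        for sel in basic
    )
    if not captures_all:
        gaps.append("no basic selector for read and write management events")
    return gaps


if __name__ == "__main__":
    # Invented sample data in the shape of the API responses.
    trail = {"Name": "example-org-trail", "IsMultiRegionTrail": True,
             "IsOrganizationTrail": True}
    status = {"IsLogging": True}
    selectors = {"EventSelectors": [
        {"ReadWriteType": "All", "IncludeManagementEvents": True}
    ]}
    print(security_lake_trail_gaps(trail, status, selectors))
```

An empty list means that the sampled trail satisfies all four conditions in this simplified check.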

AWS Security Hub CSPM is a supported native data source in Security Lake, and you should add Security Hub CSPM findings to Security Lake. Security Hub CSPM generates findings from many different AWS services and third-party integrations. These findings help you get an overview of your compliance posture and whether you're following security recommendations for AWS and AWS Partner solutions.

To gain visibility and actionable insights from logs and events, you can query the data by using tools such as Amazon Athena, Amazon OpenSearch Service, Amazon QuickSight, and third-party solutions. Users who require access to the Security Lake log data shouldn’t access the Log Archive account directly. Instead, they should access the data from the Security Tooling account, or from other AWS accounts or on-premises locations that provide analytics tools such as OpenSearch Service, QuickSight, or third-party tools such as security information and event management (SIEM) tools. To provide access to the data, the administrator should configure Security Lake subscribers in the Log Archive account and configure the account that needs access to the data as a query access subscriber. For more information, see Amazon Security Lake in the Security OU – Security Tooling account section of this guide.

Security Lake provides an AWS managed policy to help you manage administrator access to the service. For more information, see the Security Lake User Guide. As a best practice, we recommend that you restrict the configuration of Security Lake to development pipelines and prevent configuration changes through the AWS Management Console or the AWS Command Line Interface (AWS CLI). Additionally, you should set up strict IAM policies and service control policies (SCPs) to provide only the permissions necessary to manage Security Lake. You can configure notifications to detect any direct access to these S3 buckets.
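A possible shape for such an SCP is sketched below in Python: it denies Security Lake configuration changes unless the caller is the pipeline's deployment role. The helper name and role ARN pattern are hypothetical, and the wildcard action patterns are an assumption about which operations count as configuration changes, so review them against the Security Lake action list before use.

```python
import json


def restrict_security_lake_changes_scp(pipeline_role_arn_pattern: str) -> dict:
    """Hypothetical helper: allow Security Lake changes only from the pipeline role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenySecurityLakeChangesOutsidePipeline",
                "Effect": "Deny",
                # Assumed set of mutating actions, expressed as wildcards.
                "Action": [
                    "securitylake:Create*",
                    "securitylake:Update*",
                    "securitylake:Delete*",
                ],
                "Resource": "*",
                "Condition": {
                    "ArnNotLike": {"aws:PrincipalArn": pipeline_role_arn_pattern}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder role name for illustration only.
    pattern = "arn:aws:iam::*:role/ExamplePipelineDeployRole"
    print(json.dumps(restrict_security_lake_changes_scp(pattern), indent=2))
```

Because the statement is a deny with an exception, console and AWS CLI users outside the pipeline role are blocked from changes even if their IAM policies would otherwise allow them.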