REL09-BP04 Perform periodic recovery of the data to verify backup integrity and processes

Validate that your backup process implementation meets your recovery time objectives (RTO) and recovery point objectives (RPO) by performing a recovery test.

Using AWS, you can stand up a testing environment and restore your backups to assess RTO and RPO capabilities, and run tests on data content and integrity.

Additionally, Amazon RDS and Amazon DynamoDB allow point-in-time recovery (PITR). Using continuous backup, you can restore your dataset to the state it was in at a specified date and time.
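As an illustration of point-in-time recovery, the following is a minimal sketch in Python. It assumes boto3-style clients created elsewhere (for example with `boto3.client("dynamodb")` and `boto3.client("rds")`). The helper names, table names, and instance identifiers are hypothetical. Both operations restore into a new resource, which suits a recovery test because the original data source is left untouched.

```python
from datetime import datetime


def _require_aware(restore_time: datetime) -> None:
    """Reject naive datetimes so the restore point is unambiguous."""
    if restore_time.tzinfo is None or restore_time.utcoffset() is None:
        raise ValueError("restore_time must be timezone-aware")


def restore_dynamodb_table_to_time(client, source_table, target_table, restore_time):
    """Restore a DynamoDB table into a NEW table as of restore_time.

    `client` is assumed to be a boto3 DynamoDB client; continuous backups
    (PITR) must already be enabled on the source table.
    """
    _require_aware(restore_time)
    return client.restore_table_to_point_in_time(
        SourceTableName=source_table,
        TargetTableName=target_table,
        RestoreDateTime=restore_time,
    )


def restore_rds_instance_to_time(client, source_id, target_id, restore_time):
    """Restore an RDS DB instance into a NEW instance as of restore_time.

    `client` is assumed to be a boto3 RDS client; automated backups must be
    enabled on the source instance.
    """
    _require_aware(restore_time)
    return client.restore_db_instance_to_point_in_time(
        SourceDBInstanceIdentifier=source_id,
        TargetDBInstanceIdentifier=target_id,
        RestoreTime=restore_time,
    )


# Hypothetical usage (requires boto3 and AWS credentials):
#   import boto3
#   from datetime import timezone
#   point = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
#   restore_dynamodb_table_to_time(
#       boto3.client("dynamodb"), "orders", "orders-restore-test", point)
```

Passing the client in as a parameter keeps the helpers easy to exercise with a stub during development, before running them against a real account.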

Desired Outcome: Data from backups is periodically recovered using well-defined mechanisms to ensure that recovery is possible within the established recovery time objective (RTO) for the workload. Verify that restoration from a backup results in a resource that contains the original data without any of it being corrupted or inaccessible, and with data loss within the recovery point objective (RPO).
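To make the desired outcome concrete, the sketch below shows one way a recovery test could be scored against the workload's objectives. The `RecoveryTestResult` type and its field names are hypothetical. It assumes that recovery time is measured from the start of the restore to the end of validation, and that data loss is the gap between the (simulated) failure time and the newest record found in the restored data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class RecoveryTestResult:
    restore_started: datetime        # when the restore was initiated
    validation_finished: datetime    # when validation of the restored data completed
    incident_time: datetime          # simulated time of the failure
    last_recovered_record: datetime  # timestamp of the newest record in the restored data


def recovery_time(result: RecoveryTestResult) -> timedelta:
    """Elapsed time from starting the restore to finishing validation."""
    return result.validation_finished - result.restore_started


def data_loss_window(result: RecoveryTestResult) -> timedelta:
    """Span of data written before the incident that the restore did not recover."""
    return max(result.incident_time - result.last_recovered_record, timedelta(0))


def meets_objectives(result: RecoveryTestResult, rto: timedelta, rpo: timedelta) -> dict:
    """Compare the measured values against the RTO and RPO."""
    return {
        "rto_met": recovery_time(result) <= rto,
        "rpo_met": data_loss_window(result) <= rpo,
    }
```

For example, a test whose restore and validation take 45 minutes and whose newest recovered record is 5 minutes older than the failure time would meet an RTO of 1 hour and an RPO of 15 minutes.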

Common anti-patterns:

- Restoring a backup, but not querying or retrieving any data to ensure that the restoration is usable.

- Assuming that a backup exists.

- Assuming that the backup of a system is fully operational and that data can be recovered from it.

- Assuming that the time to restore or recover data from a backup falls within the RTO for the workload.

- Assuming that the data contained on the backup falls within the RPO for the workload.

- Restoring ad hoc, without using a runbook, or outside of an established automated procedure.

Benefits of establishing this best practice: Testing the recovery of backups verifies that data can be restored when needed without being missing or corrupted, that restoration and recovery are possible within the RTO for the workload, and that any data loss falls within the RPO for the workload.

Level of risk exposed if this best practice is not established: Medium

Implementation guidance

Testing backup and restore capability increases confidence in the ability to perform these actions during an outage. Periodically restore backups to a new location and run tests to verify the integrity of the data. Common tests check that all the data is available, uncorrupted, and accessible, and that any data loss falls within the RPO for the workload. Such tests can also help ascertain whether recovery mechanisms are fast enough to accommodate the workload's RTO.
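As one possible form of such a test, the sketch below validates a restored file against a checksum and size recorded when the backup was created. The function names are hypothetical, and the choice of SHA-256 is an assumption; use whatever digest your backup process records.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def validate_restored_file(path, expected_sha256, expected_size):
    """Return a list of validation failures; an empty list means the file passed.

    expected_sha256 and expected_size are assumed to have been recorded
    from the data source at the time the backup was created.
    """
    restored = Path(path)
    if not restored.is_file():
        return ["restored file is missing or not accessible"]

    failures = []
    actual_size = restored.stat().st_size
    if actual_size != expected_size:
        failures.append(f"size mismatch: expected {expected_size}, got {actual_size}")
    if sha256_of_file(restored) != expected_sha256.lower():
        failures.append("checksum mismatch: restored data differs from the source")
    return failures
```

Returning a list of failures rather than a single boolean makes it straightforward to include the specific reasons in a report or notification.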

-

Identify data sources that are currently being backed up and where these backups are being stored. Refer to REL09-BP01 Identify and back up all data that needs to be backed up, or reproduce the data from sources for guidance on how to implement this.

-

Establish criteria for data validation for each data source. Different types of data will have different properties which might require different validation mechanisms. Consider how this data might be validated before you are confident to use it in production. Some common ways to validate data are using data and backup properties such as data type, format, checksum, size, or a combination of these with custom validation logic. For example, this might be a comparison of the checksum values between the restored resource and the data source at the time the backup was created.

-

Establish RTO and RPO for restoring the data based on data criticality. Refer to REL13-BP01 Define recovery objectives for downtime and data loss for guidance on how to implement this.

-

Assess your recovery capability. Review your backup and restore strategy to understand if it can meet your RTO and RPO, and adjust the strategy as necessary. Using AWS Resilience Hub, you can run an assessment of your workload. The assessment evaluates your application configuration against the resiliency policy and reports if your RTO and RPO targets can be met.

-

Do a test restore using currently established processes used in production for data restoration. These processes depend on how the original data source was backed up, the format and storage location of the backup itself, or if the data is reproduced from other sources. For example, if you are using a managed service such as AWS Backup, this might be as simple as restoring the backup into a new resource. If you used AWS Elastic Disaster Recovery you can launch a recovery drill.

-

Validate data recovery from the restored resource (from the previous step) based on criteria you previously established for data validation in step 2. Does the restored and recovered data contain the most recent record/item at the time of backup? Does this data fall within the RPO for the workload?

-

Measure time required for restore and recovery and compare it to RTO established earlier in step 3. Does this process fall within the RTO for the workload? For example, compare the timestamps from when the restoration process started and when the recovery validation completed to calculate how long this process takes. All AWS API calls are timestamped and this information is available in AWS CloudTrail. While this information can provide details on when the restore process started, the end timestamp for when the validation was completed should be recorded by your validation logic. If using an automated process, then services like Amazon DynamoDB

can be used to store this information. Additionally, many AWS services provide an event history which provides timestamped information when certain actions occurred. Within AWS Backup, backup and restore actions are referred to as Jobs, and these Jobs contain timestamp information as part of its metadata which can be used to measure time required for restoration and recovery. -

Notify stakeholders if data validation fails, or if the time required for restoration and recovery exceeds the established RTO for the workload. When implementing automation to do this, such as in this lab

, services like Amazon Simple Notification Service (Amazon SNS) can be used to send push notifications such as email or SMS to stakeholders. These messages can also be published to messaging applications such as Amazon Chime, Slack, or Microsoft Teams or used to create tasks as OpsItems using AWS Systems Manager OpsCenter. -

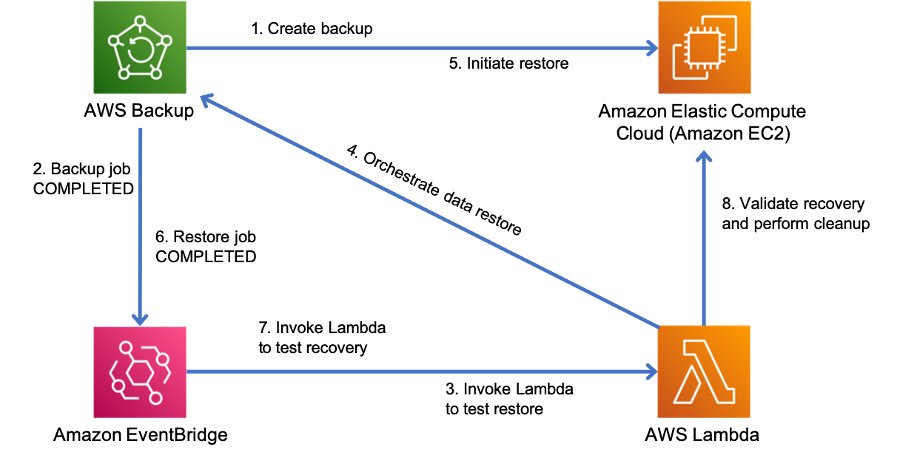

Automate this process to run periodically. For example, services like AWS Lambda or a State Machine in AWS Step Functions can be used to automate the restore and recovery processes, and Amazon EventBridge can be used to trigger this automation workflow periodically as shown in the architecture diagram below. Learn how to Automate data recovery validation with AWS Backup

. Additionally, this Well-Architected lab provides a hands-on experience on one way to do automation for several of the steps here.

Figure 9. An automated backup and restore process
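The measurement and notification steps above can be sketched as follows. This is an illustrative outline, not a complete automation. It assumes boto3-style clients for AWS Backup and Amazon SNS are passed in, and the function names, topic ARN, and workload name are hypothetical. The restore job's own timestamps cover only the restore, so the time spent on validation is assumed to be recorded by your validation logic and added by the caller.

```python
from datetime import timedelta


def restore_job_duration(backup_client, restore_job_id):
    """Return how long a completed AWS Backup restore job took.

    `backup_client` is assumed to be a boto3 AWS Backup client; the job
    metadata carries CreationDate and CompletionDate timestamps.
    """
    job = backup_client.describe_restore_job(RestoreJobId=restore_job_id)
    if job["Status"] != "COMPLETED":
        raise RuntimeError(f"restore job {restore_job_id} is {job['Status']}")
    return job["CompletionDate"] - job["CreationDate"]


def notify_if_failed(sns_client, topic_arn, workload, total_duration, rto,
                     validation_failures):
    """Publish an SNS message if validation failed or the RTO was exceeded.

    total_duration should include both the restore and the validation time.
    Returns True if a notification was sent, False otherwise.
    """
    problems = list(validation_failures)
    if total_duration > rto:
        problems.append(
            f"restore and validation took {total_duration}, exceeding the RTO of {rto}"
        )
    if not problems:
        return False

    sns_client.publish(
        TopicArn=topic_arn,
        # SNS subjects must be shorter than 100 characters.
        Subject=f"Recovery test failed: {workload}"[:99],
        Message="\n".join(problems),
    )
    return True
```

In a scheduled workflow, a function like this could run as the final step after the restore and validation steps, so that stakeholders hear about a failed recovery test without anyone having to check the results manually.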

Level of effort for the Implementation Plan: Moderate to high depending on the complexity of the validation criteria.

Resources

Related documents:

Related examples: