CloudFront configuration best practices

You can further improve the performance and resilience of your media workloads by following the configuration best practices outlined below.

Cache Hit Ratio

The Cache Hit Ratio (CHR) is a key metric for CDNs. The CHR is defined as:

$$\mathrm{CHR} = \frac{\text{number of requests served directly from the cache}}{\text{total number of requests}} \times 100\%$$

In CloudFront, the CHR counts only requests with the result type `Hit` as requests served directly from the cache. The `x-edge-result-type` field in the CloudFront logs lists the other result types. The CHR is expressed as a percentage over a measurement interval. Maximizing the CHR directly improves performance because it reduces response latency. A poor CHR causes more cache misses, which degrades overall performance and increases the load on the origin.
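The following Python sketch illustrates the CHR formula by computing the ratio from the `x-edge-result-type` values in a CloudFront standard log file. It assumes tab-separated request lines preceded by a `#Fields:` header. As described above, it counts only `Hit` as a cache hit. The function names and the sample log lines are illustrative and are not part of any AWS SDK or real log.

```python
from collections import Counter
from typing import Iterable, Iterator


def result_types_from_log(lines: Iterable[str]) -> Iterator[str]:
    """Yield the x-edge-result-type value of each request in a standard log file."""
    index = None
    for line in lines:
        if line.startswith("#Fields:"):
            # Locate the column by name instead of hard-coding its position.
            index = line[len("#Fields:"):].split().index("x-edge-result-type")
        elif line.startswith("#") or not line.strip():
            continue  # Skip other header lines (such as #Version) and blank lines.
        elif index is not None:
            yield line.rstrip("\n").split("\t")[index]


def cache_hit_ratio(result_types: Iterable[str]) -> float:
    """Return the CHR as a percentage, counting only 'Hit' as served from the cache."""
    counts = Counter(result_types)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return 100.0 * counts["Hit"] / total


# Hypothetical, heavily shortened log: real logs contain many more fields.
sample_log = [
    "#Version: 1.0",
    "#Fields: date time x-edge-location x-edge-result-type",
    "2024-01-01\t00:00:01\tLOC1\tHit",
    "2024-01-01\t00:00:02\tLOC1\tMiss",
    "2024-01-01\t00:00:03\tLOC1\tHit",
    "2024-01-01\t00:00:04\tLOC1\tRefreshHit",
]
print(cache_hit_ratio(result_types_from_log(sample_log)))  # 50.0
```

Note that `RefreshHit` is not counted as a hit here, which follows the definition above.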

You can further maximize the CHR value using the following recommendations:

General settings

- Optimize the cache key settings by using CloudFront policies:

  - Cache policies: specify the headers, query parameters, or cookies that must be part of the cache key.

  - Origin request policies: specify the headers, query parameters, or cookies that should be forwarded to the origin but do not need to be part of the cache key.

  - Response headers policies: specify which headers CloudFront adds to or removes from the responses it sends to viewers, such as CORS headers.

- Use cache policies optimized for a specific origin. For example, the managed Elemental-MediaPackage cache policy is optimized for VOD workloads that use AWS Elemental MediaPackage as a CloudFront origin.

- If managing Cache-Control directives at the origin is too complicated, you can set the Minimum, Default, and Maximum TTL in CloudFront instead.

-

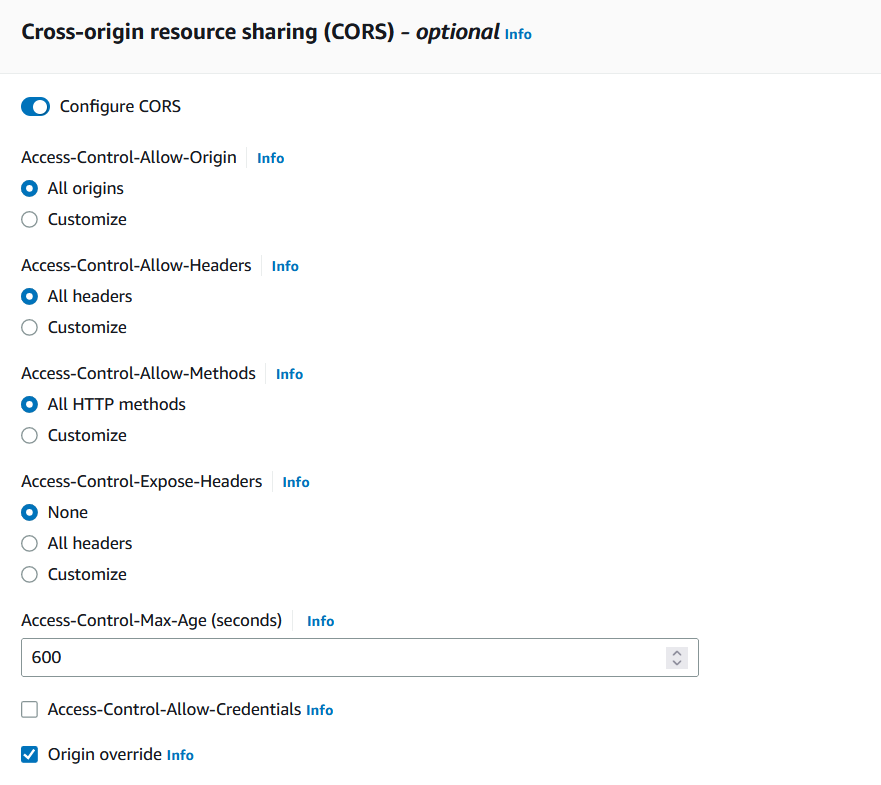

Avoid forwarding CORS headers to the origin. Instead, choose from a number of managed response header policies that include preconfigured values for CORS and security header values. You can also optionally configure a custom response header policy.

Figure 4 – Example of CloudFront CORS Response Header Policy

- Remove the Accept-Encoding header from the cache key for video content by disabling compression support in the cache policy. The origin already compresses video content during encoding, using a format suited to this type of content. Additional HTTP compression therefore gains little. Keeping Accept-Encoding in the cache key can also split the same object into several cache entries.
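The following Python sketch illustrates the Minimum, Default, and Maximum TTL bullet. It models how CloudFront chooses a cache duration from those three values and the origin's Cache-Control header, based on our reading of the CloudFront documentation on cache expiration. The model is deliberately simplified (for example, it ignores the Expires header). `effective_ttl` is a hypothetical helper, not an AWS API.

```python
from typing import Optional


def effective_ttl(min_ttl: int, default_ttl: int, max_ttl: int,
                  cache_control: Optional[str] = None) -> int:
    """Simplified model of the TTL (in seconds) CloudFront applies to an object."""
    directives = {}
    for part in (cache_control or "").split(","):
        name, _, value = part.strip().lower().partition("=")
        if name:
            directives[name] = value.strip().strip('"')

    # no-cache / no-store / private: not cached when Min TTL is 0, otherwise cached for Min TTL.
    if directives.keys() & {"no-cache", "no-store", "private"}:
        return min_ttl

    # s-maxage takes precedence over max-age; the value is clamped to [Min TTL, Max TTL].
    for name in ("s-maxage", "max-age"):
        if directives.get(name, "").isdigit():
            return min(max(int(directives[name]), min_ttl), max_ttl)

    # The origin sent no freshness directive: fall back to the Default TTL.
    return default_ttl


print(effective_ttl(1, 86400, 31536000, "max-age=0"))  # 1
print(effective_ttl(1, 86400, 31536000, None))         # 86400
print(effective_ttl(0, 86400, 31536000, "no-store"))   # 0
```

The `no-store` case matters for the live streaming recommendations. In this model, a Min TTL greater than 0 takes precedence over no-cache, no-store, and private directives from the origin.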

Settings for live streaming

- Configure separate cache behaviors for video manifests and segments:

  - Manifest cache behavior (applicable to HLS media playlist manifests): configure a cache policy with Min TTL set to 1 second and Default TTL close to half the segment duration. If the workflow includes server-side ad insertion (SSAI), disable manifest caching by setting all TTL values to 0.

  - Segment cache behavior: configure a cache policy with Min TTL set to 1 second and Default TTL greater than twice the segment duration, typically based on the event duration.
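The following Python sketch applies the cache behavior rules above by deriving both cache policies from the segment duration. The dictionaries follow the shape of the `CachePolicyConfig` parameter of boto3's `create_cache_policy`. Several choices are assumptions for illustration: the policy names, the Max TTL values, and the `ll_hls` option, which adds the LL-HLS query parameters described below to the manifest cache key. Both policies disable compression, so Accept-Encoding stays out of the cache key.

```python
from typing import Dict, Sequence

LL_HLS_QUERY_PARAMS = ("_HLS_msn", "_HLS_part", "_HLS_skip")


def _policy(name: str, min_ttl: int, default_ttl: int, max_ttl: int,
            query_strings: Sequence[str] = ()) -> Dict:
    qs = list(query_strings)
    return {
        "Name": name,  # hypothetical policy name
        "MinTTL": min_ttl,
        "DefaultTTL": default_ttl,
        "MaxTTL": max_ttl,
        "ParametersInCacheKeyAndForwardedToOrigin": {
            # Video is compressed during encoding, so keep Accept-Encoding out of the cache key.
            "EnableAcceptEncodingGzip": False,
            "EnableAcceptEncodingBrotli": False,
            "HeadersConfig": {"HeaderBehavior": "none"},
            "CookiesConfig": {"CookieBehavior": "none"},
            "QueryStringsConfig": (
                {"QueryStringBehavior": "whitelist",
                 "QueryStrings": {"Quantity": len(qs), "Items": qs}}
                if qs else {"QueryStringBehavior": "none"}
            ),
        },
    }


def live_cache_policies(segment_duration: int, ssai: bool = False,
                        ll_hls: bool = False, event_duration: int = 0) -> Dict[str, Dict]:
    """Build manifest and segment cache policies from a segment duration in seconds."""
    if segment_duration < 1:
        raise ValueError("segment_duration must be at least 1 second")

    if ssai:
        # SSAI: personalized manifests must not be cached, so every TTL is 0.
        manifest = _policy("live-manifests", 0, 0, 0)
    else:
        # Min TTL 1 s, Default TTL close to half the segment duration (Max TTL is an assumption).
        half = max(1, segment_duration // 2)
        manifest = _policy("live-manifests", 1, half, segment_duration,
                           LL_HLS_QUERY_PARAMS if ll_hls else ())

    # Segments: Default TTL greater than twice the segment duration, or the event duration if longer.
    segment_ttl = max(2 * segment_duration + 1, event_duration)
    segments = _policy("live-segments", 1, segment_ttl, segment_ttl)
    return {"manifest": manifest, "segment": segments}


policies = live_cache_policies(6)
print(policies["manifest"]["DefaultTTL"], policies["segment"]["DefaultTTL"])  # 3 13
```

The code does not call AWS. Each dictionary could be passed as `CachePolicyConfig` to a boto3 CloudFront client after you review it against the current API reference.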

For LL-HLS, you can further optimize content delivery by following these additional recommendations:

- Add the LL-HLS query string parameters to the cache key for playlist manifest files. LL-HLS introduces special query string parameters that are added to the URL of a playlist. For example, the client can request a playlist manifest that includes a specific partial segment by using a URL such as `…/playlist.m3u8?_HLS_msn=10&_HLS_part=2`. This request instructs CloudFront and the origin not to respond until a playlist is available that contains part 2 of segment 10. Therefore, add the `_HLS_msn` and `_HLS_part` query parameters to the cache key. The max-age value is expected to be set close to the segment length.

- Prevent caching of playlist manifest delta updates for LL-HLS. By specifying the query parameter `_HLS_skip=YES`, the client can request a playlist that contains only a certain number of segments from the live edge. To make sure the origin delivers these delta manifests directly, do two things. First, add the `_HLS_skip` query parameter to the cache key. Second, set the Cache-Control directive in the response to `no-cache`, `no-store`, or `private`, either directly on the origin or by using Lambda@Edge with the origin response trigger.
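The following Python sketch shows the Lambda@Edge option as an origin response handler. It assumes the standard Lambda@Edge event structure, in which an origin response event carries both the request and the response and headers are keyed by lowercase name. The handler treats any request that includes `_HLS_skip` as a delta update and overwrites its Cache-Control header with `no-cache, no-store`. According to the CloudFront documentation on cache expiration, these directives prevent caching only when the cache policy's Min TTL is 0. With a Min TTL greater than 0, CloudFront caches the response for the Min TTL.

```python
from urllib.parse import parse_qs


def handler(event, context):
    """Lambda@Edge origin response handler: keep LL-HLS delta playlists out of the cache."""
    cf = event["Records"][0]["cf"]
    request, response = cf["request"], cf["response"]

    # Lambda@Edge passes the query string without the leading '?'.
    params = parse_qs(request.get("querystring", ""), keep_blank_values=True)
    if "_HLS_skip" in params:
        # Overwrite whatever caching directive the origin sent.
        response["headers"]["cache-control"] = [
            {"key": "Cache-Control", "value": "no-cache, no-store"}
        ]
    return response
```

For example, an event whose request has the query string `_HLS_msn=10&_HLS_skip=YES` comes back with the new Cache-Control header. A request without `_HLS_skip` passes through unchanged.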

Origin settings

Optimize origin settings to further improve origin security, reduce latency, and increase availability by using the following guidelines.

General settings

- Use Origin Access Control (OAC) to restrict access to an S3 origin. OAC is a managed access control feature in Amazon CloudFront that helps ensure your S3 bucket origin is not directly exposed to the internet.

- Enable origin failover by creating an origin group. When configuring the failover criteria, verify which response codes your origin returns in failure scenarios.
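The following Python sketch acts on the failover guidance by building the origin group explicitly and validating its status codes. It returns an item in the shape of the `OriginGroups` element of a boto3 distribution configuration. The origin IDs are hypothetical. The set of accepted failover status codes reflects our understanding of the current CloudFront documentation, so verify it before relying on it. The example deliberately leaves out 404. Players can request segments before they exist, and such a response is not an origin failure.

```python
from typing import Dict, Iterable

# Status codes CloudFront accepts as origin failover criteria (as we understand the docs).
FAILOVER_STATUS_CODES = {400, 403, 404, 416, 500, 502, 503, 504}


def origin_group(group_id: str, primary_id: str, secondary_id: str,
                 status_codes: Iterable[int]) -> Dict:
    """Build an OriginGroups item that fails over from primary to secondary on the given codes."""
    codes = sorted(set(status_codes))
    unsupported = set(codes) - FAILOVER_STATUS_CODES
    if not codes or unsupported:
        raise ValueError(f"invalid failover status codes: {sorted(unsupported) or 'none given'}")
    return {
        "Id": group_id,
        "FailoverCriteria": {"StatusCodes": {"Quantity": len(codes), "Items": codes}},
        # The first member is the primary origin; the second is used on failover.
        "Members": {"Quantity": 2, "Items": [{"OriginId": primary_id},
                                              {"OriginId": secondary_id}]},
    }


# Hypothetical origin IDs; only server-side errors trigger failover here.
group = origin_group("live-origin-group", "packager-primary", "packager-secondary",
                     [504, 500, 502, 503])
```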

Settings for live streaming

- Enable Origin Shield in the AWS Region closest to your origin. Origin Shield can reduce the load on your origin in other scenarios discussed in this whitepaper as well. For live streaming events, it is highly recommended because of the high number of concurrent requests.

- Set the connection and response timeouts to a value between half the segment duration and the full segment duration. The same settings are recommended for an origin hosting ad content.

- Set the keep-alive timeout to be longer than the segment duration. The same setting is recommended for an origin hosting advertisements.

- Set the connection timeout to between half a segment and a full segment duration, and choose the number of connection attempts so that all attempts together fit within one segment duration. For example, if the segment duration is 4 seconds, set the connection attempts to 2 and the connection timeout to 2 seconds. The same settings are recommended for an origin hosting ad content.
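The live streaming origin rules above reduce to simple arithmetic on the segment duration. The following Python sketch derives the timeouts and returns a partial `Origin` fragment in boto3's shape. A real origin also needs fields such as `Id`, `DomainName`, and the protocol settings. For a 4-second segment, the sketch yields:

- 2 connection attempts with a 2-second connection timeout, matching the example above
- a 4-second response timeout
- a 5-second keep-alive timeout

The limits in `BOUNDS` are assumptions, so check the current CloudFront quotas.

```python
import math
from typing import Dict

# Assumed allowed range for each setting; verify against current CloudFront quotas.
BOUNDS = {
    "ConnectionAttempts": (1, 3),
    "ConnectionTimeout": (1, 10),
    "OriginReadTimeout": (1, 60),
    "OriginKeepaliveTimeout": (1, 60),
}


def _clamp(name: str, value: int) -> int:
    low, high = BOUNDS[name]
    return max(low, min(high, value))


def live_origin_settings(segment_duration: int, shield_region: str) -> Dict:
    """Partial Origin fields (boto3 shape) derived from the segment duration in seconds."""
    if segment_duration < 1:
        raise ValueError("segment_duration must be at least 1 second")
    # Connection timeout of about half a segment; attempts sized so they fit in one segment.
    timeout = _clamp("ConnectionTimeout", math.ceil(segment_duration / 2))
    attempts = _clamp("ConnectionAttempts", segment_duration // timeout)
    return {
        "ConnectionAttempts": attempts,
        "ConnectionTimeout": timeout,
        "CustomOriginConfig": {
            # Response timeout at the upper end of the half-segment to full-segment range.
            "OriginReadTimeout": _clamp("OriginReadTimeout", segment_duration),
            # Keep-alive timeout longer than one segment duration.
            "OriginKeepaliveTimeout": _clamp("OriginKeepaliveTimeout", segment_duration + 1),
        },
        # Origin Shield in the Region closest to the origin (the Region is an input here).
        "OriginShield": {"Enabled": True, "OriginShieldRegion": shield_region},
    }


print(live_origin_settings(4, "us-east-1"))
```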

Long tail content

VOD content tends to be viewed most often when it is new. The frequency of viewer requests usually decreases over time, until the content is typically no longer present in the cache. This is known as long tail content.

Even when it is not served from the cache, long tail content still benefits from the same CloudFront acceleration as dynamic content. You can optimize your architecture for long tail content by replicating the origin to multiple Regions and routing requests to the nearest Region. How you do this depends on the origin type:

- For Amazon S3 origins, you can use a Lambda@Edge function on origin requests. The function detects the Region in which it is executing and routes the request to the nearest Region based on a static mapping table.

- For non-Amazon S3 origins, you can configure a domain name in Amazon Route 53 with a latency-based routing policy pointing to the different Regions. Then configure an origin in CloudFront with this domain name.
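The following Python sketch shows the Lambda@Edge approach for Amazon S3 origins as an origin request handler. It relies on the `AWS_REGION` environment variable, which Lambda@Edge sets to the Region where the function runs. The replica bucket names, the naming scheme, and the mapping table are hypothetical. Each replica bucket is assumed to grant the distribution read access.

```python
import os

# Hypothetical static mapping: Region where the function runs -> nearest replica Region.
NEAREST_REPLICA = {
    "us-east-1": "us-east-1",
    "us-east-2": "us-east-1",
    "us-west-1": "us-west-2",
    "us-west-2": "us-west-2",
    "eu-west-1": "eu-west-1",
    "eu-west-2": "eu-west-1",
    "eu-central-1": "eu-west-1",
}
DEFAULT_REPLICA = "us-east-1"


def bucket_domain(region: str) -> str:
    # Hypothetical bucket naming scheme: one replica bucket "vod-replica-<region>" per Region.
    return f"vod-replica-{region}.s3.{region}.amazonaws.com"


def handler(event, context):
    """Lambda@Edge origin request handler: send S3 requests to the nearest replica bucket."""
    request = event["Records"][0]["cf"]["request"]
    s3_origin = request.get("origin", {}).get("s3")
    if s3_origin is None:
        return request  # Not an S3 origin; leave the request unchanged.

    # Lambda@Edge sets AWS_REGION to the Region in which the function is executing.
    replica = NEAREST_REPLICA.get(os.environ.get("AWS_REGION", ""), DEFAULT_REPLICA)
    domain = bucket_domain(replica)
    s3_origin["domainName"] = domain
    s3_origin["region"] = replica
    # The Host header must match the new bucket domain.
    request["headers"]["host"] = [{"key": "Host", "value": domain}]
    return request
```

An origin request trigger runs only when CloudFront forwards a request to the origin. The routing logic therefore adds nothing to requests served from the cache.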

If you choose a multi-CDN strategy, you can improve the cache hit ratio by serving similar requests from the same cache. You can either reduce the number of CDNs used for long tail content, or implement content-based sharding across CDNs, where each CDN serves a different subset of the VOD library.
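Content-based sharding needs a deterministic mapping from asset to CDN, so that every viewer's request for the same asset lands in the same cache. The following Python sketch derives that mapping from a hash of the asset ID. The asset ID format and the CDN hostnames are hypothetical.

```python
import hashlib
from typing import Sequence


def cdn_for_asset(asset_id: str, cdn_hosts: Sequence[str]) -> str:
    """Deterministically assign a VOD asset to one CDN so its requests share one cache."""
    if not cdn_hosts:
        raise ValueError("at least one CDN host is required")
    digest = hashlib.sha256(asset_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the hash as an integer and map it onto the host list.
    return cdn_hosts[int.from_bytes(digest[:8], "big") % len(cdn_hosts)]


# Hypothetical CDN hostnames; every player resolves the same asset to the same CDN.
hosts = ["cdn-a.example.com", "cdn-b.example.com"]
print(cdn_for_asset("movies/title-1234", hosts))
```

Plain modulo hashing reassigns most assets when a CDN is added or removed, which temporarily lowers the cache hit ratio. Consistent hashing or rendezvous hashing limits that reshuffling.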

Troubleshooting playback issues

During playback, the player can encounter issues severe enough to interrupt playback and stop the player with an error. Playback errors have various causes: poor network conditions, temporary issues at the origin, a badly tuned player, or a CDN misconfiguration. Whatever the cause, an error results in a poor viewer experience, because viewers most likely have to reload the web page or application. To reduce playback errors, review your architecture and make the following configuration improvements:

- Offer lower bitrate versions of the stream so that the player can maintain playback during temporary periods of poor network conditions.

-

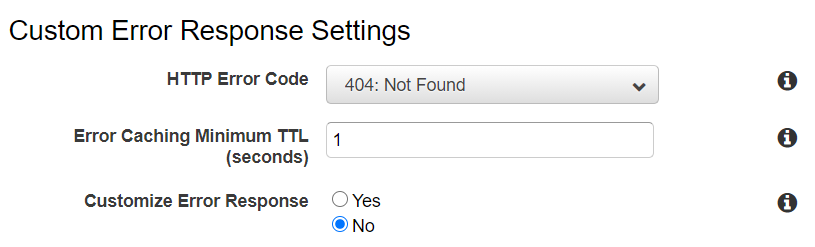

Reducing Error Caching Minimum TTL. This value in CloudFront configuration dictates how long the error response generated by the origin is returned to the viewers before the cache host make another attempt to retrieve the same object. By default, this value is 10 seconds. Because some players tend to request video segments before they become available, the response can be a

403or404. This response would be served to other viewers as well for 10 seconds, if you rely on default settings.By knowing possible error codes that the origin could return, you can reduce this time setting appropriately.

Figure 5 – Example Custom Error Response Settings

- Include the root certificate authority (CA) certificate in the certificate chain associated with the domain name used for media delivery. For HTTPS-based delivery, some viewer device platforms can fail to establish a TLS connection when the root CA is missing. Note that you need to supply a certificate chain when importing a certificate into AWS Certificate Manager (ACM). Public certificates issued through ACM include the root CA certificate in the certificate chain.
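You can express the Error Caching Minimum TTL recommendation as the `CustomErrorResponses` element of a distribution configuration. The arithmetic shows why it matters: with 2-second segments, the default 10-second error caching keeps serving a 404 for the span of 10 / 2 = 5 segments. The following Python sketch builds that element in the boto3 shape. The 1-second value for 403 and 404 is an illustrative assumption, not a value prescribed by this whitepaper.

```python
from typing import Dict


def custom_error_responses(min_ttls: Dict[int, int]) -> Dict:
    """Build a CustomErrorResponses element from {HTTP error code: Error Caching Minimum TTL}."""
    items = []
    for code, ttl in sorted(min_ttls.items()):
        if ttl < 0:
            raise ValueError(f"TTL for {code} must not be negative")
        items.append({"ErrorCode": code, "ErrorCachingMinTTL": ttl})
    return {"Quantity": len(items), "Items": items}


# Segments requested before they exist may return 403 or 404; cache those errors only briefly.
errors = custom_error_responses({404: 1, 403: 1})
print(errors["Items"][0])  # {'ErrorCode': 403, 'ErrorCachingMinTTL': 1}
```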