Training a custom model for the AWS DeepComposer Music studio

You can train a custom model in the AWS DeepComposer console using a supported generative AI technique and algorithm. Then you can use the model in the AWS DeepComposer music studio to generate compositions.

AWS DeepComposer supports training custom models through the generative adversarial network (GAN) architecture. You can train a model using the MuseGAN or U-Net algorithm. Each algorithm supports different training datasets. To use the symphony, jazz, pop, or rock genre-based datasets, choose the MuseGAN algorithm. The U-Net algorithm is limited to a training dataset based on music by Johann Sebastian Bach.
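The algorithm and dataset pairing rule in the preceding paragraph can be expressed as a small lookup table. This is a minimal sketch for illustration only. `SUPPORTED_DATASETS`, `algorithms_for`, and `check_choice` are hypothetical names, not part of any AWS DeepComposer API, and the string `"Bach"` is a stand-in label for the Bach-based dataset, not necessarily the name the console uses.

```python
# Hypothetical lookup of which training datasets each algorithm supports,
# based on the pairing rule described in the text (not an AWS API).
SUPPORTED_DATASETS = {
    "MuseGAN": {"symphony", "jazz", "pop", "rock"},
    "U-Net": {"Bach"},  # stand-in label for the Bach-based dataset
}


def algorithms_for(dataset: str) -> list[str]:
    """Return the algorithms that can train on the given dataset."""
    return sorted(
        algo for algo, datasets in SUPPORTED_DATASETS.items() if dataset in datasets
    )


def check_choice(algorithm: str, dataset: str) -> None:
    """Raise ValueError if the algorithm does not support the dataset."""
    if dataset not in SUPPORTED_DATASETS.get(algorithm, set()):
        raise ValueError(f"{algorithm} does not support the {dataset!r} dataset")


print(algorithms_for("jazz"))  # ['MuseGAN']
check_choice("U-Net", "Bach")  # valid pairing, so no exception is raised
```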

After training a custom model, you can perform inference using an input track in the AWS DeepComposer music studio. When you perform inference, you use your custom trained model to generate a new composition.


If you use the default values to train your custom model, training takes approximately 8 hours. To shorten training, you can reduce the number of training epochs. However, reducing the number of epochs too much might result in a poorly trained model.
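To get a rough sense of the trade-off, you can scale the 8-hour figure by the ratio of epochs. This is a minimal sketch that assumes each epoch takes about the same amount of time, which is a simplification. The default epoch count is not stated here, so `default_epochs` is a value you supply. The function name is hypothetical.

```python
def estimate_training_hours(
    epochs: int, default_epochs: int, default_hours: float = 8.0
) -> float:
    """Scale the approximate default training time linearly with epoch count.

    Assumes every epoch takes roughly the same wall-clock time; actual
    training times can differ.
    """
    if epochs <= 0 or default_epochs <= 0:
        raise ValueError("epoch counts must be positive")
    return default_hours * epochs / default_epochs


# Hypothetical example: if the default were 200 epochs, training for 50
# epochs would take roughly a quarter of the time.
print(estimate_training_hours(50, default_epochs=200))  # 2.0
```

A linear estimate like this only tells you how long training might take. It says nothing about model quality, which is why cutting epochs too aggressively can still produce a poorly trained model.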

Note

Before proceeding further, make sure you have signed up for an AWS account and signed in to the AWS DeepComposer console.

To train a custom model

-

Open the AWS DeepComposer

console. -

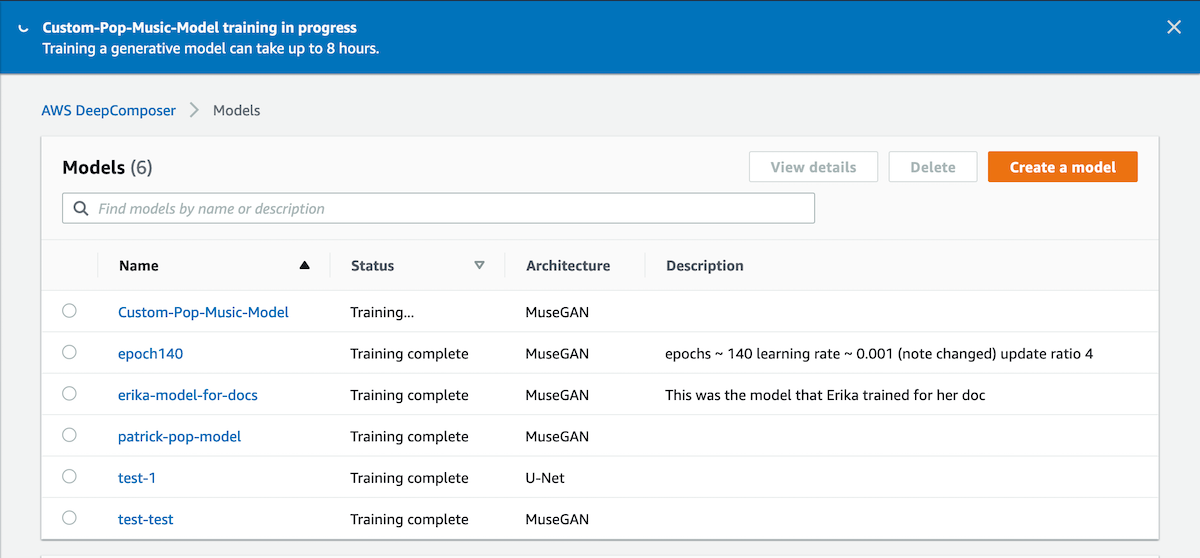

In the navigation pane, choose Models.

- On the Models page, choose Create a model.

- On the Train a model page, under Generative algorithm, choose MuseGAN.

Both the MuseGAN and U-Net algorithms are based on convolutional neural networks (CNNs). These CNNs generate a high-level image-based representation of music called a piano roll, which is used to generate the output tracks.

- For Training dataset, choose jazz.

- For Hyperparameters, choose the following values.

Hyperparameters are algorithm-dependent variables that you control. You can tune the hyperparameters to find the best fit for the specific problem that you are trying to model.

Hyperparameter    Value
…                 100
…                 0.001
…                 4

- Under Model details, give your model a name and an optional description.

- Choose Start training.

- To check the status of training, in the navigation pane, choose Models. The training status appears under Status.

After the model has been trained successfully, the status changes to Training complete.
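The MuseGAN step in the procedure above notes that both algorithms work with a piano roll, an image-like representation of music. The following sketch shows what a single-track piano roll can look like: a binary grid in which rows are time steps, columns are MIDI pitches, and a 1 means the pitch sounds at that step. It assumes a simple note format of `(pitch, start_step, end_step)` and uses hypothetical names (`Note`, `to_piano_roll`). It is an illustration of the general idea, not the exact tensor layout AWS DeepComposer uses. Multi-track models such as MuseGAN typically stack one such grid per instrument track.

```python
# Hypothetical note format: (MIDI pitch, start time step, end time step),
# where the end step is exclusive.
Note = tuple[int, int, int]


def to_piano_roll(
    notes: list[Note], num_steps: int, num_pitches: int = 128
) -> list[list[int]]:
    """Build a binary piano roll: rows are time steps, columns are pitches."""
    roll = [[0] * num_pitches for _ in range(num_steps)]
    for pitch, start, end in notes:
        if not 0 <= pitch < num_pitches:
            raise ValueError(f"pitch {pitch} out of range")
        # Mark the pitch as active for every step it is held, clipped to the grid.
        for step in range(max(start, 0), min(end, num_steps)):
            roll[step][pitch] = 1
    return roll


# C major triad (C4, E4, G4) held for steps 0-3, then C5 for steps 4-7.
melody = [(60, 0, 4), (64, 0, 4), (67, 0, 4), (72, 4, 8)]
roll = to_piano_roll(melody, num_steps=8)

# List the active pitches at each time step.
print([[p for p, on in enumerate(row) if on] for row in roll])
# [[60, 64, 67], [60, 64, 67], [60, 64, 67], [60, 64, 67], [72], [72], [72], [72]]
```

Because the result is a fixed-size 2D grid, convolutional layers can process it much like a grayscale image, which is why CNN-based generators work with this representation.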

When training is complete, your model automatically appears in both AWS DeepComposer music studio experiences, where you can use it to create compositions.

To learn more about using your custom model, refer to the topic on creating compositions with a trained model in AWS DeepComposer.