End of support notice: On December 15, 2025, AWS will end support for AWS IoT Analytics. After December 15, 2025, you will no longer be able to access the AWS IoT Analytics console or AWS IoT Analytics resources. For more information, see AWS IoT Analytics end of support.

What is AWS IoT Analytics?

AWS IoT Analytics automates the steps required to analyze data from IoT devices. AWS IoT Analytics filters, transforms, and enriches IoT data before storing it in a time-series data store for analysis. You can set up the service to collect only the data you need from your devices, apply mathematical transforms to process the data, and enrich the data with device-specific metadata such as device type and location before storing it. Then, you can analyze your data by running queries using the built-in SQL query engine, or perform more complex analytics and machine learning inference. AWS IoT Analytics enables advanced data exploration through integration with Jupyter Notebook.

Traditional analytics and business intelligence tools are designed to process structured data. Raw IoT data often comes from devices that record less structured data (such as temperature, motion, or sound). As a result, the data from these devices can have significant gaps, corrupted messages, and false readings that must be cleaned up before analysis can occur. Also, IoT data is often meaningful only in the context of other data from external sources. AWS IoT Analytics lets you address these issues and collect large amounts of device data, process messages, and store them. You can then query the data and analyze it. AWS IoT Analytics includes pre-built models for common IoT use cases so that you can answer questions such as which devices are about to fail or which customers are at risk of abandoning their wearable devices.

How to use AWS IoT Analytics

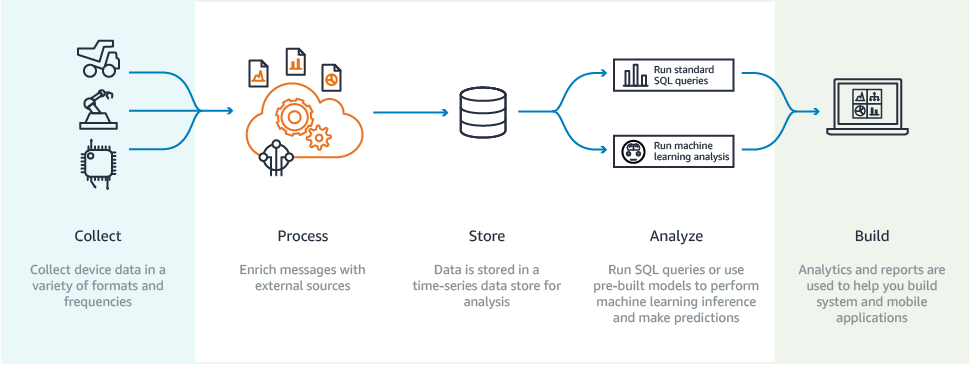

The following graphic shows an overview of how you can use AWS IoT Analytics.

Key features

- Collect
  - Integrated with AWS IoT Core: AWS IoT Analytics is fully integrated with AWS IoT Core so it can receive messages from connected devices as they stream in.
  - Use a batch API to add data from any source: AWS IoT Analytics can receive data from any source through HTTP. That means that any device or service that is connected to the internet can send data to AWS IoT Analytics. For more information, see BatchPutMessage in the AWS IoT Analytics API Reference.
  - Collect only the data you want to store and analyze: You can use the AWS IoT Analytics console to configure AWS IoT Analytics to receive messages from devices through MQTT topic filters in various formats and frequencies. AWS IoT Analytics validates that the data is within specific parameters you define and creates channels. Then, the service routes the channels to appropriate pipelines for message processing, transformation, and enrichment.

- Process
  - Cleanse and filter: AWS IoT Analytics lets you define AWS Lambda functions that are triggered when AWS IoT Analytics detects missing data, so you can run code to estimate and fill gaps. You can also define maximum and minimum filters and percentile thresholds to remove outliers in your data.
  - Transform: AWS IoT Analytics can transform messages using mathematical or conditional logic you define, so that you can perform common calculations such as Celsius-to-Fahrenheit conversion.
  - Enrich: AWS IoT Analytics can enrich data with external data sources such as a weather forecast, and then route the data to the AWS IoT Analytics data store.

- Store
  - Time-series data store: AWS IoT Analytics stores the device data in an optimized time-series data store for faster retrieval and analysis. You can also manage access permissions, implement data retention policies and export your data to external access points.
  - Store processed and raw data: AWS IoT Analytics stores the processed data and also automatically stores the raw ingested data so you can process it at a later time.

- Analyze
  - Run ad hoc SQL queries: AWS IoT Analytics provides a SQL query engine so you can run ad hoc queries and get results quickly. The service enables you to use standard SQL queries to extract data from the data store to answer questions such as the average distance traveled for a fleet of connected vehicles or how many doors in a smart building are locked after 7 PM. These queries can be reused even if connected devices, fleet size, and analytic requirements change.
  - Time-series analysis: AWS IoT Analytics supports time-series analysis so you can analyze the performance of devices over time and understand how and where they are being used, continuously monitor device data to predict maintenance issues, and monitor sensors to predict and react to environmental conditions.
  - Hosted notebooks for sophisticated analytics and machine learning: AWS IoT Analytics includes support for hosted notebooks in Jupyter Notebook for statistical analysis and machine learning. The service includes a set of notebook templates that contain AWS-authored machine learning models and visualizations. You can use the templates to get started with IoT use cases related to device failure profiling, forecasting events such as low usage that might signal the customer will abandon the product, or segmenting devices by customer usage levels (for example, heavy users or weekend users) or device health. After you author a notebook, you can containerize it and run it on a schedule that you specify. For more information, see Automating your workflow.
  - Prediction: You can do statistical classification through a method called logistic regression. You can also use Long Short-Term Memory (LSTM), a neural network technique for predicting the output or state of a process that varies over time. The pre-built notebook templates also support the K-means clustering algorithm for device segmentation, which clusters your devices into cohorts of similar devices. These templates are typically used to profile device health and device state, such as HVAC units in a chocolate factory or wear and tear of blades on a wind turbine. As with other notebooks, these notebook templates can be containerized and run on a schedule.

- Build and visualize
  - Quick Suite integration: AWS IoT Analytics provides a connector to Quick Suite so that you can visualize your data sets in a QuickSight dashboard.
  - Console integration: You can also visualize the results of your ad hoc analysis in the embedded Jupyter Notebook in the AWS IoT Analytics console.
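
The Process features above correspond to pipeline activities. The following Python sketch assembles the parameters for a CreatePipeline request that reads from a channel, converts a Celsius reading to Fahrenheit with a `math` activity, drops out-of-range readings with a `filter` activity, and writes the result to a data store. It only builds the request and does not call AWS. The attribute names `tempC` and `tempF`, the activity names, and the temperature bounds are hypothetical. The activity shapes reflect our reading of the AWS IoT Analytics API Reference, so verify them before use.

```python
import json


def build_pipeline_request(
    pipeline_name: str,
    channel_name: str,
    datastore_name: str,
    min_temp_f: float,
    max_temp_f: float,
) -> dict:
    """Assemble CreatePipeline parameters: channel -> math -> filter -> datastore."""
    activities = [
        # Read raw messages from the channel.
        {"channel": {"name": "readChannel", "channelName": channel_name,
                     "next": "toFahrenheit"}},
        # Transform: add a tempF attribute computed from the hypothetical tempC attribute.
        {"math": {"name": "toFahrenheit", "attribute": "tempF",
                  "math": "(tempC * 9.0 / 5.0) + 32", "next": "dropOutliers"}},
        # Filter: keep only messages whose tempF lies inside the min/max bounds.
        {"filter": {"name": "dropOutliers",
                    "filter": f"tempF >= {min_temp_f} AND tempF <= {max_temp_f}",
                    "next": "store"}},
        # Store: write the processed messages to the data store.
        {"datastore": {"name": "store", "datastoreName": datastore_name}},
    ]
    return {"pipelineName": pipeline_name, "pipelineActivities": activities}


def activity_order(request: dict) -> list:
    """Follow the `next` links from the channel activity and return activity names."""
    by_name = {}
    start = None
    for activity in request["pipelineActivities"]:
        # Each activity dict has exactly one key: its activity type.
        [(kind, body)] = activity.items()
        by_name[body["name"]] = body
        if kind == "channel":
            start = body["name"]
    order = []
    current = start
    while current is not None:
        order.append(current)
        current = by_name[current].get("next")
    return order


if __name__ == "__main__":
    request = build_pipeline_request(
        "temp_pipeline", "temp_channel", "temp_datastore", -40.0, 140.0
    )
    print(json.dumps(request, indent=2))
    print(activity_order(request))
```

With boto3 installed and credentials configured, you would pass the result to `boto3.client("iotanalytics").create_pipeline(**request)`. Because activities are chained by `next`, another step (for example, a Lambda activity for gap filling) could be spliced in by changing a single link.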

AWS IoT Analytics components and concepts

- Channel

  A channel collects data from an MQTT topic and archives the raw, unprocessed messages before publishing the data to a pipeline. You can also send messages to a channel directly using the BatchPutMessage API. The unprocessed messages are stored in an Amazon Simple Storage Service (Amazon S3) bucket that you or AWS IoT Analytics manage.

- Pipeline

  A pipeline consumes messages from a channel and enables you to process the messages before storing them in a data store. The processing steps, called activities (see Pipeline activities), perform transformations on your messages such as removing, renaming, or adding message attributes; filtering messages based on attribute values; invoking your Lambda functions on messages for advanced processing; or performing mathematical transformations to normalize device data.

- Data store

  Pipelines store their processed messages in a data store. A data store is not a database, but it is a scalable and queryable repository of your messages. You can have multiple data stores for messages coming from different devices or locations, or filtered by message attributes depending on your pipeline configuration and requirements. As with unprocessed channel messages, a data store's processed messages are stored in an Amazon S3 bucket that you or AWS IoT Analytics manage.

- Data set

  You retrieve data from a data store by creating a data set. AWS IoT Analytics enables you to create a SQL data set or a container data set.

  After you have a data set, you can explore and gain insights into your data through integration with Quick Suite. You can also perform more advanced analytical functions through integration with Jupyter Notebook. Jupyter Notebook provides data science tools that can perform machine learning and a range of statistical analyses. For more information, see Notebook templates. You can send data set contents to an Amazon S3 bucket, enabling integration with your existing data lakes or access from in-house applications and visualization tools. You can also send data set contents as an input to AWS IoT Events, a service that enables you to monitor devices or processes for failures or changes in operation, and to trigger additional actions when such events occur.

- SQL data set

  A SQL data set is similar to a materialized view from a SQL database. You can create a SQL data set by applying a SQL action. SQL data sets can be generated automatically on a recurring schedule by specifying a trigger.

- Container data set

  A container data set enables you to automatically run your analysis tools and generate results. For more information, see Automating your workflow. It brings together a SQL data set as input, a Docker container with your analysis tools and needed library files, input and output variables, and an optional schedule trigger. The input and output variables tell the executable image where to get the data and store the results. The trigger can run your analysis when a SQL data set finishes creating its content or according to a time schedule expression. A container data set automatically runs, generates and then saves the results of the analysis tools.

- Trigger

  You can automatically create a data set by specifying a trigger. The trigger can be a time interval (for example, create this data set every two hours) or when another data set's content has been created (for example, create this data set when `myOtherDataset` finishes creating its content). Alternatively, you can generate data set content manually by using the CreateDatasetContent API.

- Docker container

  You can create your own Docker container to package your analysis tools or use options that SageMaker AI provides. For more information, see Docker container. You can store a container in an Amazon ECR registry that you specify so it is available to install on your desired platform. Docker containers can run custom analytical code prepared with MATLAB, Octave, Wise.io, SPSS, R, Fortran, Python, Scala, Java, C++, and so on. For more information, see Containerizing a notebook.

- Delta windows

  Delta windows are a series of user-defined, non-overlapping, and contiguous time intervals. Delta windows enable you to create data set content with, and perform analysis on, new data that has arrived in the data store since the last analysis. You create a delta window by setting the `deltaTime` in the `filters` portion of a `queryAction` of a data set. For more information, see the `CreateDataset` API. Usually, you'll want to create the data set content automatically by also setting up a time interval trigger (`triggers:schedule:expression`). This lets you filter messages that have arrived during a specific time window, so the data contained in messages from previous time windows doesn't get counted twice. For more information, see Example 6 -- creating a SQL dataset with a Delta window (CLI).
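
A delta window is configured in the data set definition itself. This Python sketch assembles CreateDataset parameters for a SQL data set whose `queryAction` carries a `deltaTime` filter and whose trigger is a schedule expression. It then models locally why contiguous, non-overlapping windows count each message once. The data set, data store, and `time` attribute names, the `-180` second offset, and the two-hour cron expression are illustrative assumptions; `contiguous_windows` and `bucket` are hypothetical helpers and not part of the service.

```python
from datetime import datetime, timedelta, timezone


def build_delta_dataset_request(
    dataset_name: str,
    datastore_name: str,
    offset_seconds: int = -180,
    schedule_expression: str = "cron(0 0/2 * * ? *)",
) -> dict:
    """Assemble CreateDataset parameters for a SQL data set with a delta window.

    Assumes each message carries a hypothetical epoch-seconds attribute `time`.
    """
    return {
        "datasetName": dataset_name,
        "actions": [
            {
                "actionName": "deltaQuery",
                "queryAction": {
                    "sqlQuery": f"SELECT * FROM {datastore_name}",
                    # deltaTime limits each run to messages new since the last run.
                    "filters": [
                        {
                            "deltaTime": {
                                # Illustrative allowance for in-flight message lag.
                                "offsetSeconds": offset_seconds,
                                "timeExpression": "from_unixtime(time)",
                            }
                        }
                    ],
                },
            }
        ],
        # Time-interval trigger; the default expression fires every two hours.
        "triggers": [{"schedule": {"expression": schedule_expression}}],
    }


def contiguous_windows(start: datetime, end: datetime, width: timedelta) -> list:
    """Split [start, end) into non-overlapping, contiguous [lo, hi) windows."""
    if width <= timedelta(0):
        raise ValueError("width must be positive")
    windows = []
    lo = start
    while lo < end:
        hi = min(lo + width, end)
        windows.append((lo, hi))
        lo = hi  # the next window starts exactly where this one ends
    return windows


def bucket(timestamps: list, windows: list) -> list:
    """Count timestamps per window; each timestamp lands in at most one window."""
    counts = [0] * len(windows)
    for ts in timestamps:
        for i, (lo, hi) in enumerate(windows):
            if lo <= ts < hi:
                counts[i] += 1
                break
    return counts


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
    wins = contiguous_windows(t0, t0 + timedelta(hours=6), timedelta(hours=2))
    stamps = [t0, t0 + timedelta(hours=2), t0 + timedelta(hours=5, minutes=59)]
    print(bucket(stamps, wins))  # [1, 1, 1]
```

To create the data set, you would pass the returned dict to `create_dataset` on a boto3 `iotanalytics` client. The local helpers use half-open `[start, end)` intervals, so a message stamped exactly on a boundary falls only in the later window. This is a modeling choice for the illustration and does not describe how the service resolves boundaries.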

Access AWS IoT Analytics

As part of AWS IoT, AWS IoT Analytics provides the following interfaces to enable your devices to generate data and your applications to interact with the data they generate:

- AWS Command Line Interface (AWS CLI)

  Run commands for AWS IoT Analytics on Windows, OS X, and Linux. These commands enable you to create and manage things, certificates, rules, and policies. To get started, see the AWS Command Line Interface User Guide. For more information about the commands for AWS IoT, see iot in the AWS Command Line Interface Reference.

  Important: Use the `aws iotanalytics` command to interact with AWS IoT Analytics. Use the `aws iot` command to interact with other parts of the IoT system.

- AWS IoT API

  Build your IoT applications using HTTP or HTTPS requests. These API actions enable you to create and manage things, certificates, rules, and policies. For more information, see Actions in the AWS IoT API Reference.

- AWS SDKs

  Build your AWS IoT Analytics applications using language-specific APIs. These SDKs wrap the HTTP and HTTPS API and enable you to program in any of the supported languages. For more information, see AWS SDKs and tools.

- AWS IoT Device SDKs

  Build applications that run on your devices that send messages to AWS IoT Analytics. For more information, see AWS IoT SDKs.

- AWS IoT Analytics Console

  You can build the components to visualize the results in the AWS IoT Analytics console.
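
As an example of the SDK route, the following Python sketch prepares BatchPutMessage requests for a channel. Each reading becomes a JSON payload with a message ID that is unique within its batch. The `max_batch` default of 100 is an assumption about the per-call limit; check the AWS IoT Analytics quotas before relying on it. The channel name and reading fields are hypothetical, and the code only builds requests without contacting AWS.

```python
import json
import uuid
from typing import Iterable, Iterator


def batch_put_requests(
    channel_name: str, readings: Iterable[dict], max_batch: int = 100
) -> Iterator[dict]:
    """Yield BatchPutMessage parameters with at most `max_batch` messages each.

    max_batch=100 is an assumed per-call limit; confirm it against the service quotas.
    """
    if max_batch < 1:
        raise ValueError("max_batch must be at least 1")
    batch = []
    for reading in readings:
        batch.append(
            {
                # messageId must be unique within a batch; a random UUID satisfies that.
                "messageId": uuid.uuid4().hex,
                # The payload is the reading serialized as a JSON object, as bytes.
                "payload": json.dumps(reading).encode("utf-8"),
            }
        )
        if len(batch) == max_batch:
            yield {"channelName": channel_name, "messages": batch}
            batch = []
    if batch:
        # Flush the final, partially filled batch.
        yield {"channelName": channel_name, "messages": batch}


if __name__ == "__main__":
    readings = [{"deviceId": "sensor_1", "tempC": 20 + i} for i in range(3)]
    for request in batch_put_requests("temp_channel", readings, max_batch=2):
        print(request["channelName"], len(request["messages"]))
```

With boto3, you could send each request with `client.batch_put_message(**request)` on an `iotanalytics` client and inspect `batchPutMessageErrorEntries` in the response to find messages that were rejected.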

Use cases

- Predictive maintenance

  AWS IoT Analytics provides templates to build predictive maintenance models and apply them to your devices. For example, you can use AWS IoT Analytics to predict when heating and cooling systems are likely to fail on connected cargo vehicles so the vehicles can be rerouted to prevent shipment damage. Or, an auto manufacturer can detect which of its customers have worn brake pads and alert them to seek maintenance for their vehicles.

- Proactive replenishing of supplies

  AWS IoT Analytics lets you build IoT applications that can monitor inventories in real time. For example, a food and drink company can analyze data from food vending machines and proactively reorder merchandise whenever the supply is running low.

- Process efficiency scoring

  With AWS IoT Analytics, you can build IoT applications that constantly monitor the efficiency of different processes and take action to improve the process. For example, a mining company can increase the efficiency of its ore trucks by maximizing the load for each trip. With AWS IoT Analytics, the company can identify the most efficient load for a location or truck over time, compare any deviations from the target load in real time, and plan better guidelines to improve efficiency.

- Smart agriculture

  AWS IoT Analytics can enrich IoT device data with contextual metadata using AWS IoT registry data or public data sources so that your analysis factors in time, location, temperature, altitude, and other environmental conditions. With that analysis, you can write models that output recommended actions for your devices to take in the field. For example, to determine when to water, irrigation systems might enrich humidity sensor data with data on rainfall, enabling more efficient water usage.