REST API

REST APIs help create APIs that follow the REST architectural style.

While designing a REST API, a key consideration is security. Follow the principle of least privilege when granting access to APIs.

Private endpoint type

To make APIs accessible only from Amazon VPCs, you can use REST APIs with the private endpoint type. The traffic to the APIs will not leave the AWS network. There are four options to invoke a private API through different domain name system (DNS) names:

- Private DNS names
- Custom domain names
- Interface VPC endpoint public DNS hostnames
- Amazon Route 53 alias

While configuring private APIs, there are several key points to consider. The “DNS Names for Private APIs” section provides use cases, pros, and cons about each option.

DNS names for private APIs

Table 1 – Private API DNS names

| DNS names | Private DNS option on VPCs | Pros | Cons |
|---|---|---|---|
| Private DNS names | Enabled | Easy to set up | DNS issue with Regional and edge-optimized APIs |
| Custom domain names | Enabled | Custom domain name for private APIs | Additional setup of a custom domain name in API Gateway |
| Interface VPC endpoint public DNS hostnames | Disabled | The domain name is publicly resolvable | Requires a Host or x-apigw-api-id header in requests |
| Route 53 alias | Disabled | The domain name is publicly resolvable. The Host or x-apigw-api-id header is not required | Requires an interface VPC endpoint association with each private API |

Private DNS names

This option works when the private DNS option on an interface VPC endpoint is enabled. In addition, to resolve the name, AmazonProvidedDNS should be present in the DHCP options set for the clients in the VPC. Because those are the only requirements, this option is usually easy to use for a simple use case such as invoking a private API within a VPC.

However, if you use a custom DNS server, you must set a conditional forwarder on that DNS server that points to AmazonProvidedDNS or the Route 53 Resolver. Because the private DNS option is enabled on the interface VPC endpoint, DNS queries against *.execute-api.amazonaws.com resolve to the private IPs of the endpoint. This causes issues when clients in the VPC try to invoke Regional or edge-optimized APIs, because those types of APIs must be accessed over the internet. Traffic through interface VPC endpoints is not allowed. The only workaround is to use an edge-optimized custom domain name. Refer to Why do I get an HTTP 403 Forbidden error when connecting to my API Gateway APIs from a VPC?
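To see which behavior a client in the VPC is getting, it can help to check whether an execute-api hostname resolves to private or public addresses. The following is a minimal sketch using only the Python standard library; the function names are hypothetical, and the hostname you pass in is whatever API endpoint you want to inspect.

```python
import ipaddress
import socket


def resolve_ips(hostname: str) -> list[str]:
    """Return the distinct IP addresses that the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def resolves_privately(ips: list[str]) -> bool:
    """True when every resolved address is in a private range.

    Private addresses suggest the query was answered with the interface
    VPC endpoint IPs (private DNS enabled) rather than public API
    Gateway addresses.
    """
    return bool(ips) and all(ipaddress.ip_address(ip).is_private for ip in ips)
```

Calling `resolves_privately(resolve_ips(hostname))` from a client in the VPC for a Regional API hostname and getting `True` would be consistent with the DNS issue described above.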

Custom domain names

You can create a custom domain name for your private APIs. Use a custom domain name to provide API callers with a simpler and more intuitive URL. With a private custom domain name, you can reduce complexity, configure security measures during the TLS handshake, and control the certificate lifecycle of your custom domain name using AWS Certificate Manager (ACM).

You can share your custom domain name with another AWS account using AWS Resource Access Manager (AWS RAM) or API Gateway. AWS RAM helps you securely share your resources across AWS accounts and within your organization or organizational units (OUs). Because of this, you can consume a custom domain name from your own AWS account or from another AWS account. For more information, see Custom domain names for private APIs in API Gateway.

VPC endpoint public DNS hostnames

If your use case requires the private DNS option to be turned off, consider using interface VPC endpoint public DNS hostnames. When you create an interface VPC endpoint, it also generates the public DNS hostname. When invoking a private API through the hostname, you must pass a Host or x-apigw-api-id header.
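As an illustration of the header requirement, here is a small sketch that prepares (but does not send) such a request with the standard library. The hostname, API ID, stage, and path in the usage note are hypothetical placeholders, and the helper name is an assumption for this example.

```python
import urllib.request


def build_private_api_request(
    vpce_hostname: str, api_id: str, stage: str, path: str
) -> urllib.request.Request:
    """Prepare a GET request to a private API through a VPC endpoint hostname.

    The x-apigw-api-id header tells API Gateway which private API the
    request targets, because the endpoint hostname alone does not.
    """
    url = f"https://{vpce_hostname}/{stage}/{path.lstrip('/')}"
    request = urllib.request.Request(url, method="GET")
    request.add_header("x-apigw-api-id", api_id)
    return request
```

For example, `build_private_api_request("vpce-0123abcd-example.execute-api.us-east-1.vpce.amazonaws.com", "a1b2c3d4e5", "prod", "/pets")` returns a request that could be sent with `urllib.request.urlopen` from a client that can reach the endpoint. Passing a Host header with the API's execute-api hostname is the alternative named in the text.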

The header requirement can cause issues when the hostname is used in a web application. For cross-origin, non-simple requests, modern browsers send a preflight request, and page scripts cannot attach custom headers such as x-apigw-api-id to that request.

Amazon Route 53 alias

Each Amazon Route 53 alias is generated when you associate a VPC endpoint with a private API. The association is required every time you create new interface VPC endpoints and private APIs.
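Because each alias is tied to one API and one VPC endpoint, its name can be derived from the two IDs. The sketch below assumes the alias follows the pattern `{api-id}-{vpce-id}.execute-api.{region}.amazonaws.com`; the function name and all example values are hypothetical, so confirm the actual alias shown for your API before relying on it.

```python
def route53_alias_url(api_id: str, vpce_id: str, region: str, stage: str) -> str:
    """Build the invoke URL for a private API through its Route 53 alias.

    Assumes the alias pattern {api-id}-{vpce-id}.execute-api.{region}.amazonaws.com.
    """
    host = f"{api_id}-{vpce_id}.execute-api.{region}.amazonaws.com"
    return f"https://{host}/{stage}"


# Hypothetical IDs for illustration only.
print(route53_alias_url("a1b2c3d4e5", "vpce-0123abcd", "us-east-1", "prod"))
```

With this option, no Host or x-apigw-api-id header is needed, because the API ID is already encoded in the hostname.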

Resource-based policy

Resource-based policies are attached to a resource like a REST API in API Gateway. For resource-based policies, you can specify who has access to the resource and what actions are permitted.

Unlike Regional and edge-optimized endpoint types, private APIs require the use of a resource policy. Deployments without a resource policy will fail. For private APIs, there are additional keys within the condition block you can use in the resource policy, such as aws:SourceVpc and aws:SourceVpce. The aws:SourceVpc condition key allows traffic originating from specific VPCs, and aws:SourceVpce allows traffic originating from specific interface VPC endpoints.
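A resource policy that restricts invocation to specific interface VPC endpoints could look like the following sketch, which builds the policy document as a Python dictionary. The function name and the endpoint ID are hypothetical; the deny-unless-from-endpoint plus allow structure is one common shape, not the only valid one.

```python
import json


def private_api_resource_policy(allowed_vpce_ids: list[str]) -> dict:
    """Build a resource policy that only admits traffic from the given VPC endpoints."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Deny any invocation that does not arrive through an allowed endpoint.
                "Effect": "Deny",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": "execute-api:/*",
                "Condition": {
                    "StringNotEquals": {"aws:SourceVpce": allowed_vpce_ids}
                },
            },
            {
                # Allow everything that was not denied above.
                "Effect": "Allow",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": "execute-api:/*",
            },
        ],
    }


# Hypothetical endpoint ID for illustration only.
print(json.dumps(private_api_resource_policy(["vpce-0123abcd"]), indent=2))
```

To restrict by VPC instead of by endpoint, the same structure can use aws:SourceVpc with a VPC ID in the condition block.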

Private integration

Private integrations allow routing traffic from API Gateway to customers’ VPCs. The integrations are based on VPC links, and rely on a VPC endpoint service that is tied to Network Load Balancers (NLBs) for REST and WebSocket APIs. VPC link integrations work in a similar way to HTTP integrations. A common use case is to invoke applications hosted on Amazon Elastic Compute Cloud (Amazon EC2). Some considerations for private integrations:

- For existing applications with a Classic Load Balancer (CLB) or Application Load Balancer (ALB):
  - Create an NLB in front of a CLB or ALB.
    - This creates an additional network hop and infrastructure cost.
  - Route traffic through NLB instead of CLB or ALB.
    - This requires migration from CLB or ALB to NLB to shift traffic and redesign the existing architecture. Refer to Migrate your Classic Load Balancer for the migration process.
- NLB listener type
  - Transmission Control Protocol (TCP) (Secure Sockets Layer (SSL) passthrough or non-SSL traffic)
  - Transport Layer Security (TLS) (ending the SSL connection on NLB)
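To make the relationship between a REST API method, a VPC link, and the NLB concrete, the sketch below assembles the arguments for a private integration. The keys mirror the parameter names of the boto3 API Gateway client's `put_integration` call, but nothing is sent here; the helper name and every ID and hostname in the usage note are hypothetical.

```python
def vpc_link_integration_params(
    rest_api_id: str,
    resource_id: str,
    http_method: str,
    vpc_link_id: str,
    nlb_dns_name: str,
    path: str,
) -> dict:
    """Assemble keyword arguments for a VPC link (private) proxy integration."""
    return {
        "restApiId": rest_api_id,
        "resourceId": resource_id,
        "httpMethod": http_method,
        # HTTP_PROXY passes the request through to the backend unchanged.
        "type": "HTTP_PROXY",
        "integrationHttpMethod": http_method,
        # VPC_LINK routes the call through the VPC link instead of the internet.
        "connectionType": "VPC_LINK",
        "connectionId": vpc_link_id,
        # Plain HTTP here assumes a TCP listener on the NLB without TLS.
        "uri": f"http://{nlb_dns_name}/{path.lstrip('/')}",
    }
```

With boto3 installed and credentials configured, the result could be passed as `boto3.client("apigateway").put_integration(**params)`. For an NLB with a TLS listener, the URI scheme would be `https` instead.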

Sample architecture patterns

When implementing a private API, using an authorizer such as AWS Identity and Access Management (IAM) is recommended.

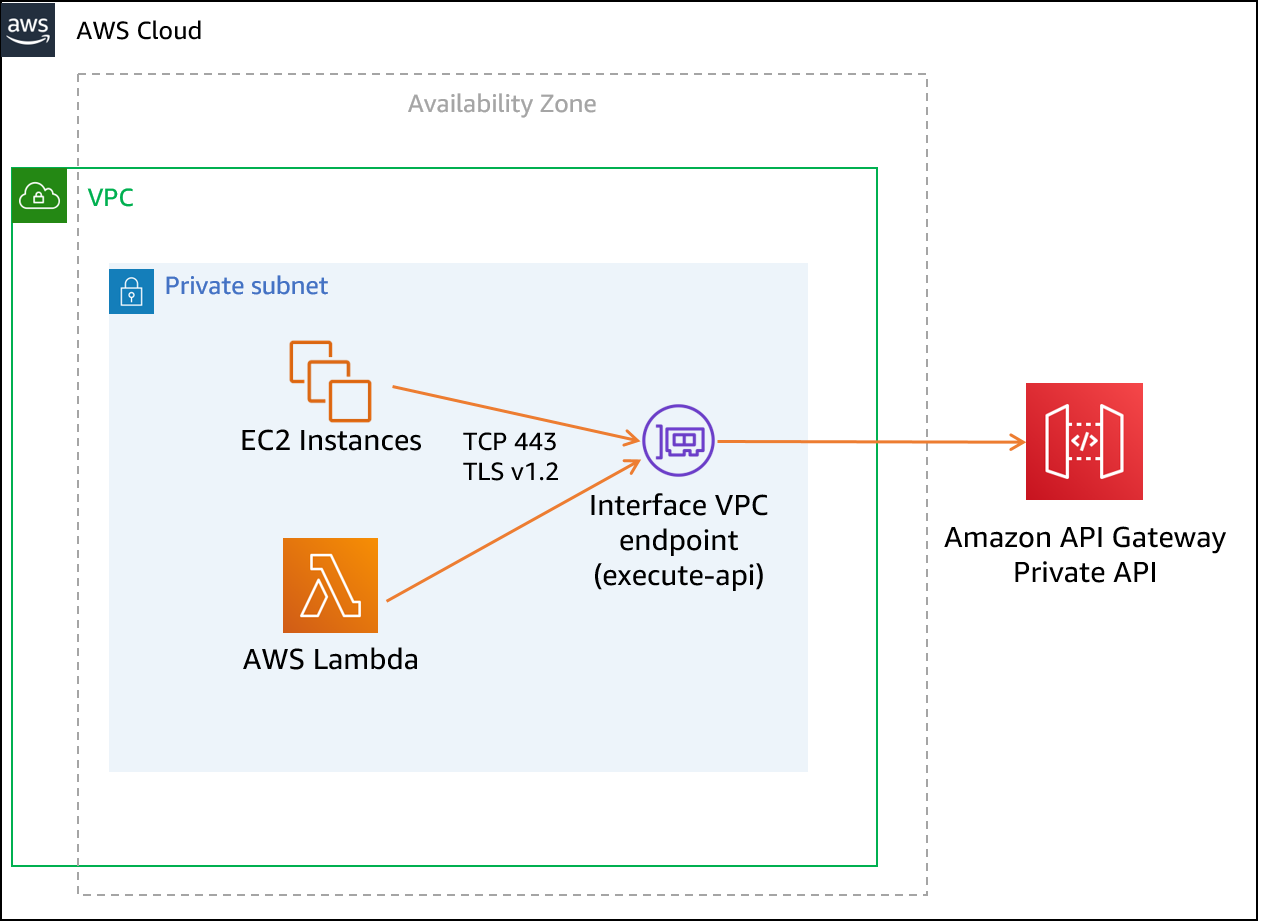

Basic architecture

In the basic architecture, Amazon EC2 instances and VPC-enabled AWS Lambda functions access a private API through an interface VPC endpoint.

REST private API basic architecture

Cross-account architecture

If you want to allow access to a private API from other accounts, an interface VPC endpoint in a different account can be used to invoke the API. However, they both must exist in the same Region.

REST private API cross-account architecture

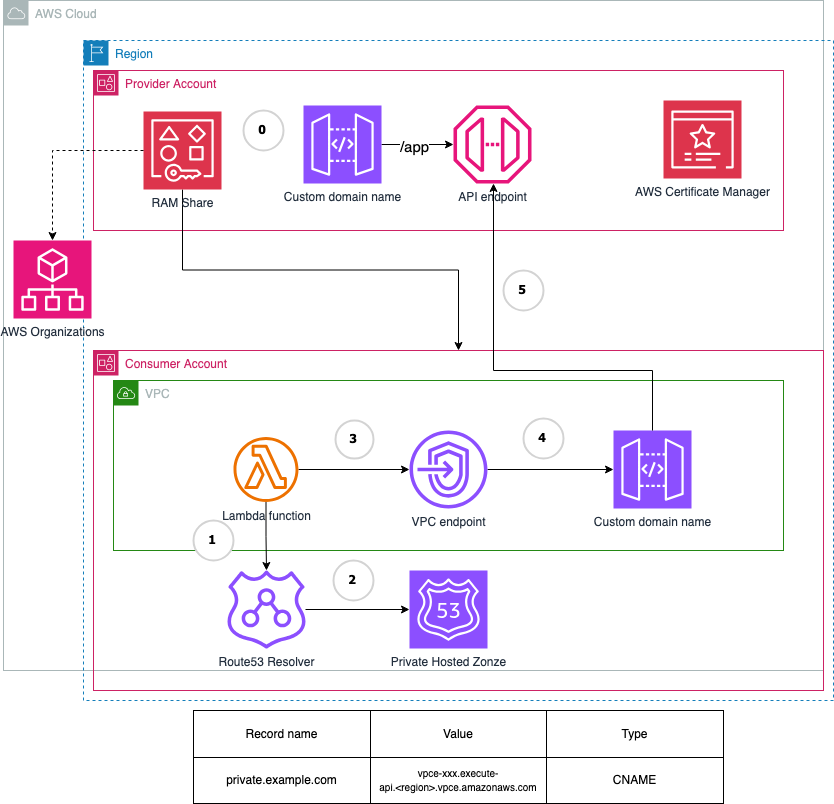

Cross-account architecture with a custom domain name

REST private API cross-account architecture with a custom domain name

The setup of this architecture is the following:

- The API provider creates a custom domain name for a private API in the provider's account. This account and the consumer's account are both managed in AWS Organizations.
- The provider account shares the private custom domain name using AWS RAM.
- The provider updates both the resource policy attached to the private API and the private custom domain name to grant access to the consumer's VPC endpoint to invoke the endpoint.
- A VPC-enabled Lambda function in the consumer's account invokes the private API using the custom domain name.

The numbers in the diagram correspond to the following:

1. A VPC-enabled Lambda function resolves a custom domain.
2. Route 53 private hosted zone has a record for the custom domain name.
3. The Lambda function uses the VPC endpoint for the custom domain name to make an API request.
4. The request reaches the API Gateway custom domain name.
5. The request is routed to the backend API Gateway endpoint.

On-premises architecture

If you have users accessing from on-premises locations, you will need an AWS Direct Connect or VPN connection between the on-premises networks and your VPC. All requests must still go through interface VPC endpoints. For the on-premises architecture, VPC endpoint public DNS hostnames or Route 53 alias records are good options when invoking private APIs. If on-premises users access the network through a web application, Route 53 alias records are a better approach to avoid CORS issues. If the Route 53 alias record option does not work, one solution is to create a conditional forwarder on an on-premises DNS server pointing to a Route 53 Resolver. Refer to Resolving DNS queries between VPCs and your network.

The following diagram shows a sample architecture where on-premises clients access a web application hosted in the on-premises network. The web application uses a private API for its API endpoint. For the private API endpoint, a Route 53 alias is used. Because a Route 53 alias record is publicly resolvable, there is no need to set up a conditional forwarder on on-premises DNS servers to resolve the hostname.

REST private API on-premises architecture

Setup

- The private API is associated with the VPC endpoint vpce-0123abcd. This generates a Route 53 alias to invoke the private API.
- The on-premises network and VPC are connected through Direct Connect.
- On-premises users access a web application hosted in the on-premises network.
- For non-simple requests, a web browser makes a preflight request (OPTIONS) to the private API.
- When the preflight response includes the appropriate CORS headers such as Access-Control-Allow-Origin: *, the web browser makes an HTTP request such as POST on the private API.
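The last two steps can be sketched as a check on the preflight response headers. This is a simplified illustration of the browser's decision that only looks at the allowed origin; real browsers also evaluate the allowed methods and headers. The function name and example origin are hypothetical.

```python
def preflight_allows_origin(response_headers: dict[str, str], origin: str) -> bool:
    """Return True when a preflight response permits requests from origin.

    Header names are compared case-insensitively, as HTTP requires.
    """
    normalized = {name.lower(): value.strip() for name, value in response_headers.items()}
    allowed = normalized.get("access-control-allow-origin")
    return allowed in ("*", origin)
```

For instance, a response carrying `Access-Control-Allow-Origin: *` passes the check for any origin, while a response without that header fails it and the browser would not send the follow-up POST.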

Multi-Region private API gateway

Customers want to build active-active or active-passive multi-Region API deployments for addressing requirements such as failover between Regions, reducing API latency when there are API clients in other Regions, and meeting data sovereignty requirements.

The core solution has private APIs configured with custom domain names for private APIs associated with a certificate from AWS Certificate Manager (ACM). Route 53 routing policies then direct traffic between Regions:

- Failover routing policy: This is used in an active-passive setup where the API Gateway primary Region receives the traffic in normal operation, and the API Gateway secondary Region receives the traffic only when there is a failure in the primary Region. This requires a health check to be configured and enabled in Route 53.
- Weighted routing policy: This is used in an active-active setup where a portion of traffic is always sent to the secondary Region. This can also be configured with a health check, similar to the failover policy, where traffic will only be routed to healthy Regions.
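The two routing policies differ mainly in a few fields of the Route 53 record set. The sketch below builds change entries shaped like those accepted by the Route 53 `ChangeResourceRecordSets` API, without calling AWS. The helper names, domain name, hosted zone IDs, endpoint DNS names, and health check ID are all hypothetical placeholders.

```python
from typing import Optional


def _alias_record(name: str, set_id: str, dns_name: str, zone_id: str) -> dict:
    """Common part of an alias A record that points at a VPC endpoint DNS name."""
    return {
        "Name": name,
        "Type": "A",
        "SetIdentifier": set_id,
        "AliasTarget": {
            "HostedZoneId": zone_id,
            "DNSName": dns_name,
            "EvaluateTargetHealth": True,
        },
    }


def failover_change(name: str, role: str, dns_name: str, zone_id: str,
                    health_check_id: Optional[str] = None) -> dict:
    """Active-passive: role is PRIMARY or SECONDARY."""
    if role not in ("PRIMARY", "SECONDARY"):
        raise ValueError("role must be PRIMARY or SECONDARY")
    record = _alias_record(name, f"{role.lower()}-region", dns_name, zone_id)
    record["Failover"] = role
    if health_check_id:
        record["HealthCheckId"] = health_check_id
    return {"Action": "UPSERT", "ResourceRecordSet": record}


def weighted_change(name: str, set_id: str, weight: int, dns_name: str,
                    zone_id: str) -> dict:
    """Active-active: traffic is split in proportion to each record's weight."""
    if not 0 <= weight <= 255:
        raise ValueError("weight must be between 0 and 255")
    record = _alias_record(name, set_id, dns_name, zone_id)
    record["Weight"] = weight
    return {"Action": "UPSERT", "ResourceRecordSet": record}
```

A failover pair would consist of one `PRIMARY` and one `SECONDARY` change for the same name, while an active-active setup would use two weighted changes, for example weights 80 and 20.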

To resolve the custom domain name, an inbound resolver endpoint is set up in both Regions, which provides two or more private IPs in each VPC across multiple Availability Zones to ensure high availability. This enables the resolution of the custom domain name using the VPC resolver.

The following diagram shows a sample architecture for on-premises clients to access private API Gateway APIs deployed across two AWS Regions. However, the solution described above can equally apply to clients accessing from another VPC or AWS account, with appropriate DNS configurations on the client VPC and an appropriate resource policy on the private API. The on-premises DNS server is configured to forward requests for the private API domain name to the inbound resolver endpoint private IP addresses in the nearest Region, with a fallback IP address pointing to the farther Region.

The solution assumes mechanisms are in place to synchronize state (if any) across Regions for the backend APIs and associated datastores.

Multi-Region API Gateway integrated with on-premises network via Route 53 Resolver

The numbers in the diagram correspond to the following:

1. The application server in the corporate data center needs to resolve an API Gateway custom domain name for private APIs. It sends the query to its pre-configured DNS server.
2. The DNS server in the corporate data center has a forwarding rule that forwards the DNS query for the specified domain name to the Route 53 Resolver inbound endpoint in Region A.
3. The Route 53 Resolver inbound endpoint uses the Route 53 Resolver to resolve the query.
4. The domain name is resolved to the interface VPC endpoint (execute-api) in one of the Regions based on the Route 53 routing policy.
5. The interface VPC endpoint points to the custom domain name.
6. The custom domain name is mapped to a private REST API.
7. API Gateway authenticates the request and sends it to the target service, such as Lambda.

There are also Route 53 inbound resolver endpoints in Region B for redundancy.

Private integration architecture with Amazon ECS

Amazon Elastic Container Service

Cross-VPC ECS access via private integration with private API

Private integration cross-account

Many customers want to use API Gateway with resources that exist in a different AWS account. Although the VPC link must exist in the same account as the API Gateway API, it is still possible to access resources in another account using AWS PrivateLink.

The following diagram shows a sample architecture where a PrivateLink (VPC endpoint service) connection has been established between the Central API Gateway Account and an ECS cluster in Resource Account A and an EC2 Auto Scaling group (ASG) in Resource Account B. Because this is a REST API, the VPC link uses an NLB to point to the private IP addresses of the VPC endpoint for each PrivateLink connection. API Gateway can invoke cross-account Lambda functions without the need for a VPC link by using resource-based policies.

REST private cross-account integration using AWS PrivateLink

In this example, there is no private routing between the different account VPCs. PrivateLink provides a secure private connection to a single endpoint. Example use cases for this architecture include where there are overlapping Classless Inter-Domain Routing (CIDR) ranges between VPCs, or you wish to provide access to only a specific service or application rather than create a route to all resources in another VPC.

Many multi-account customers already have a cross-account VPC architecture in place using VPC peering or AWS Transit Gateway. In this case, the NLB used for the VPC link can be pointed directly to the private IP addresses of the resources in a different account, removing the need for the VPC endpoint and simplifying the architecture. This is shown in the following sample architecture.

REST private cross-account integration using VPC peering