This whitepaper is for historical reference only. Some content might be outdated and some links might not be available.

O-RAN architecture on AWS

As shown in the preceding figure, AWS can provide all required building blocks for O-RAN development and deployment. While the non-RT RIC is located in the Region to take full advantage of AWS data lakes and AI/ML tools, the near-RT RIC and O-CU can be hosted at the edge site using AWS Outposts.

O-RAN reference architecture

O-RAN components on AWS

RIC

Disaggregation of the RAN as defined by the O-RAN Alliance provides an opportunity to use cloud concepts farther away from the radio stations. The RIC, both near-RT and non-RT, provides mobility operators with the ability to customize their RAN, automate their network operations and optimization, and use AI/ML capabilities. The following sections expand on how AWS services enable the RIC architecture.

Radio network conditions are constantly changing based on users’ behaviors, environmental changes, interference, and more. The randomness of the radio channels’ characteristics and the random nature of events impacting signal quality require a RAN that reacts to these events to maximize the quality of its users’ experience. Traditionally, CSPs tackle this problem through centralized self-organizing network (SON) capabilities, and through network equipment provider (NEP) feature delivery and densification. The latter is often limited to individual NEPs, resulting in CSPs choosing radio equipment vendors for entire countries (or regions) rather than mixing and matching based on localized environmental behaviors.

This idea of customizing approaches based on localized environments is the foundational idea behind RIC. As such, the RIC architecture needs to support agility, innovation, and elasticity, while reducing the overall cost and complexity of managing a mobility network. These characteristics are aligned with cloud benefits enabled by AWS.

Near-RT RIC

The near-RT RIC has the following functions:

- Mobility management.
- Radio connection management.
- Quality of Service (QoS) management.
- Interference management.
- Trained models.
- Independent and extensible software plug-ins.

All these functions interact with one another, and are enabled by a common radio network information base. The latter provides near-RT RIC functions with an overview of the network that the RIC supports. This is illustrated in the following figure.

Near-RT RIC components

The near-RT RIC requires rapid, low-latency access to the network. This is enabled by AWS Outposts, a fully managed service that brings the same AWS infrastructure, AWS services, APIs, and tools to CSPs' edge locations. AWS Outposts provides services such as Amazon EKS and Amazon ECS to support container-based applications, Amazon EMR clusters to support data analytics efforts requiring immediate local processing, and Amazon RDS for relational databases.

Near-RT RIC ISVs can use Amazon EKS on Outposts to deliver RIC functions such as QoS management. Amazon EKS enables the near-RT RIC to scale with network conditions, upgrade RIC functions independently of one another, support canary deployments of new RIC versions, and support third-party RAN applications.

Near-RT RIC AWS architecture

Amazon ElastiCache

Amazon RDS provides a scalable, easy-to-set-up, and operationally efficient solution to support static and semi-static network configuration. Amazon RDS on Outposts supports the MySQL and PostgreSQL database engines.

Non-RT RIC

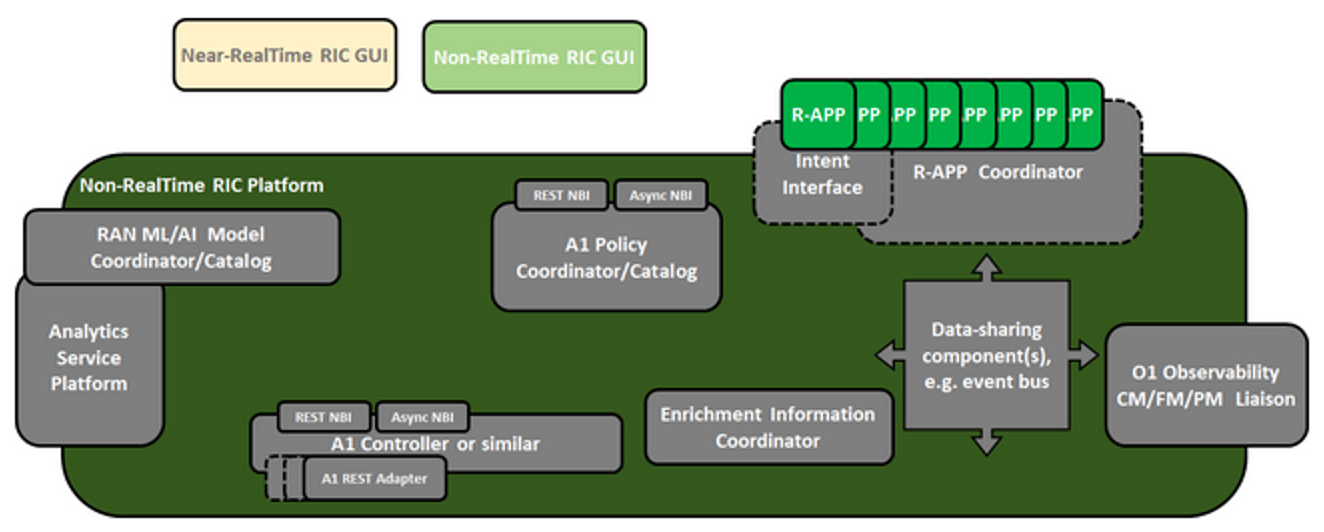

The non-RT RIC provides the intelligence required to optimize the RAN, uses data from across the operation support system (OSS) stack, and has access to AI/ML resources to build a model that can be applied to a given near-RT RIC or to a set of near-RT RICs. As illustrated in the following figure, the non-RT RIC has three logical roles:

- Ingestion of A1 messages.
- Hosting of non-real time applications (R-APP).
- Enrichment of A1 messages.

Non-RT RIC functional view (ONAP)

To support operators that plan for a one-to-many relationship between non-RT RIC and near-RT RIC, you can use AWS services such as Amazon API Gateway, AWS Lambda, AWS Step Functions, and Amazon Simple Queue Service (Amazon SQS):

- Amazon API Gateway provides you with scalable services that make it easy to publish, maintain, and monitor the A1 interfaces between non-RT and near-RT RIC.
- AWS Lambda, a serverless event-driven compute service, provides you with the ability to perform A1 enrichment without having to provision or manage dedicated servers.
- AWS Step Functions provides you with a low-code visual workflow service that helps you orchestrate and automate A1 enrichment procedure.
- Amazon SQS is a fully-managed queueing service that enables you to communicate between R-APP at scale, while allowing you to set different prioritization for given A1 messages, both native and enriched.
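The enrichment and prioritization roles described in this list can be sketched in Python. This is a minimal illustration under stated assumptions, not an AWS or O-RAN reference implementation: the message fields (`policy_id`, `priority`, `cell_id`), the `CELL_CONTEXT` lookup table, and the queue names are all hypothetical. The logic is kept free of AWS SDK calls so that it runs locally; in a deployment it would typically sit inside an AWS Lambda handler, with the selected queue name used when sending the message to Amazon SQS.

```python
import json

# Hypothetical queue names; a real deployment would use its own SQS queues.
HIGH_PRIORITY_QUEUE = "a1-high-priority"
DEFAULT_QUEUE = "a1-default"

# Hypothetical enrichment data, for example looked up from an inventory store.
CELL_CONTEXT = {
    "cell-001": {"site": "edge-site-a", "band": "n78"},
}


def enrich_a1_message(message, context):
    """Return a copy of the A1 message with extra context attached."""
    enriched = dict(message)
    enriched["enrichment"] = context.get(message.get("cell_id"), {})
    # Flag whether any enrichment data was found for this message.
    enriched["enriched"] = bool(enriched["enrichment"])
    return enriched


def select_queue(message):
    """Pick a queue so urgent messages do not wait behind routine ones."""
    if message.get("priority") == "high":
        return HIGH_PRIORITY_QUEUE
    return DEFAULT_QUEUE


def handle(raw_body):
    """Parse, enrich, and route one message.

    Returns a (queue name, JSON body) pair that a caller could pass on
    to its queueing layer.
    """
    message = json.loads(raw_body)
    enriched = enrich_a1_message(message, CELL_CONTEXT)
    return select_queue(enriched), json.dumps(enriched, sort_keys=True)
```

Using one queue per priority level is only one way to express "different prioritization"; the same routing decision could instead drive message attributes or separate consumers.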

The following figure illustrates a reference architecture for developing Non-RT RIC on AWS:

Non-RT RIC on AWS

The AWS Cloud enables you to host modern R-APP applications, specially designed to run on the Non-RT RIC as code by using AWS Lambda. Similarly, Amazon EKS provides you with a managed container service to run and scale your Kubernetes-based R-APP applications.

SMO

Service Management and Orchestration (SMO) can be reduced to:

- Ingestion of OAM data.
- Applications hosting.
- Inventory and configuration data storage.
- API layer.

This section discusses how AWS services help you develop SMOs that benefit from scalability, performance, and reliability, and deploy them in minutes across your entire network. The following figure illustrates an SMO architecture on AWS.

SMO architecture on AWS

Ingestion of O1 messages is facilitated by Amazon API Gateway

Use Amazon EKS

Use AWS Step Functions

Use Amazon EventBridge

AWS purpose-built database services for Graph DB

Amazon API Gateway enables you to integrate SMO with external applications. For example, a REST API can easily be exposed for a service orchestrator to initiate a configuration change. Amazon API Gateway, being a fully managed service, makes it easy for you to develop, create, publish, maintain, monitor, and scale the APIs required northbound and southbound of SMO.
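As a rough sketch of the configuration-change example, the following Python function shows what a handler behind such a REST API might look like. It assumes the API Gateway Lambda proxy integration, in which the HTTP body arrives as a string in `event["body"]` and the function returns a dictionary with `statusCode`, `headers`, and `body`. The request fields (`target`, `parameter`, `value`) and the "accepted" response are hypothetical and stand in for whatever the SMO's orchestration workflow actually requires.

```python
import json


def _response(status_code, payload):
    """Build a response in the Lambda proxy integration format."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    """Validate a configuration-change request forwarded by API Gateway."""
    try:
        request = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})

    if not isinstance(request, dict):
        return _response(400, {"error": "body must be a JSON object"})

    # Hypothetical required fields for a configuration change.
    missing = [k for k in ("target", "parameter", "value") if k not in request]
    if missing:
        return _response(400, {"error": "missing fields", "fields": missing})

    # A real SMO would hand the change to its orchestration workflow here.
    return _response(202, {"status": "accepted", **request})
```

Returning 202 rather than 200 reflects the assumption that the change is applied asynchronously by a downstream workflow.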

O-CU

The O-CU hosts the Radio Resource Control (RRC), Service Data Adaptation Protocol (SDAP), and Packet Data Convergence Protocol (PDCP) protocols, and consists of the O-CU control plane (O-CU-CP) and the O-CU user plane (O-CU-UP). Because the O-CU communicates with the O-DU through the F1 interface and with the Core Network through N2 (for the Access and Mobility Management Function (AMF)) and N3 (for the User Plane Function (UPF)) at low latency, it should be located at the edge data center.

AWS Outposts

From the perspective of the protocol stack, the O-RAN Central Unit control plane (O-CU-CP) hosts the RRC and the control plane part of the PDCP protocol, while the O-CU-UP hosts the user plane part of the PDCP protocol and the SDAP protocol. Unlike the O-DU and O-RU, the O-CU deals with upper-layer protocol stacks, which makes it independent of the complex physical layer. This enables you to virtualize and containerize the O-CU easily, as you can with 5G Core Network function components.
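The protocol split described above can be written down as a small lookup table. This is only an illustrative sketch: the labels `PDCP-C` and `PDCP-U` are shorthand introduced here for the control plane and user plane parts of PDCP, and the helper function name is hypothetical.

```python
# Protocols hosted by each O-CU component, following the description above.
# "PDCP-C" and "PDCP-U" are shorthand labels used only in this sketch.
O_CU_PROTOCOLS = {
    "O-CU-CP": ("RRC", "PDCP-C"),
    "O-CU-UP": ("SDAP", "PDCP-U"),
}


def component_hosting(protocol):
    """Return the O-CU component that hosts the given protocol, or None."""
    for component, protocols in O_CU_PROTOCOLS.items():
        if protocol in protocols:
            return component
    return None
```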

Because the O-CU has to cover an aggregated set of O-DUs, scalable, elastic, and flexible use of compute and storage resources brings a significant benefit in resource utilization efficiency and cost optimization. To maximize this benefit at the software architecture level, a container-based implementation is often chosen. For the orchestration of containers, Kubernetes is often selected, not only by 5G Core Network providers, but also by O-RAN software providers.

AWS provides Amazon EKS

As with Core Network functions, O-CU is often required to support network segmentation

For container storage, the Amazon EBS CSI driver provides a Container Storage Interface (CSI) that allows Amazon EKS clusters to manage the lifecycle of Amazon EBS volumes for persistent volumes. In the AWS environment, the O-CU container image and Helm chart can be stored and managed in Amazon Elastic Container Registry.
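To make the persistent volume point concrete, the following sketch builds a Kubernetes StorageClass that uses the Amazon EBS CSI driver (provisioner `ebs.csi.aws.com`) and a PersistentVolumeClaim that refers to it, then renders both as JSON, which `kubectl apply -f` accepts alongside YAML. The resource names, the `gp2` volume type, and the 20 Gi size are assumptions chosen for illustration, not values taken from this whitepaper.

```python
import json

# StorageClass backed by the Amazon EBS CSI driver.
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "ocu-ebs"},      # hypothetical name
    "provisioner": "ebs.csi.aws.com",     # Amazon EBS CSI driver
    "parameters": {"type": "gp2"},        # assumed EBS volume type
    # Delay provisioning until a pod is scheduled, so the volume is
    # created in the same location as the pod that uses it.
    "volumeBindingMode": "WaitForFirstConsumer",
}

# Claim that an O-CU pod could mount for persistent data.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "ocu-data"},     # hypothetical name
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": storage_class["metadata"]["name"],
        "resources": {"requests": {"storage": "20Gi"}},  # assumed size
    },
}


def render_manifests(*manifests):
    """Serialize the manifests as a single Kubernetes List in JSON."""
    bundle = {"apiVersion": "v1", "kind": "List", "items": list(manifests)}
    return json.dumps(bundle, indent=2)


if __name__ == "__main__":
    print(render_manifests(storage_class, pvc))
```

The printed output can be saved to a file and applied to a cluster where the EBS CSI driver is already installed.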

This full stack of AWS tools for building O-CU on AWS, from the bottom layer (AWS Outposts) to the O-CU application at the top and the additional monitoring and orchestration layers (AWS CloudFormation, Amazon CloudWatch), is shown at a high level in the following figure.

Full-stack view for O-CU design in AWS

O-DU and O-RU

The O-DU implements the Radio Link Control (RLC), Medium Access Control (MAC), and physical layers defined in the 3GPP specifications. The RLC builds information packets and recovers transmission losses by retransmitting lost packets. The MAC layer controls the physical layer, and allocates radio resources to transmit and receive packets over the air. The physical layer in the O-DU is responsible for link connectivity. Its performance dominates wireless link throughput and coverage, which are the factors that most affect user experience. The physical layer consumes heavy computational power for complex channel estimation and interference cancellation to improve performance. To relieve this computational load, hardware acceleration techniques are commonly used in the O-DU.

AWS infrastructure and services bring significant advantages and benefits to CSPs. AWS provides tightly integrated hardware infrastructure and platform software by working with multiple hardware and RAN vendors. AWS management control, programmable APIs, and tools and services such as Amazon CloudWatch

The O-DU can run on Amazon EKS Anywhere. AWS provides Amazon EKS Anywhere