This whitepaper is for historical reference only. Some content might be outdated and some links might not be available.

Workflow orchestration

ETL operations are the backbone of a data lake. ETL workflows often involve orchestrating and monitoring the execution of many sequential and parallel data processing tasks. As the volume of data grows, game developers need to process it quickly so that they can make faster, well-informed design and business decisions. To process data at scale, game developers need to elastically provision resources to manage the data coming from increasingly diverse sources, and they often end up building complicated data pipelines.

AWS provides managed orchestration services for these workflows, such as AWS Step Functions and Amazon Managed Workflows for Apache Airflow (MWAA).

AWS Step Functions

Step Functions scales horizontally and provides fault-tolerant workflows. You can process data faster using parallel transformations or dynamic parallelism, and it lets you easily retry failed transformations, or choose a specific way to handle errors without the need to manage a complex process. Step Functions manages state, checkpoints, and restarts for you to make sure that your workflows run in order. Step Functions can be integrated with a wide variety of AWS services including:

- Amazon Elastic Container Service (Amazon ECS)
- Amazon Simple Queue Service (Amazon SQS)
- Amazon Simple Notification Service (Amazon SNS)
- Amazon DynamoDB, and more
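The parallel transformations, retries, and error handling described above can be sketched as an Amazon States Language definition. The following is a minimal illustration, not a reference implementation: the state names, the function name `build_etl_definition`, and the three Lambda function ARNs passed in are hypothetical, and the retry values are arbitrary.

```python
import json


def build_etl_definition(clean_fn_arn, enrich_fn_arn, notify_fn_arn):
    """Return an Amazon States Language definition as a Python dict.

    All three ARNs are hypothetical Lambda functions supplied by the caller.
    """
    # Retry a failed task up to 3 times with exponential backoff (5s, 10s, 20s).
    retry_policy = [{
        "ErrorEquals": ["States.TaskFailed"],
        "IntervalSeconds": 5,
        "MaxAttempts": 3,
        "BackoffRate": 2.0,
    }]

    def task(fn_arn):
        # A single-step branch: invoke the function, retrying on failure.
        return {"Type": "Task", "Resource": fn_arn,
                "Retry": retry_policy, "End": True}

    return {
        "Comment": "Sketch: two transformations run in parallel",
        "StartAt": "TransformInParallel",
        "States": {
            "TransformInParallel": {
                "Type": "Parallel",
                "Branches": [
                    {"StartAt": "CleanEvents",
                     "States": {"CleanEvents": task(clean_fn_arn)}},
                    {"StartAt": "EnrichEvents",
                     "States": {"EnrichEvents": task(enrich_fn_arn)}},
                ],
                # If any branch still fails after its retries, route to a handler.
                "Catch": [{"ErrorEquals": ["States.ALL"],
                           "Next": "NotifyFailure"}],
                "End": True,
            },
            "NotifyFailure": {"Type": "Task", "Resource": notify_fn_arn,
                              "End": True},
        },
    }


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    print(json.dumps(build_etl_definition("CLEAN_FN_ARN", "ENRICH_FN_ARN",
                                          "NOTIFY_FN_ARN"), indent=2))
```

The resulting JSON could be pasted into the Step Functions console or passed as the definition when creating a state machine.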

Step Functions has two workflow types:

- Standard Workflows have exactly-once workflow execution, and can run for up to one year.
- Express Workflows have at-least-once workflow execution, and can run for up to five minutes.

Standard Workflows are ideal for long-running, auditable workflows, as they show execution history and visual debugging. Express Workflows are ideal for high-event-rate workloads, such as streaming data processing. Your state machine executions behave differently depending on which type you select. Refer to Standard vs. Express Workflows for details.
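As a rough illustration of the choice between the two types, the sketch below encodes only the duration limits and execution guarantees stated above. The function name `choose_workflow_type` and its parameters are hypothetical; the returned strings match the `STANDARD` and `EXPRESS` values that Step Functions accepts as a state machine type. A real decision would also weigh cost, event rate, and auditing needs.

```python
from datetime import timedelta

# Limits taken from the workflow type descriptions above.
EXPRESS_MAX_DURATION = timedelta(minutes=5)
STANDARD_MAX_DURATION = timedelta(days=365)


def choose_workflow_type(expected_duration, needs_exactly_once):
    """Pick a workflow type from duration and execution-guarantee needs."""
    if expected_duration > STANDARD_MAX_DURATION:
        raise ValueError("workflow exceeds the one-year Standard limit")
    # Express runs at-least-once and is capped at five minutes.
    if needs_exactly_once or expected_duration > EXPRESS_MAX_DURATION:
        return "STANDARD"
    return "EXPRESS"


if __name__ == "__main__":
    # A nightly batch ETL that must not run twice.
    print(choose_workflow_type(timedelta(hours=2), needs_exactly_once=True))
    # A short, idempotent transformation applied to streaming events.
    print(choose_workflow_type(timedelta(seconds=30), needs_exactly_once=False))
```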

Depending on your data processing needs, Step Functions directly integrates with other data processing services provided by AWS, such as AWS Batch for batch processing, Amazon EMR for big data processing, AWS Glue for data preparation, Amazon Athena for data analysis, and AWS Lambda for compute. The sample project Run ETL/ELT workflows using Amazon Redshift (Lambda, Amazon Redshift Data API) demonstrates how to use Step Functions and the Amazon Redshift Data API to run an ETL/ELT workflow that loads data into the Amazon Redshift data warehouse. The sample project Manage an Amazon EMR Job demonstrates the integration between Amazon EMR and AWS Step Functions.

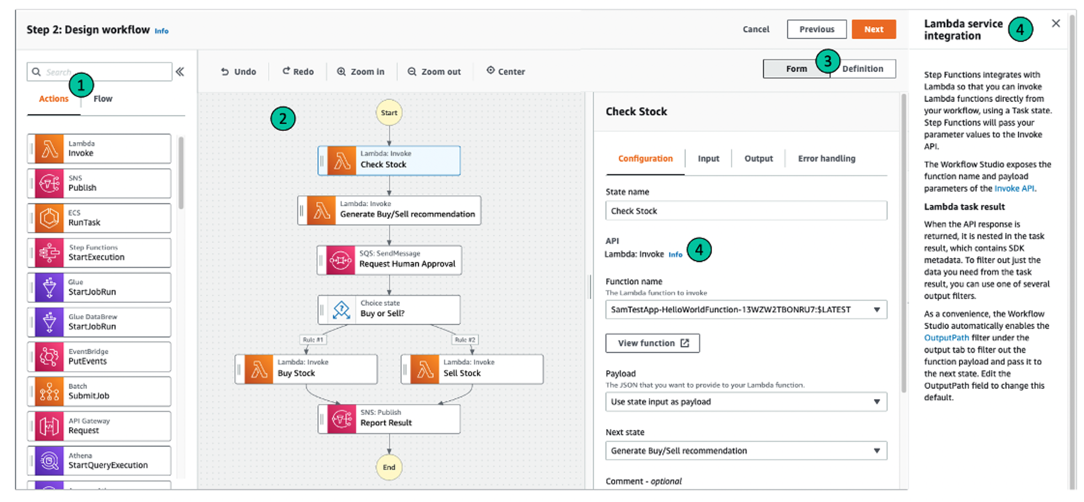
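The direct service integrations mentioned above can be illustrated with a two-step definition that runs an AWS Glue job and then an Amazon Athena query. This is a sketch under assumptions: the function name, state names, job name, query, and output location are all hypothetical placeholders. The `.sync` suffix on each resource asks Step Functions to wait for the job or query to finish before moving to the next state.

```python
import json


def build_glue_athena_definition(glue_job_name, athena_query, athena_output_s3):
    """Return a definition that prepares data with Glue, then queries it with Athena.

    All three arguments are caller-supplied placeholders.
    """
    return {
        "Comment": "Sketch: Glue data preparation followed by an Athena query",
        "StartAt": "PrepareData",
        "States": {
            "PrepareData": {
                "Type": "Task",
                # Optimized integration: start the Glue job and wait for it.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job_name},
                "Next": "AnalyzeData",
            },
            "AnalyzeData": {
                "Type": "Task",
                # Optimized integration: run the query and wait for completion.
                "Resource": "arn:aws:states:::athena:startQueryExecution.sync",
                "Parameters": {
                    "QueryString": athena_query,
                    "ResultConfiguration": {"OutputLocation": athena_output_s3},
                },
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    definition = build_glue_athena_definition(
        glue_job_name="prepare-game-events",             # hypothetical job
        athena_query="SELECT 1",                         # placeholder query
        athena_output_s3="s3://EXAMPLE-BUCKET/results/",  # placeholder location
    )
    print(json.dumps(definition, indent=2))
```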

Workflow Studio for AWS Step Functions is a low-code visual workflow designer that lets you create serverless workflows by orchestrating AWS services. It reduces the time game developers spend writing configuration code for workflow definitions and building data transformations, so they can focus on building better gameplay. Use drag-and-drop to create and edit workflows, control how input and output is filtered or transformed for each state, and configure error handling. As you create a workflow, Workflow Studio validates your work and generates code.

Sample design workflow

Amazon Managed Workflows for Apache Airflow (MWAA)

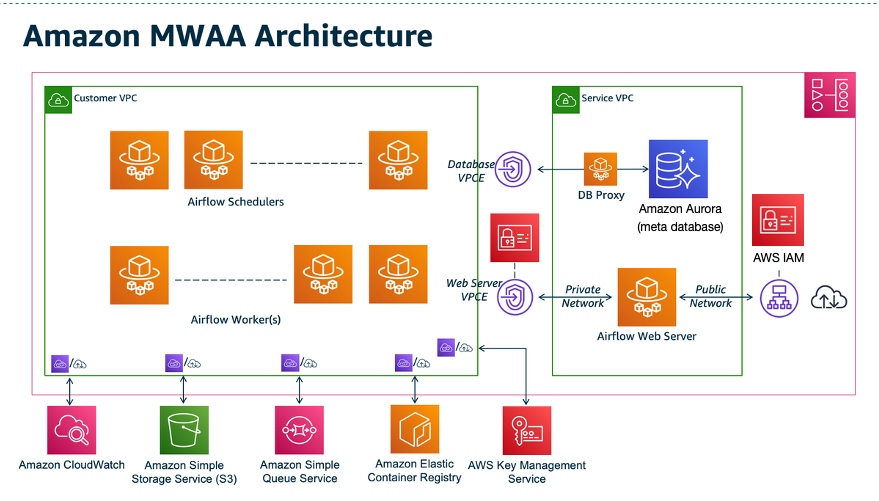

The auto scaling mechanism of Amazon MWAA automatically increases the number of Apache Airflow workers in response to queued tasks and removes extra workers when there are no more tasks queued or running. You can configure task parallelism, auto scaling, and concurrency settings directly on the Amazon MWAA console.
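The same settings can also be applied programmatically rather than on the console. The sketch below only builds the keyword arguments for the `update_environment` call of the boto3 `mwaa` client; the helper name `build_scaling_update`, the environment name, and the chosen values are hypothetical. Before applying such an update, check how it interacts with configuration options already set on the environment.

```python
def build_scaling_update(environment_name, min_workers, max_workers, parallelism):
    """Build keyword arguments for the boto3 MWAA update_environment call."""
    if not 1 <= min_workers <= max_workers:
        raise ValueError("expected 1 <= min_workers <= max_workers")
    return {
        "Name": environment_name,
        # Bounds used by auto scaling when adding or removing workers.
        "MinWorkers": min_workers,
        "MaxWorkers": max_workers,
        # Airflow configuration options are passed as string key/value pairs.
        "AirflowConfigurationOptions": {"core.parallelism": str(parallelism)},
    }


if __name__ == "__main__":
    kwargs = build_scaling_update("game-analytics-env", 1, 10, 64)
    print(kwargs)
    # With credentials configured, the update could be applied like this:
    #   import boto3
    #   boto3.client("mwaa").update_environment(**kwargs)
```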

Amazon MWAA orchestrates and schedules your workflows by using Directed Acyclic Graphs (DAGs) written in Python. To run DAGs in an Amazon MWAA environment, you copy your files to Amazon S3, then tell Amazon MWAA where your DAGs and supporting files are located by using the Amazon MWAA console. Amazon MWAA takes care of synchronizing the DAGs among workers, schedulers, and the web server.
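A minimal sketch of this flow follows: a small DAG with three sequential tasks, and a helper that copies it to the DAGs folder of an S3 bucket. The DAG is held in a string here only to keep the example self-contained; in practice it would be a `.py` file. The DAG id, task commands, bucket name, `dags` prefix, and the helper `upload_dag` are all hypothetical, and the helper accepts any client object that provides the S3 `put_object` method.

```python
DAG_SOURCE = '''\
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

with DAG(
    dag_id="game_events_etl",
    start_date=datetime(2024, 1, 1),
    schedule="@daily",  # Airflow releases before 2.4 use schedule_interval
    catchup=False,
) as dag:
    extract = BashOperator(task_id="extract", bash_command="echo extract")
    transform = BashOperator(task_id="transform", bash_command="echo transform")
    load = BashOperator(task_id="load", bash_command="echo load")

    # Run the three steps sequentially.
    extract >> transform >> load
'''


def upload_dag(s3_client, bucket, filename, source, dags_prefix="dags"):
    """Copy DAG source code to the DAGs folder of the environment's bucket."""
    key = f"{dags_prefix.strip('/')}/{filename}"
    s3_client.put_object(Bucket=bucket, Key=key, Body=source.encode("utf-8"))
    return key


# With credentials configured, the upload could be run like this:
#   import boto3
#   upload_dag(boto3.client("s3"), "EXAMPLE-MWAA-BUCKET",
#              "game_events_etl.py", DAG_SOURCE)
```

The prefix passed to the helper should match the DAGs folder configured for the environment.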

All of the components contained in the MWAA section appear as a single Amazon MWAA environment in your account.

Amazon MWAA supports open-source integrations with Amazon Athena, AWS Batch, Amazon CloudWatch, Amazon DynamoDB, AWS DataSync, Amazon EMR, AWS Fargate, Amazon EKS, Amazon Data Firehose, AWS Glue, AWS Lambda, Amazon Redshift, Amazon SQS, Amazon SNS, Amazon SageMaker AI, and Amazon S3, as well as hundreds of built-in and community-created operators and sensors and third-party tools such as Apache Hadoop, Presto, Hive, and Spark to perform data processing tasks.

Code examples are available for faster integration. For example, Using Amazon MWAA with Amazon EMR demonstrates how to enable an integration using Amazon EMR and Amazon MWAA. Creating a custom plugin with Apache Hive and Hadoop walks you through the steps to create a custom plugin using Apache Hive and Hadoop on an Amazon MWAA environment.