Create an API-driven resource orchestration framework using GitHub Actions and Terragrunt

Tamilselvan P, Abhigyan Dandriyal, Sandeep Gawande, and Akash Kumar, Amazon Web Services

Summary

This pattern uses GitHub Actions workflows to automate resource provisioning through standardized JSON payloads, eliminating the need for manual configuration. The automated pipeline manages the complete deployment lifecycle and can integrate with various frontend systems, from custom UI components to ServiceNow. Users can interact with the system through their preferred interfaces while the underlying processes remain standardized.

The configurable pipeline architecture can be adapted to meet different organizational requirements. The example implementation focuses on provisioning Amazon Virtual Private Cloud (Amazon VPC) and Amazon Simple Storage Service (Amazon S3) resources. The pattern addresses common cloud resource management challenges by standardizing requests across the organization and providing consistent integration points, which makes it easier for teams to request and manage resources.

Prerequisites and limitations

Prerequisites

An active AWS account

An active GitHub account with access to the configured repository

Limitations

New resources require manual addition of terragrunt.hcl files to the repository configuration.

Some AWS services aren’t available in all AWS Regions. For Region availability, see AWS Services by Region. For specific endpoints, see Service endpoints and quotas, and choose the link for the service.

Architecture

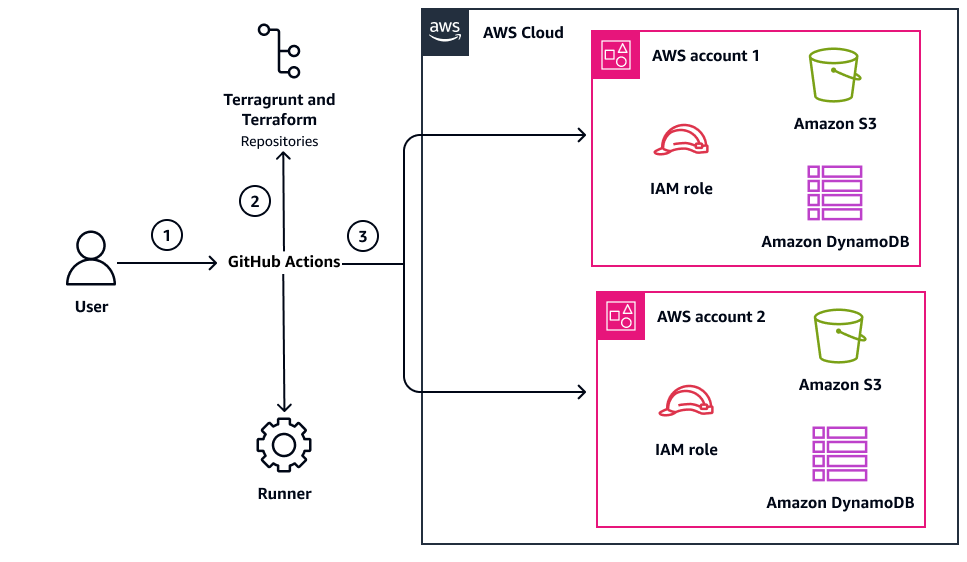

The following diagram shows the components and workflow of this pattern.

The architecture diagram shows the following actions:

The user submits a JSON payload to GitHub Actions, triggering the automation pipeline.

The GitHub Actions pipeline retrieves the required resource code from the Terragrunt and Terraform repositories, based on the payload specifications.

The pipeline assumes the appropriate AWS Identity and Access Management (IAM) role using the specified AWS account ID. Then, the pipeline deploys the resources to the target AWS account and manages Terraform state using the account-specific Amazon S3 bucket and Amazon DynamoDB table.

Each AWS account contains IAM roles for secure access, an Amazon S3 bucket for Terraform state storage, and a DynamoDB table for state locking. Because these resources are dedicated to each account, the pipeline can deploy resources across AWS accounts in a controlled, automated way while maintaining state management and access control in each account.

Tools

AWS services

Amazon DynamoDB is a fully managed NoSQL database service that provides fast, predictable, and scalable performance.

AWS Identity and Access Management (IAM) helps you securely manage access to your AWS resources by controlling who is authenticated and authorized to use them.

Amazon Simple Storage Service (Amazon S3) is a cloud-based object storage service that helps you store, protect, and retrieve any amount of data.

Amazon Virtual Private Cloud (Amazon VPC) helps you launch AWS resources into a virtual network that you’ve defined. This virtual network resembles a traditional network that you’d operate in your own data center, with the benefits of using the scalable infrastructure of AWS.

Other tools

GitHub Actions is a continuous integration and continuous delivery (CI/CD) platform that’s tightly integrated with GitHub repositories. You can use GitHub Actions to automate your build, test, and deployment pipeline.

Terraform is an infrastructure as code (IaC) tool from HashiCorp that helps you create and manage cloud and on-premises resources.

Terragrunt is an orchestration tool that extends both OpenTofu and Terraform capabilities. It manages how generic infrastructure patterns are applied, making it easier to scale and maintain large infrastructure estates.

Code repository

The code for this pattern is available in the GitHub sample-aws-orchestration-pipeline-terraform repository.

Best practices

Store AWS credentials and sensitive data using GitHub repository secrets for secure access.

Configure the OpenID Connect (OIDC) provider for GitHub Actions to assume the IAM role, avoiding static credentials.

Follow the principle of least privilege and grant the minimum permissions required to perform a task. For more information, see Grant least privilege and Security best practices in the IAM documentation.
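The OIDC and least-privilege practices above can be illustrated with a minimal sketch of the trust policy that an IAM role might carry. The account ID and the `example-org/example-repo` repository name are placeholders, and the helper function is hypothetical. The pattern's repository may define its roles differently.

```python
import json

# Host name of the GitHub Actions OIDC identity provider.
GITHUB_OIDC_HOST = "token.actions.githubusercontent.com"


def github_oidc_trust_policy(account_id: str, repo: str) -> dict:
    """Build a trust policy that lets workflows in `repo` assume the role.

    `account_id` and `repo` are illustrative inputs, not values from the pattern.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{GITHUB_OIDC_HOST}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # Only tokens issued for AWS STS are accepted.
                    "StringEquals": {f"{GITHUB_OIDC_HOST}:aud": "sts.amazonaws.com"},
                    # Restrict the role to a single repository (least privilege).
                    "StringLike": {f"{GITHUB_OIDC_HOST}:sub": f"repo:{repo}:*"},
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = github_oidc_trust_policy("111122223333", "example-org/example-repo")
    print(json.dumps(policy, indent=2))
```

Narrowing the `sub` condition further, for example to a single branch, would tighten access but depends on how the workflows are triggered.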

Epics

| Task | Description | Skills required |
|---|---|---|
| Initialize the GitHub repository. | To initialize the GitHub repository, use the following steps: | DevOps engineer |
| Configure the IAM roles and permissions. | To configure the IAM roles and permissions, use the following steps: | DevOps engineer |
| Set up GitHub secrets and variables. | For instructions about how to set up repository secrets and variables in the GitHub repository, see Creating configuration variables for a repository. | DevOps engineer |
| Create the repository structure. | To create the repository structure, use the following steps: | DevOps engineer |

| Task | Description | Skills required |
|---|---|---|
| Execute the pipeline using curl. | To execute the pipeline by using curl<br>For more information about the pipeline execution process, see Additional information. | DevOps engineer |
| Validate results of the pipeline execution. | To validate the results, use the following steps:<br>You can also cross-verify the created resources by using the | DevOps engineer |
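As a rough illustration of what the curl call does, the following sketch builds the equivalent HTTP request in Python without sending it. It targets the GitHub REST endpoint for workflow dispatch events. The owner, repository, workflow file name, and token are placeholders, and the `build_dispatch_request` helper is hypothetical. Whether the workflow accepts nested objects under `inputs` depends on how its inputs are declared, so treat the body shape as an assumption taken from the sample payload in this pattern.

```python
import json
import urllib.request


def build_dispatch_request(owner, repo, workflow_file, token, payload):
    """Build (but do not send) a workflow_dispatch request for GitHub Actions.

    All arguments are illustrative; `payload` is expected to contain the
    "ref" and "inputs" keys shown in the pattern's sample payload.
    """
    url = (
        f"https://api.github.com/repos/{owner}/{repo}"
        f"/actions/workflows/{workflow_file}/dispatches"
    )
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    request = build_dispatch_request(
        "example-org", "example-repo", "deploy.yml", "dummy-token",
        {"ref": "main", "inputs": {}},
    )
    print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. In practice, the token would come from a secret store rather than from source code.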

| Task | Description | Skills required |
|---|---|---|
| Submit a cleanup request. | To delete resources that are no longer required, use the following steps: | DevOps engineer |
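One plausible way to prepare a cleanup request is to reuse the payload that created the resource and switch its RequestType to delete, which is one of the operation types that the payload supports. This is a sketch under that assumption. The `to_cleanup_payload` helper is hypothetical, and the actual cleanup steps in the repository may differ.

```python
import copy


def to_cleanup_payload(create_payload: dict) -> dict:
    """Return a copy of `create_payload` with RequestType set to "delete".

    The original payload is left unchanged so that it can still be audited.
    """
    cleanup = copy.deepcopy(create_payload)
    cleanup["inputs"]["RequestParameters"]["RequestType"] = "delete"
    return cleanup


if __name__ == "__main__":
    original = {
        "ref": "main",
        "inputs": {
            "RequestParameters": {"RequestId": "1111111", "RequestType": "create"},
            "ResourceParameters": [{"VPC": {"vpc_cidr": "10.0.0.0/16"}}],
        },
    }
    print(to_cleanup_payload(original)["inputs"]["RequestParameters"])
```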

Related resources

AWS Blogs

AWS service documentation

GitHub resources

Additional information

Pipeline execution process

The pipeline execution consists of the following steps:

Validates JSON payload format - Ensures that the incoming JSON configuration is properly structured and contains all required parameters

Assumes specified IAM role - Authenticates and assumes the required IAM role for AWS operations

Downloads required Terraform and Terragrunt code - Retrieves the specified version of resource code and dependencies

Executes resource deployment - Applies the configuration to deploy or update AWS resources in the target environment
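The first step, payload validation, can be sketched as follows. The field names come from the sample payload in this section. Treating every RequestParameters field as required is an assumption made for illustration, and `validate_payload` is a hypothetical helper, not the pipeline's actual validator.

```python
# Field names taken from the sample payload; treating all of them as
# required is an assumption for this sketch.
REQUIRED_REQUEST_FIELDS = (
    "RequestId", "RequestType", "ResourceType", "AccountId", "AccountAlias",
    "RegionId", "ApplicationName", "DivisionName", "EnvironmentId", "Suffix",
)
ALLOWED_REQUEST_TYPES = {"create", "update", "delete"}


def validate_payload(payload: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    if not isinstance(payload.get("ref"), str) or not payload["ref"]:
        errors.append("ref must be a non-empty string")

    inputs = payload.get("inputs")
    if not isinstance(inputs, dict):
        errors.append("inputs must be an object")
        return errors

    request = inputs.get("RequestParameters")
    if not isinstance(request, dict):
        errors.append("inputs.RequestParameters must be an object")
    else:
        for field in REQUIRED_REQUEST_FIELDS:
            if not request.get(field):
                errors.append(f"RequestParameters.{field} is required")
        request_type = request.get("RequestType")
        if request_type and request_type not in ALLOWED_REQUEST_TYPES:
            errors.append(f"RequestParameters.RequestType '{request_type}' is not supported")

    if not isinstance(inputs.get("ResourceParameters"), list):
        errors.append("inputs.ResourceParameters must be a list")
    return errors
```

Returning all problems at once, rather than failing on the first one, lets the caller report a complete list back to the requesting frontend.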

Sample payload used for VPC creation

The following example code shows how the names of the Terraform backend state bucket and lock table are derived from the payload:

state_bucket_name = "${local.payload.ApplicationName}-${local.payload.EnvironmentId}-tfstate"

lock_table_name = "${local.payload.ApplicationName}-${local.payload.EnvironmentId}-tfstate-lock"
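To make the naming rule concrete, the following sketch mirrors the two interpolations above in Python and applies them to the ApplicationName and EnvironmentId values from the sample payload. The `backend_names` helper is hypothetical and exists only for illustration.

```python
def backend_names(request_parameters: dict) -> dict:
    """Derive the state bucket and lock table names from RequestParameters."""
    prefix = f"{request_parameters['ApplicationName']}-{request_parameters['EnvironmentId']}"
    return {
        "state_bucket_name": f"{prefix}-tfstate",
        "lock_table_name": f"{prefix}-tfstate-lock",
    }


if __name__ == "__main__":
    names = backend_names({"ApplicationName": "myapp", "EnvironmentId": "dev"})
    print(names["state_bucket_name"])  # myapp-dev-tfstate
    print(names["lock_table_name"])    # myapp-dev-tfstate-lock
```

Because Amazon S3 bucket names are globally unique, an ApplicationName and EnvironmentId combination that is already taken elsewhere would need a different naming scheme.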

The following example payload creates a VPC, where vpc_cidr defines the CIDR block for the VPC. The Terraform state bucket is mapped to a variable defined in the Terraform files. The ref parameter contains the name of the branch whose code the pipeline executes.

    {
      "ref": "main",
      "inputs": {
        "RequestParameters": {
          "RequestId": "1111111",
          "RequestType": "create",
          "ResourceType": "vpc",
          "AccountId": "1234567890",
          "AccountAlias": "account-alias",
          "RegionId": "us-west-2",
          "ApplicationName": "myapp",
          "DivisionName": "division-name",
          "EnvironmentId": "dev",
          "Suffix": "poc"
        },
        "ResourceParameters": [
          {
            "VPC": {
              "vpc_cidr": "10.0.0.0/16"
            }
          }
        ]
      }
    }

RequestParameters is used to track the request status in the pipeline, and the Terraform state (tfstate) is created based on this information. It contains the following metadata and control information:

RequestId – Unique identifier for the request

RequestType – Type of operation (create, update, or delete)

ResourceType – Type of resource to be provisioned

AccountId – Target AWS account for deployment

AccountAlias – Friendly name for the AWS account

RegionId – AWS Region for resource deployment

ApplicationName – Name of the application

DivisionName – Organization division

EnvironmentId – Environment (for example, dev and prod)

Suffix – Additional identifier for the resources

ResourceParameters contain resource-specific configuration that maps to variables defined in the Terraform files. Any custom variables that need to be passed to the Terraform modules should be included in ResourceParameters. The parameter vpc_cidr is mandatory for Amazon VPC.
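The mapping from ResourceParameters to Terraform variables can be sketched as follows. The sketch assumes that values are passed through Terraform's `TF_VAR_<name>` environment variable convention, which is one common mechanism. The pattern's pipeline might pass variables differently, and both helper functions are hypothetical. The CIDR check uses the Python standard library `ipaddress` module.

```python
import ipaddress


def resource_parameters_to_env(resource_parameters: list) -> dict:
    """Flatten ResourceParameters into TF_VAR_* environment variables.

    Only scalar values are handled here; lists or maps would need to be
    encoded in a syntax that Terraform can parse.
    """
    env = {}
    for entry in resource_parameters:
        for _resource_name, variables in entry.items():
            for name, value in variables.items():
                env[f"TF_VAR_{name}"] = str(value)
    return env


def require_vpc_cidr(resource_parameters: list) -> ipaddress.IPv4Network:
    """Return the parsed vpc_cidr, or raise ValueError if missing or invalid."""
    for entry in resource_parameters:
        vpc = entry.get("VPC")
        if vpc and "vpc_cidr" in vpc:
            # Raises ValueError for malformed CIDRs or CIDRs with host bits set.
            return ipaddress.IPv4Network(vpc["vpc_cidr"])
    raise ValueError("vpc_cidr is mandatory for Amazon VPC")


if __name__ == "__main__":
    params = [{"VPC": {"vpc_cidr": "10.0.0.0/16"}}]
    print(require_vpc_cidr(params))
    print(resource_parameters_to_env(params))
```

Checking the CIDR block before Terraform runs gives the requester a faster and clearer error than a failed plan would.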