Workloads OU - Application account


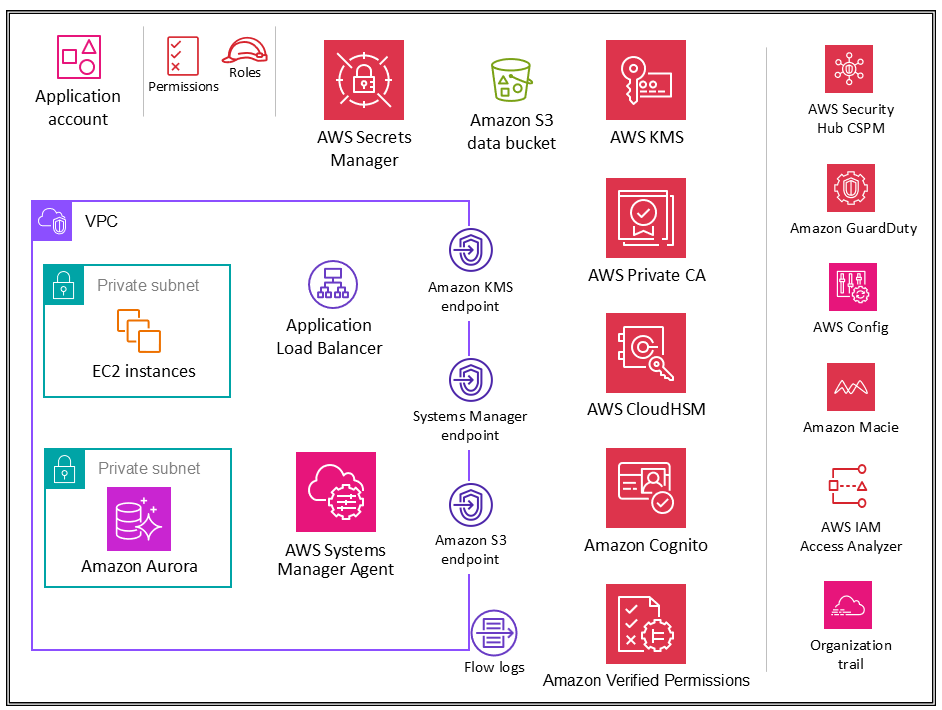

The following diagram illustrates the AWS security services that are configured in the Application account (along with the application itself).

The Application account hosts the primary infrastructure and services to run and maintain an enterprise application. The Application account and Workloads OU serve a few primary security objectives. First, you create a separate account for each application to provide boundaries and controls between workloads so that you can avoid issues of commingling roles, permissions, data, and encryption keys. You want to provide a separate account container where the application team can be given broad rights to manage their own infrastructure without affecting others. Next, you add a layer of protection by providing a mechanism for the security operations team to monitor and collect security data. To do this, employ an organization trail and local deployments of account security services (Amazon GuardDuty, AWS Config, AWS Security Hub CSPM, Amazon EventBridge, AWS IAM Access Analyzer), which are configured and monitored by the security team. Finally, you enable your enterprise to set controls centrally. You align the Application account to the broader security structure by making it a member of the Workloads OU, through which it inherits appropriate service permissions, constraints, and guardrails.

Design consideration

-

In your organization, you are likely to have more than one business application. The Workloads OU is intended to house most of your business-specific workloads, including both production and non-production environments. These workloads can be a mix of commercial off-the-shelf (COTS) applications and your own internally developed custom applications and data services. There are a few patterns for organizing different business applications along with their development environments. One pattern is to have multiple child OUs based on your development environment, such as production, staging, test, and development, and to use separate child AWS accounts under those OUs that pertain to different applications. Another common pattern is to have separate child OUs per application and then use separate child AWS accounts for individual development environments. The exact OU and account structure depends on your application design and the teams that manage those applications. Consider the security controls that you want to enforce, whether they are environment-specific or application-specific, because it is easier to implement those controls as SCPs on OUs. For further considerations on organizing workload-oriented OUs, see the Organizing workload-oriented OUs section of the AWS whitepaper Organizing Your AWS Environment Using Multiple Accounts.

Application VPC

The virtual private cloud (VPC) in the Application account needs both inbound access (for the simple web services that you are modeling) and outbound access (for application needs or AWS service needs). By default, resources inside a VPC are routable to one another. There are two private subnets: one to host the EC2 instances (application layer) and the other for Amazon Aurora (database layer). Network segmentation between different tiers, such as the application tier and database tier, is accomplished through VPC security groups, which restrict traffic at the instance level. For resiliency, the workload spans two or more Availability Zones and utilizes two subnets per zone.

Design consideration

-

You can use Traffic Mirroring to copy network traffic from an elastic network interface of EC2 instances. You can then send the traffic to out-of-band security and monitoring appliances for content inspection, threat monitoring, or troubleshooting. For example, you might want to monitor the traffic that is leaving your VPC or the traffic whose source is outside your VPC. In this case, you will mirror all traffic except for the traffic passing within your VPC and send it to a single monitoring appliance. Amazon VPC flow logs do not capture mirrored traffic; they generally capture information from packet headers only. Traffic Mirroring provides deeper insight into the network traffic by allowing you to analyze actual traffic content, including payload. Enable Traffic Mirroring only for the elastic network interface of EC2 instances that might be operating as part of sensitive workloads or for which you expect to need detailed diagnostics in the event of an issue.

VPC endpoints

VPC endpoints provide another layer of security control as well as scalability and reliability. Use these to connect your application VPC to other AWS services. (In the Application account, the AWS SRA employs VPC endpoints for AWS KMS, AWS Systems Manager, and Amazon S3.) Endpoints are virtual devices. They are horizontally scaled, redundant, and highly available VPC components. They allow communication between instances in your VPC and services without imposing availability risks or bandwidth constraints on your network traffic. You can use a VPC endpoint to privately connect your VPC to supported AWS services and VPC endpoint services powered by AWS PrivateLink without requiring an internet gateway, NAT device, VPN connection, or AWS Direct Connect connection. Instances in your VPC do not require public IP addresses to communicate with other AWS services. Traffic between your VPC and the other AWS service does not leave the Amazon network.

Another benefit of using VPC endpoints is to enable the configuration of endpoint policies. A VPC endpoint policy is an IAM resource policy that you attach to an endpoint when you create or modify the endpoint. If you do not attach an IAM policy when you create an endpoint, AWS attaches a default IAM policy for you that allows full access to the service. An endpoint policy does not override or replace IAM policies or service-specific policies (such as S3 bucket policies). It is a separate IAM policy for controlling access from the endpoint to the specified service. In this way, it adds another layer of control over which AWS principals can communicate with resources or services.
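As an illustration of the endpoint policy described above, the following minimal Python sketch builds a policy document for an S3 endpoint that allows a single application role to read and write objects in a single bucket. The bucket name, account ID, and role name are hypothetical placeholders, and the statement is only one possible shape for such a policy, not the policy that the AWS SRA deploys.

```python
import json


def build_s3_endpoint_policy(bucket_name: str, role_arn: str) -> dict:
    """Return an endpoint policy document as a plain dict.

    Assumption: the endpoint should only carry object reads and writes
    from one IAM role to one bucket. Both values are supplied by the caller.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowAppRoleToOneBucket",
                "Effect": "Allow",
                # The principal is left open and narrowed by the condition below.
                "Principal": "*",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                "Condition": {"ArnEquals": {"aws:PrincipalArn": role_arn}},
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    policy = build_s3_endpoint_policy(
        "example-app-bucket",
        "arn:aws:iam::111122223333:role/ExampleAppRole",
    )
    print(json.dumps(policy, indent=2))
```

Because an endpoint policy does not replace IAM or bucket policies, a request through the endpoint succeeds only if every applicable policy allows it.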

Amazon EC2

The Amazon EC2

Use separate VPCs (as a subset of account boundaries) to isolate infrastructure by workload segments. Use subnets to isolate the tiers of your application (for example, web, application, and database) within a single VPC. Use private subnets for your instances if they should not be accessed directly from the internet. To call the Amazon EC2 API from your private subnet without using an internet gateway, use AWS PrivateLink. Restrict access to your instances by using security groups. Use VPC Flow Logs to monitor the traffic that reaches your instances. Use Session Manager, a capability of AWS Systems Manager, to access your instances remotely instead of opening inbound SSH ports and managing SSH keys. Use separate Amazon Elastic Block Store (Amazon EBS) volumes for the operating system and your data. You can configure your AWS account to enforce the encryption of the new EBS volumes and snapshot copies that you create.

Implementation example

The AWS SRA

code library

Application Load Balancers

Application Load Balancers

AWS Certificate Manager (ACM) natively integrates with Application Load Balancers, and the AWS SRA uses ACM to generate and manage the necessary X.509 (TLS server) public certificates. You can enforce TLS 1.2 and strong ciphers for front-end connections through the Application Load Balancer security policy. For more information, see the Elastic Load Balancing documentation.

Design considerations

-

For common scenarios such as strictly internal applications that require a private TLS certificate on the Application Load Balancer, you can use ACM within this account to generate a private certificate from AWS Private CA. In the AWS SRA, the ACM root Private CA is hosted in the Security Tooling account and can be shared with the whole AWS organization or with specific AWS accounts to issue end-entity certificates, as described earlier in the Security Tooling account section.

-

For public certificates, you can use ACM to generate those certificates and manage them, including automated rotation. Alternatively, you can generate your own certificates by using SSL/TLS tools to create a certificate signing request (CSR), get the CSR signed by a certificate authority (CA) to produce a certificate, and then import the certificate into ACM or upload the certificate to IAM for use with the Application Load Balancer. If you import a certificate into ACM, you must monitor the expiration date of the certificate and renew it before it expires.

-

For additional layers of defense, you can deploy AWS WAF policies to protect the Application Load Balancer. Having edge policies, application policies, and even private or internal policy enforcement layers adds to the visibility of communication requests and provides unified policy enforcement. For more information, see the blog post Deploying defense in depth using AWS Managed Rules for AWS WAF.

AWS Private CA

AWS Private Certificate Authority (AWS Private CA) is used in the Application account to generate private certificates to be used with an Application Load Balancer. It is a common scenario for Application Load Balancers to serve secure content over TLS. This requires TLS certificates to be installed on the Application Load Balancer. For applications that are strictly internal, private TLS certificates can provide the secure channel.

In the AWS SRA, AWS Private CA is hosted in the Security Tooling account and is shared out to the Application account by using AWS RAM. This allows developers in an Application account to request a certificate from a shared private CA. Sharing CAs across your organization or across AWS accounts helps reduce the cost and complexity of creating and managing duplicate CAs in all your AWS accounts. When you use ACM to issue private certificates from a shared CA, the certificate is generated locally in the requesting account, and ACM provides full lifecycle management and renewal.

Amazon Inspector

The AWS SRA uses Amazon Inspector

Amazon Inspector is placed in the Application account, because it provides vulnerability management services to EC2 instances in this account. Additionally, Amazon Inspector reports on unwanted network paths to and from EC2 instances.

Amazon Inspector in member accounts is centrally managed by the delegated administrator account. In the AWS SRA, the Security Tooling account is the delegated administrator account. The delegated administrator account can manage findings data and certain settings for members of the organization. This includes viewing aggregated findings details for all member accounts, enabling or disabling scans for member accounts, and reviewing scanned resources within the AWS organization.

Design consideration

-

You can use Patch Manager, a capability of AWS Systems Manager, to trigger on-demand patching to remediate zero-day or other critical security vulnerabilities that Amazon Inspector reports. Patch Manager helps you patch those vulnerabilities without having to wait for your normal patching schedule. The remediation is carried out by using a Systems Manager Automation runbook. For more information, see the two-part blog series Automate vulnerability management and remediation in AWS using Amazon Inspector and AWS Systems Manager.

AWS Systems Manager

AWS Systems Manager

In addition to these general automation capabilities, Systems Manager supports a number of preventive, detective, and responsive security features. AWS Systems Manager Agent (SSM Agent) is Amazon software that can be installed and configured on an EC2 instance, an on-premises server, or a virtual machine (VM). SSM Agent makes it possible for Systems Manager to update, manage, and configure these resources. Systems Manager helps you maintain security and compliance by scanning these managed instances and reporting (or taking corrective action) on any violations it detects in your patch, configuration, and custom policies.

The AWS SRA uses Session Manager, a capability of Systems Manager, to provide an interactive, browser-based shell and CLI experience. This provides secure and auditable instance management without the need to open inbound ports, maintain bastion hosts, or manage SSH keys. The AWS SRA uses Patch Manager, a capability of Systems Manager, to apply patches to EC2 instances for both operating systems and applications.

The AWS SRA also uses Automation, a capability of Systems Manager, to simplify common maintenance and deployment tasks of Amazon EC2 instances and other AWS resources. Automation can simplify common IT tasks such as changing the state of one or more nodes (using an approval automation) and managing node states according to a schedule. Systems Manager includes features that help you target large groups of instances by using tags, and velocity controls that help you roll out changes according to the limits you define. Automation offers one-click automations for simplifying complex tasks such as creating golden Amazon Machine Images (AMIs) and recovering unreachable EC2 instances. Additionally, you can enhance operational security by giving IAM roles access to specific runbooks to perform certain functions, without directly giving permissions to those roles. For example, if you want an IAM role to have permissions to restart specific EC2 instances after patch updates, but you don't want to grant the permission directly to that role, you can instead create an Automation runbook and give the role permissions to only run the runbook.
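The runbook-only delegation described above can be sketched as an IAM identity policy that allows a role to start one specific Automation runbook and nothing else. This is a minimal Python sketch under stated assumptions: the Region, account ID, and runbook name are hypothetical, and a real deployment would likely need further statements (for example, to read execution status, or `iam:PassRole` if the caller supplies an automation service role).

```python
import json


def build_runbook_only_policy(region: str, account_id: str, runbook_name: str) -> dict:
    """Return an identity policy that permits starting a single runbook.

    The role receives no direct EC2 permissions. The restart itself is
    performed by the runbook, which runs under its own permissions.
    """
    runbook_arn = (
        f"arn:aws:ssm:{region}:{account_id}:"
        f"automation-definition/{runbook_name}:*"  # any version of this runbook
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "StartOnlyTheApprovedRunbook",
                "Effect": "Allow",
                "Action": "ssm:StartAutomationExecution",
                "Resource": runbook_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    policy = build_runbook_only_policy(
        "us-east-1", "111122223333", "Example-RestartPatchedInstances"
    )
    print(json.dumps(policy, indent=2))
```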

Design considerations

-

Systems Manager relies on EC2 instance metadata to function correctly. Systems Manager can access instance metadata by using either version 1 or version 2 of the Instance Metadata Service (IMDSv1 and IMDSv2).

-

SSM Agent has to communicate with different AWS services and resources such as Amazon EC2 messages, Systems Manager, and Amazon S3. For this communication to happen, the subnet requires either outbound internet connectivity or provisioning of appropriate VPC endpoints. The AWS SRA uses VPC endpoints for the SSM Agent to establish private network paths to various AWS services.

-

Using Automation, you can share best practices with the rest of your organization. You can create best practices for resource management in runbooks and share the runbooks across AWS Regions and groups. You can also constrain the allowed values for runbook parameters. For these use cases, you might have to create Automation runbooks in a central account such as Security Tooling or Shared Services and share them with the rest of the AWS organization. Common use cases include the capability to centrally implement patching and security updates, remediate drift on VPC configurations or S3 bucket policies, and manage EC2 instances at scale. For implementation details, see the Systems Manager documentation.

Amazon Aurora

In the AWS SRA, Amazon Aurora

Design consideration

-

As in many database services, security for Aurora is managed at three levels. To control who can perform Amazon Relational Database Service (Amazon RDS) management actions on Aurora DB clusters and DB instances, you use IAM. To control which devices and EC2 instances can open connections to the cluster endpoint and port of the DB instance for Aurora DB clusters in a VPC, you use a VPC security group. To authenticate logins and permissions for an Aurora DB cluster, you can take the same approach as with a stand-alone DB instance of MySQL or PostgreSQL, or you can use IAM database authentication for Aurora MySQL-Compatible Edition. With this latter approach, you authenticate to your Aurora MySQL-Compatible DB cluster by using an IAM role and an authentication token.
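For the IAM database authentication option mentioned above, the IAM side can be sketched as an identity policy that grants the `rds-db:connect` action for one database user on one cluster. This is a minimal Python sketch: the Region, account ID, cluster resource ID, and database user name are hypothetical placeholders. The policy only allows the role to request an authentication token; the database user must still be created in the database and configured for IAM authentication.

```python
import json


def build_db_connect_policy(
    region: str, account_id: str, cluster_resource_id: str, db_user: str
) -> dict:
    """Return an identity policy that lets a role connect as one DB user."""
    db_user_arn = (
        f"arn:aws:rds-db:{region}:{account_id}:"
        f"dbuser:{cluster_resource_id}/{db_user}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ConnectAsSingleDatabaseUser",
                "Effect": "Allow",
                "Action": "rds-db:connect",
                "Resource": db_user_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    policy = build_db_connect_policy(
        "us-east-1", "111122223333", "cluster-EXAMPLERESOURCEID", "example_app_user"
    )
    print(json.dumps(policy, indent=2))
```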

Amazon S3

Amazon S3

AWS KMS

The AWS SRA illustrates the recommended distribution model for key management, where the KMS key resides within the same AWS account as the resource to be encrypted. For this reason, AWS KMS is used in the Application account in addition to being included in the Security Tooling account. In the Application account, AWS KMS is used to manage keys that are specific to the application resources. You can implement a separation of duties by using key policies to grant key usage permissions to local application roles and to restrict management and monitoring permissions to your key custodians.
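The separation of duties described above can be sketched as a key policy with two statements: one that grants cryptographic usage to a local application role, and one that grants management actions to a key custodian role. This is a minimal Python sketch: both role ARNs are hypothetical, the action lists are illustrative rather than exhaustive, and a real key policy typically needs additional statements (for example, one that enables IAM policies for the account).

```python
import json

# Actions that use the key for cryptographic operations.
USAGE_ACTIONS = [
    "kms:Encrypt",
    "kms:Decrypt",
    "kms:ReEncrypt*",
    "kms:GenerateDataKey*",
    "kms:DescribeKey",
]

# Actions that manage the key but do not use it to encrypt or decrypt.
ADMIN_ACTIONS = [
    "kms:Create*",
    "kms:Enable*",
    "kms:Disable*",
    "kms:Put*",
    "kms:Update*",
    "kms:Revoke*",
    "kms:Get*",
    "kms:List*",
    "kms:ScheduleKeyDeletion",
    "kms:CancelKeyDeletion",
]


def build_key_policy(app_role_arn: str, custodian_role_arn: str) -> dict:
    """Return a key policy that splits usage from administration."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowKeyUsageByApplicationRole",
                "Effect": "Allow",
                "Principal": {"AWS": app_role_arn},
                "Action": USAGE_ACTIONS,
                "Resource": "*",  # in a key policy, this means the key itself
            },
            {
                "Sid": "AllowKeyAdministrationByCustodians",
                "Effect": "Allow",
                "Principal": {"AWS": custodian_role_arn},
                "Action": ADMIN_ACTIONS,
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical role ARNs, for illustration only.
    policy = build_key_policy(
        "arn:aws:iam::111122223333:role/ExampleAppRole",
        "arn:aws:iam::111122223333:role/ExampleKeyCustodian",
    )
    print(json.dumps(policy, indent=2))
```

In this sketch the custodian role can manage the key but cannot decrypt application data with it, and the application role can use the key but cannot change or delete it.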

Design consideration

-

In a distributed model, the AWS KMS key management responsibility resides with the application team. However, your central security team can be responsible for the governance and monitoring of important cryptographic events such as the following:

-

The imported key material in a KMS key is nearing its expiration date.

-

The key material in a KMS key was automatically rotated.

-

A KMS key was deleted.

-

There is a high rate of decryption failure.


AWS CloudHSM

AWS CloudHSM

Design consideration

-

If you have a hard requirement for FIPS 140-2 Level 3, you can also choose to configure AWS KMS to use the CloudHSM cluster as a custom key store rather than using the native KMS key store. By doing this, you benefit from the integration between AWS KMS and AWS services that encrypt your data, while being responsible for the HSMs that protect your KMS keys. This combines single-tenant HSMs under your control with the ease of use and integration of AWS KMS. To manage your CloudHSM infrastructure, you have to employ a public key infrastructure (PKI) and have a team that has experience managing HSMs.

AWS Secrets Manager

AWS Secrets Manager

With Secrets Manager, you can manage access to secrets by using fine-grained IAM policies and resource-based policies. You can help secure secrets by encrypting them with encryption keys that you manage by using AWS KMS. Secrets Manager also integrates with AWS logging and monitoring services for centralized auditing.

Secrets Manager uses envelope encryption with AWS KMS keys and data keys to protect each secret value. When you create a secret, you can choose any symmetric customer managed key in the AWS account and Region, or you can use the AWS managed key for Secrets Manager.

As a best practice, you can monitor your secrets to log any changes to them. This helps you ensure that any unexpected usage or change can be investigated. Unwanted changes can be rolled back. Secrets Manager currently supports two AWS services that enable you to monitor your organization and activity: AWS CloudTrail and AWS Config. CloudTrail captures all API calls for Secrets Manager as events, including calls from the Secrets Manager console and from code calls to the Secrets Manager APIs. In addition, CloudTrail captures other related (non-API) events that might have a security or compliance impact on your AWS account or might help you troubleshoot operational problems. These include certain secrets rotation events and deletion of secret versions. AWS Config can provide detective controls by tracking and monitoring changes to secrets in Secrets Manager. These changes include a secret's description, rotation configuration, tags, and relationship to other AWS resources such as the KMS encryption key or the AWS Lambda functions used for secret rotation. You can also configure Amazon EventBridge, which receives configuration and compliance change notifications from AWS Config, to route particular secrets events for notification or remediation actions.

In the AWS SRA, Secrets Manager is located in the Application account to support local application use cases and to manage secrets close to their usage. Here, an instance profile is attached to the EC2 instances in the Application account. Separate secrets can then be configured in Secrets Manager to allow that instance profile to retrieve secrets—for example, to join the appropriate Active Directory or LDAP domain and to access the Aurora database. Secrets Manager integrates with Amazon RDS to manage user credentials when you create, modify, or restore an Amazon RDS DB instance or Multi-AZ DB cluster. This helps you manage the creation and rotation of keys and replaces the hardcoded credentials in your code with programmatic API calls to Secrets Manager.
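One way to let the instance profile retrieve a specific secret is a resource-based policy attached to that secret. The following minimal Python sketch builds such a policy for the IAM role behind the instance profile. The role ARN is a hypothetical placeholder, and an identity policy on the role would be an equally valid way to grant the same access.

```python
import json


def build_secret_resource_policy(instance_role_arn: str) -> dict:
    """Return a resource policy that lets one role read the secret's value."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowInstanceRoleToReadSecret",
                "Effect": "Allow",
                "Principal": {"AWS": instance_role_arn},
                "Action": "secretsmanager:GetSecretValue",
                "Resource": "*",  # in a secret's resource policy, the secret itself
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical role ARN, for illustration only.
    policy = build_secret_resource_policy(
        "arn:aws:iam::111122223333:role/ExampleAppInstanceRole"
    )
    print(json.dumps(policy, indent=2))
```

Keeping one policy per secret (for example, one for the domain-join credentials and one for the database credentials) lets each secret be granted to only the roles that need it.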

Design consideration

-

In general, configure and manage Secrets Manager in the account that is closest to where the secrets will be used. This approach takes advantage of the local knowledge of the use case and provides speed and flexibility to application development teams. For tightly controlled information where an additional layer of control might be appropriate, secrets can be centrally managed by Secrets Manager in the Security Tooling account.

Amazon Cognito

Amazon Cognito

Amazon Cognito provides a built-in and customizable UI for user sign-up and sign-in. You can use Android, iOS, and JavaScript SDKs for Amazon Cognito to add user sign-up and sign-in pages to your apps. Amazon Cognito Sync is an AWS service and client library that enables cross-device syncing of application-related user data.

Amazon Cognito supports multi-factor authentication and encryption of data at rest and data in transit. Amazon Cognito user pools provide advanced security features to help protect access to accounts in your application. These advanced security features provide risk-based adaptive authentication and protection from the use of compromised credentials.

Design considerations

-

You can create an AWS Lambda function and then trigger that function during user pool operations such as user sign-up, confirmation, and sign-in (authentication) with an AWS Lambda trigger. You can add authentication challenges, migrate users, and customize verification messages. For common operations and user flow, see the Amazon Cognito documentation. Amazon Cognito calls Lambda functions synchronously.

-

You can use Amazon Cognito user pools to secure small, multi-tenant applications. A common use case of multi-tenant design is to run workloads to support testing multiple versions of an application. Multi-tenant design is also useful for testing a single application with different datasets, which allows full use of your cluster resources. However, make sure that the number of tenants and expected volume align with the related Amazon Cognito service quotas. These quotas are shared across all tenants in your application.

Amazon Verified Permissions

Amazon Verified Permissions

You can connect your application to the service through the API to authorize user access requests. For each authorization request, the service retrieves the relevant policies and evaluates those policies to determine whether a user is permitted to take an action on a resource, based on context inputs such as users, roles, group membership, and attributes. You can configure and connect Verified Permissions to send your policy management and authorization logs to AWS CloudTrail. If you use Amazon Cognito as your identity store, you can integrate with Verified Permissions and use the ID and access tokens that Amazon Cognito returns in the authorization decisions in your applications. You provide Amazon Cognito tokens to Verified Permissions, which uses the attributes that the tokens contain to represent the principal and identify the principal’s entitlements. For more information about this integration, see the AWS blog post Simplifying fine-grained authorization with Amazon Verified Permissions and Amazon Cognito.

Verified Permissions helps you define policy-based access control (PBAC). PBAC is an access control model that uses permissions that are expressed as policies to determine who can access which resources in an application. PBAC brings together role-based access control (RBAC) and attribute-based access control (ABAC), resulting in a more powerful and flexible access control model. To learn more about PBAC and how you can design an authorization model by using Verified Permissions, see the AWS blog post Policy-based access control in application development with Amazon Verified Permissions.
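To make the idea of combining RBAC and ABAC in one policy set more concrete, here is a toy Python sketch of policy-based evaluation. It is not the Verified Permissions API or its policy language. The `Request` type, the three example policies, and the attribute names are all hypothetical, and the evaluation rule used here (deny by default, and any forbid overrides a permit) is an assumption chosen for the illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Request:
    roles: frozenset          # roles held by the principal (RBAC input)
    principal_attrs: dict     # attributes of the principal (ABAC input)
    action: str
    resource_attrs: dict      # attributes of the resource (ABAC input)


# A policy returns "permit", "forbid", or None when it does not apply.
Policy = Callable[[Request], Optional[str]]


def editors_may_edit(req: Request) -> Optional[str]:
    """Role-based rule: principals with the editor role may edit."""
    if "editor" in req.roles and req.action == "edit":
        return "permit"
    return None


def same_department_may_view(req: Request) -> Optional[str]:
    """Attribute-based rule: view is allowed within the same department."""
    dept = req.principal_attrs.get("department")
    if (
        req.action == "view"
        and dept is not None
        and dept == req.resource_attrs.get("department")
    ):
        return "permit"
    return None


def restricted_needs_clearance(req: Request) -> Optional[str]:
    """Guardrail: restricted resources require a cleared principal."""
    restricted = req.resource_attrs.get("classification") == "restricted"
    if restricted and not req.principal_attrs.get("cleared", False):
        return "forbid"
    return None


def is_authorized(req: Request, policies: Iterable[Policy]) -> bool:
    """Deny by default; a single forbid overrides any permit."""
    decisions = [policy(req) for policy in policies]
    if "forbid" in decisions:
        return False
    return "permit" in decisions


POLICIES = [editors_may_edit, same_department_may_view, restricted_needs_clearance]

if __name__ == "__main__":
    request = Request(
        roles=frozenset({"editor"}),
        principal_attrs={"department": "finance"},
        action="edit",
        resource_attrs={"department": "finance"},
    )
    print(is_authorized(request, POLICIES))
```

The role rule and the attribute rule live side by side in the same policy set, which is the point of the PBAC model described above.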

In the AWS SRA, Verified Permissions is located in the Application account to support permission management for applications through its integration with Amazon Cognito.

Layered defense

The Application account provides an opportunity to illustrate the layered defense principles that AWS enables. Consider the security of the EC2 instances that make up the core of a simple example application represented in the AWS SRA, and you can see the way AWS services work together in a layered defense. This approach aligns to the structural view of AWS security services, as described in the section Apply security services across your AWS organization earlier in this guide.

-

The innermost layer is the EC2 instances. As mentioned earlier, EC2 instances include many native security features either by default or as options. Examples include IMDSv2, the Nitro system, and Amazon EBS storage encryption.

-

The second layer of protection focuses on the operating system and software running on the EC2 instances. Services such as Amazon Inspector and AWS Systems Manager enable you to monitor, report, and take corrective action on these configurations. Inspector monitors your software for vulnerabilities, and Systems Manager helps you maintain security and compliance by scanning managed instances for their patch and configuration status, and then reporting and taking any corrective actions you specify.

-

The instances, and the software running on these instances, sit within your AWS networking infrastructure. In addition to using the security features of Amazon VPC, the AWS SRA also makes use of VPC endpoints to provide private connectivity between the VPC and supported AWS services, and to provide a mechanism to place access policies at the network boundary.

-

The activity and configuration of the EC2 instances, software, network, and IAM roles and resources are further monitored by AWS account-focused services such as AWS Security Hub CSPM, Amazon GuardDuty, AWS CloudTrail, AWS Config, AWS IAM Access Analyzer, and Amazon Macie.

-

Finally, beyond the Application account, AWS RAM helps control which resources are shared with other accounts, and service control policies (SCPs) in AWS Organizations help you enforce consistent permissions across the AWS organization.