Las traducciones son generadas a través de traducción automática. En caso de conflicto entre la traducción y la version original de inglés, prevalecerá la version en inglés.

Implemente un caso de uso de RAG AWS mediante Terraform y Amazon Bedrock

Martin Maritsch, Nicolas Jacob Baer, Olivier Brique, Julian Ferdinand Grueber, Alice Morano y Nicola D Orazio, Amazon Web Services

Resumen

AWS ofrece varias opciones para desarrollar sus casos de uso de IA generativa habilitados para la generación aumentada de recuperación

El usuario carga manualmente un archivo en un bucket de Amazon Simple Storage Service (Amazon S3), como un archivo de Microsoft Excel o un documento PDF. (Para obtener más información sobre los tipos de archivos compatibles, consulte la documentación no estructurada

). El contenido del archivo se extrae y se incrusta en una base de datos de conocimiento basada en Aurora PostgreSQL, sin servidor, compatible con PostgreSQL, que permite la ingesta de documentos casi en tiempo real en el almacén vectorial. Este enfoque permite al modelo RAG acceder a la información relevante y recuperarla para casos de uso en los que las bajas latencias son importantes.

Cuando el usuario utiliza el modelo de generación de texto, mejora la interacción al recuperar y aumentar el contenido relevante de los archivos cargados anteriormente.

El patrón utiliza Amazon Titan Text Embeddings v2 como modelo de incrustación y Anthropic Claude 3 Sonnet

Requisitos previos y limitaciones

Requisitos previos

Cuenta de AWS Un activo.

AWS Command Line Interface (AWS CLI) instalado y configurado con su Cuenta de AWS. Para obtener instrucciones de instalación, consulte Instalar o actualizar a la última versión de AWS CLI en la AWS CLI documentación. Para revisar sus AWS credenciales y el acceso a su cuenta, consulte los ajustes de configuración y del archivo de credenciales en la AWS CLI documentación.

Acceso al modelo que está habilitado para los modelos de idiomas grandes requeridos (LLMs) en la consola Amazon Bedrock de su Cuenta de AWS. Este patrón requiere lo siguiente: LLMs

amazon.titan-embed-text-v2:0anthropic.claude-3-sonnet-20240229-v1:0

Limitaciones

Esta arquitectura de ejemplo no incluye una interfaz para responder preguntas mediante programación con la base de datos vectorial. Si su caso de uso requiere una API, considere añadir Amazon API Gateway con una AWS Lambda función que ejecute tareas de recuperación y respuesta a preguntas.

Este ejemplo de arquitectura no incluye funciones de monitoreo para la infraestructura implementada. Si su caso de uso requiere supervisión, considere la posibilidad de añadir servicios AWS de supervisión.

Si carga muchos documentos en poco tiempo al bucket de Amazon S3, es posible que la función Lambda encuentre límites de velocidad. Como solución, puede desacoplar la función Lambda de una cola de Amazon Simple Queue Service (Amazon SQS) donde puede controlar la frecuencia de las invocaciones de Lambda.

Algunas no están disponibles en todas. Servicios de AWS Regiones de AWS Para ver la disponibilidad por región, consulta Servicios de AWS por región

. Para ver puntos de enlace específicos, consulta Puntos de enlace y cuotas del servicio y elige el enlace para el servicio.

Versiones de producto

Arquitectura

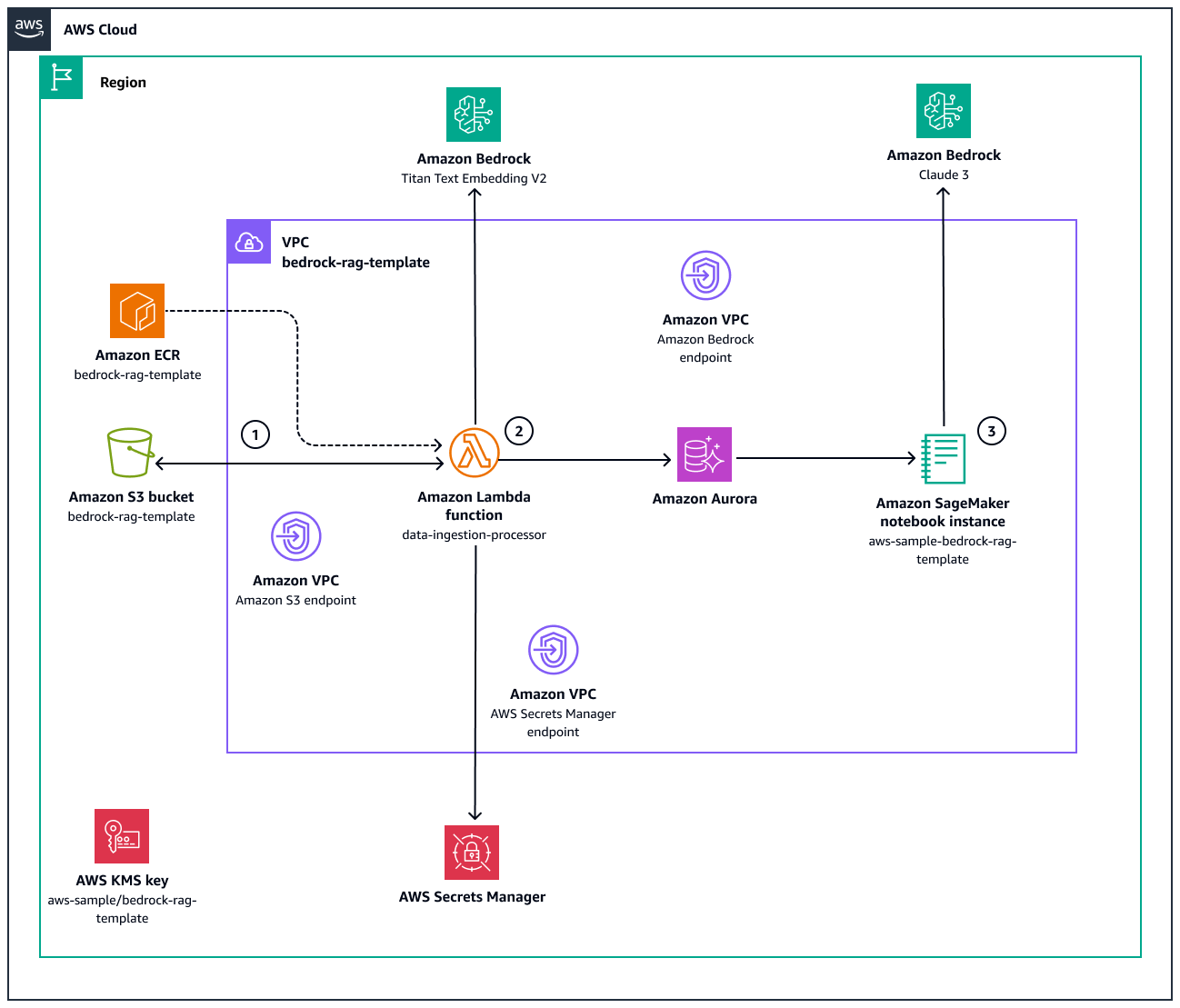

En el siguiente diagrama se muestran los componentes de la arquitectura y el flujo de trabajo de esta aplicación.

Este diagrama ilustra lo siguiente:

Cuando se crea un objeto en el bucket de Amazon S3

bedrock-rag-template-<account_id>, una notificación de Amazon S3 invoca la funcióndata-ingestion-processorLambda.La función Lambda

data-ingestion-processorse basa en una imagen de Docker almacenada en el repositorio de Amazon Elastic Container Registry (Amazon ECR).bedrock-rag-templateLa función usa el LangChain S3 FileLoader

para leer el archivo como un documento. LangChain Luego, divide LangChain RecursiveCharacterTextSplitter cada documento, con una CHUNK_SIZEy unaCHUNK_OVERLAPque dependen del tamaño máximo del token del modelo de incrustación de Amazon Titan Text Embedding V2. A continuación, la función Lambda invoca el modelo de incrustación de Amazon Bedrock para incrustar los fragmentos en representaciones vectoriales numéricas. Por último, estos vectores se almacenan en la base de datos PostgreSQL de Aurora. Para acceder a la base de datos, la función Lambda recupera primero el nombre de usuario y la contraseña de. AWS Secrets ManagerEn la instancia de Amazon SageMaker AI notebook

aws-sample-bedrock-rag-template, el usuario puede escribir un mensaje de pregunta. El código invoca a Claude 3 en Amazon Bedrock y añade la información de la base de conocimientos al contexto de la solicitud. Como resultado, Claude 3 proporciona respuestas utilizando la información de los documentos.

El enfoque de este patrón con respecto a las redes y la seguridad es el siguiente:

La función Lambda

data-ingestion-processorse encuentra en una subred privada dentro de la nube privada virtual (VPC). La función Lambda no puede enviar tráfico a la Internet pública debido a su grupo de seguridad. Como resultado, el tráfico a Amazon S3 y Amazon Bedrock se enruta únicamente a través de los puntos de enlace de la VPC. Por lo tanto, el tráfico no atraviesa la red pública de Internet, lo que reduce la latencia y añade una capa adicional de seguridad a nivel de red.Todos los recursos y datos se cifran siempre que sea posible mediante la clave AWS Key Management Service (AWS KMS) con el alias

aws-sample/bedrock-rag-template.

Automatizar y escalar

Este patrón utiliza Terraform para implementar la infraestructura del repositorio de código en un Cuenta de AWS.

Herramientas

Servicios de AWS

La edición de Amazon Aurora compatible con PostgreSQL es un motor de base de datos relacional compatible con ACID, completamente administrado que le permite configurar, utilizar y escalar implementaciones de PostgreSQL. En este patrón, Aurora, compatible con PostgreSQL, utiliza el complemento pgvector como base de datos vectorial.

Amazon Bedrock es un servicio totalmente gestionado que pone a su disposición modelos básicos de alto rendimiento (FMs) de las principales empresas emergentes de IA y Amazon a través de una API unificada.

AWS Command Line Interface (AWS CLI) es una herramienta de código abierto que le ayuda a interactuar Servicios de AWS mediante comandos en su consola de línea de comandos.

Amazon Elastic Container Registry (Amazon ECR) es un servicio de registro de imágenes de contenedor administrado que es seguro, escalable y fiable. En este patrón, Amazon ECR aloja la imagen de Docker para la función Lambda

data-ingestion-processor.AWS Identity and Access Management (IAM) le ayuda a administrar de forma segura el acceso a sus AWS recursos al controlar quién está autenticado y autorizado a usarlos.

AWS Key Management Service (AWS KMS) le ayuda a crear y controlar claves criptográficas para proteger sus datos.

AWS Lambda es un servicio de computación que ayuda a ejecutar código sin necesidad de aprovisionar ni administrar servidores. Ejecuta el código solo cuando es necesario y amplía la capacidad de manera automática, por lo que solo pagará por el tiempo de procesamiento que utilice. En este patrón, Lambda ingiere datos en el almacén de vectores.

Amazon SageMaker AI es un servicio de aprendizaje automático (ML) gestionado que le ayuda a crear y entrenar modelos de aprendizaje automático para luego implementarlos en un entorno hospedado listo para la producción.

AWS Secrets Manager lo ayuda a reemplazar las credenciales codificadas en su código, incluidas contraseñas, con una llamada a la API de Secrets Manager para recuperar el secreto mediante programación.

Amazon Simple Storage Service (Amazon S3) es un servicio de almacenamiento de objetos basado en la nube que le ayuda a almacenar, proteger y recuperar cualquier cantidad de datos.

Amazon Virtual Private Cloud (Amazon VPC) le ayuda a lanzar AWS recursos en una red virtual que haya definido. Esa red virtual es similar a la red tradicional que utiliza en su propio centro de datos, con los beneficios de usar la infraestructura escalable de AWS. La VPC incluye subredes y tablas de enrutamiento para controlar el flujo de tráfico.

Otras herramientas

Docker

es un conjunto de productos de plataforma como servicio (PaaS) que utiliza la virtualización a nivel del sistema operativo para entregar software en contenedores. HashiCorp Terraform

es una herramienta de infraestructura como código (IaC) que le ayuda a usar el código para aprovisionar y administrar la infraestructura y los recursos de la nube. Poetry

es una herramienta para la gestión y el empaquetado de dependencias en Python. Python

es un lenguaje de programación informático de uso general.

Repositorio de código

El código de este patrón está disponible en el repositorio GitHub terraform-rag-template-using-amazon-bedrock

Prácticas recomendadas

Aunque este ejemplo de código se puede implementar en cualquier Región de AWS lugar, le recomendamos que utilice US East (North Virginia)

us-east-1o US West (North California).us-west-1Esta recomendación se basa en la disponibilidad de modelos de base e incrustación en Amazon Bedrock en el momento de la publicación de este patrón. Para obtener una up-to-date lista del soporte del modelo base de Amazon Bedrock en Regiones de AWS, consulte Soporte de modelos Región de AWS en la documentación de Amazon Bedrock. Para obtener información sobre cómo implementar este ejemplo de código en otras regiones, consulte la información adicional.Este patrón solo proporciona una demostración proof-of-concept (PoC) o piloto. Si desea llevar el código a la fase de producción, asegúrese de seguir las siguientes prácticas recomendadas:

Habilite el registro de acceso al servidor para Amazon S3.

Configure la supervisión y las alertas de la función Lambda.

Si su caso de uso requiere una API, considere añadir Amazon API Gateway con una función Lambda que ejecute tareas de recuperación y respuesta a preguntas.

Siga el principio de privilegios mínimos y conceda los permisos mínimos necesarios para realizar una tarea. Para obtener más información, consulte Otorgar privilegio mínimo y Prácticas recomendadas de seguridad en la documentación de IAM.

Epics

| Tarea | Descripción | Habilidades requeridas |

|---|---|---|

Clonar el repositorio. | Para clonar el GitHub repositorio proporcionado con este patrón, utilice el siguiente comando:

| AWS DevOps |

Configure las variables. | Para configurar los parámetros de este patrón, haga lo siguiente:

| AWS DevOps |

Implemente la solución. | Para implementar la solución, haga lo siguiente:

La implementación de la infraestructura aprovisiona una instancia de SageMaker IA dentro de la VPC y con los permisos para acceder a la base de datos PostgreSQL de Aurora. | AWS DevOps |

| Tarea | Descripción | Habilidades requeridas |

|---|---|---|

Ejecuta la demostración. | Una vez que la implementación anterior de la infraestructura se haya realizado correctamente, siga los siguientes pasos para ejecutar la demostración en un cuaderno de Jupyter:

El cuaderno Jupyter le guía a través del siguiente proceso:

| AWS general |

| Tarea | Descripción | Habilidades requeridas |

|---|---|---|

Limpie la infraestructura. | Para eliminar todos los recursos que creó cuando ya no sean necesarios, utilice el siguiente comando:

| AWS DevOps |

Recursos relacionados

AWS resources

Otros recursos

Información adicional

Implementación de una base de datos vectorial

Este patrón utiliza Aurora, compatible con PostgreSQL, para implementar una base de datos vectorial para RAG. Como alternativa a Aurora PostgreSQL AWS , ofrece otras capacidades y servicios para RAG, como Amazon Bedrock Knowledge Bases y Amazon Service. OpenSearch Puede elegir la solución que mejor se adapte a sus requisitos específicos:

Amazon OpenSearch Service proporciona motores de búsqueda y análisis distribuidos que puede utilizar para almacenar y consultar grandes volúmenes de datos.

Las bases de conocimiento de Amazon Bedrock están diseñadas para crear e implementar bases de conocimiento como una abstracción adicional para simplificar el proceso de ingesta y recuperación de RAG. Las bases de conocimiento de Amazon Bedrock funcionan tanto con Aurora PostgreSQL como con Amazon Service. OpenSearch

¿Implementar en otros Regiones de AWS

Como se describe en Arquitectura, le recomendamos que utilice la región EE.UU. Este (Norte de Virginia) us-east-1 o EE.UU. Oeste (Norte de California) us-west-1 para implementar este ejemplo de código. Sin embargo, hay dos formas posibles de implementar este ejemplo de código en regiones distintas de us-east-1 yus-west-1. Puede configurar la región de despliegue en el commons.tfvars archivo. Para acceder al modelo básico entre regiones, tenga en cuenta las siguientes opciones:

Atravesar la Internet pública: si el tráfico puede atravesar la Internet pública, añada puertas de enlace de Internet a la VPC. A continuación, ajuste el grupo de seguridad asignado a la función Lambda

data-ingestion-processory a la instancia de SageMaker AI notebook para permitir el tráfico saliente a la Internet pública.No utilizar la red pública de Internet: para implementar este ejemplo en cualquier otra región que no sea

us-west-1, haga lous-east-1siguiente:

En la

us-west-1regiónus-east-1o en la región, cree una VPC adicional que incluya un punto de enlace de VPC para.bedrock-runtimeCree una conexión de emparejamiento mediante el emparejamiento de VPC o una puerta de enlace de tránsito a la VPC de la aplicación.

Al configurar el cliente

bedrock-runtimeboto3 en cualquier función de Lambda fueraus-east-1ous-west-1, pase el nombre de DNS privado del punto final de la VPC para inbedrock-runtimeous-east-1us-west-1 como tal al cliente boto3.endpoint_url