Infrastructure OU - Network account

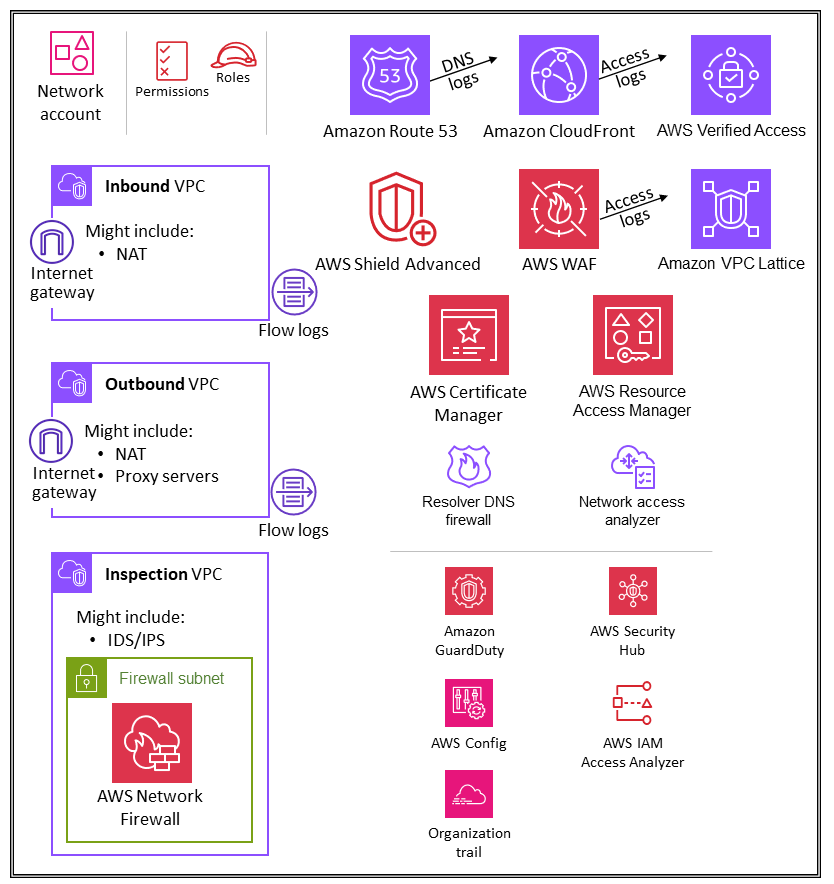

The following diagram illustrates the AWS security services that are configured in the Network account.

The Network account manages the gateway between your application and the broader internet. It is important to protect that two-way interface. The Network account isolates the networking services, configuration, and operation from the individual application workloads, security, and other infrastructure. This arrangement not only limits connectivity, permissions, and data flow, but also supports separation of duties and least privilege for the teams that need to operate in these accounts. By splitting network flow into separate inbound and outbound virtual private clouds (VPCs), you can protect sensitive infrastructure and traffic from undesired access. The inbound network is generally considered higher risk and deserves appropriate routing, monitoring, and potential issue mitigations. These infrastructure accounts will inherit permission guardrails from the Org Management account and the Infrastructure OU. Networking (and security) teams manage the majority of the infrastructure in this account.

Network architecture

Although network design and specifics are beyond the scope of this document, we recommend these three options for network connectivity between the various accounts: VPC peering, AWS PrivateLink, and AWS Transit Gateway. Important considerations in choosing among these are operational norms, budgets, and specific bandwidth needs.

- VPC peering ‒ The simplest way to connect two VPCs is to use VPC peering. A connection enables full bidirectional connectivity between the VPCs. VPCs that are in separate accounts and AWS Regions can also be peered together. At scale, when you have tens to hundreds of VPCs, interconnecting them with peering results in a mesh of hundreds to thousands of peering connections, which can be challenging to manage and scale. VPC peering is best used when resources in one VPC must communicate with resources in another VPC, the environment of both VPCs is controlled and secured, and the number of VPCs to be connected is fewer than 10 (to allow for the individual management of each connection).

- AWS PrivateLink ‒ PrivateLink provides private connectivity between VPCs, services, and applications. You can create your own application in your VPC and configure it as a PrivateLink-powered service (referred to as an endpoint service). Other AWS principals can create a connection from their VPC to your endpoint service by using an interface VPC endpoint or a Gateway Load Balancer endpoint, depending on the type of service. When you use PrivateLink, service traffic doesn't pass across a publicly routable network. Use PrivateLink when you have a client-server setup where you want to give one or more consumer VPCs unidirectional access to a specific service or set of instances in the service provider VPC. This is also a good option when clients and servers in the two VPCs have overlapping IP addresses, because PrivateLink uses elastic network interfaces within the client VPC so that there are no IP conflicts with the service provider.

- AWS Transit Gateway ‒ Transit Gateway provides a hub-and-spoke design for connecting VPCs and on-premises networks as a fully managed service without requiring you to provision virtual appliances. AWS manages high availability and scalability. A transit gateway is a regional resource and can connect thousands of VPCs within the same AWS Region. You can attach your hybrid connectivity (VPN and AWS Direct Connect connections) to a single transit gateway, thereby consolidating and controlling your AWS organization's entire routing configuration in one place. A transit gateway solves the complexity involved with creating and managing multiple VPC peering connections at scale. It is the default for most network architectures, but specific needs around cost, bandwidth, and latency might make VPC peering a better fit for your needs.
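The scaling difference between a peering mesh and a hub-and-spoke design can be made concrete with a little arithmetic. Because VPC peering is not transitive, connecting every VPC to every other VPC needs one peering connection per pair, whereas a hub design needs one attachment per VPC. The following Python sketch is only an illustration of that counting argument; the function names are hypothetical and it does not call any AWS API.

```python
def full_mesh_peerings(vpc_count: int) -> int:
    """Peering connections needed so that every pair of VPCs is directly connected."""
    if vpc_count < 0:
        raise ValueError("vpc_count must be non-negative")
    # One connection per unordered pair: n * (n - 1) / 2
    return vpc_count * (vpc_count - 1) // 2


def hub_attachments(vpc_count: int) -> int:
    """Attachments needed when every VPC connects to one central hub."""
    if vpc_count < 0:
        raise ValueError("vpc_count must be non-negative")
    return vpc_count


for n in (5, 10, 50, 100):
    print(f"{n:>3} VPCs: {full_mesh_peerings(n):>4} peering connections "
          f"vs. {hub_attachments(n):>3} hub attachments")
```

With 10 VPCs the mesh already needs 45 connections, and with 100 VPCs it needs 4,950, which is consistent with the guidance to reserve peering for small numbers of VPCs.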

Inbound (ingress) VPC

The inbound VPC is intended to accept, inspect, and route network connections initiated outside the application. Depending on the specifics of the application, you can expect to see some network address translation (NAT) in this VPC. Flow logs from this VPC are captured and stored in the Log Archive account.

Outbound (egress) VPC

The outbound VPC is intended to handle network connections initiated from within the application. Depending on the specifics of the application, you can expect to see traffic NAT, AWS service-specific VPC endpoints, and hosting of external API endpoints in this VPC. Flow logs from this VPC are captured and stored in the Log Archive account.

Inspection VPC

A dedicated inspection VPC provides a simplified and central approach for managing inspections between VPCs (in the same or in different AWS Regions), the internet, and on-premises networks. For the AWS SRA, ensure that all traffic between VPCs passes through the inspection VPC, and avoid using the inspection VPC for any other workload.

AWS Network Firewall

You use a firewall on a per-Availability Zone basis in your VPC. For each Availability Zone, you choose a subnet to host the firewall endpoint that filters your traffic. The firewall endpoint in an Availability Zone can protect all the subnets inside the zone except for the subnet where it's located. Depending on the use case and deployment model, the firewall subnet could be either public or private. The firewall is completely transparent to the traffic flow and does not perform network address translation (NAT). It preserves the source and destination address. In this reference architecture, the firewall endpoints are hosted in an inspection VPC. All traffic from the inbound VPC and to the outbound VPC is routed through this firewall subnet for inspection.

Network Firewall makes firewall activity visible in real time through Amazon CloudWatch metrics, and offers increased visibility of network traffic by sending logs to Amazon Simple Storage Service (Amazon S3), CloudWatch, and Amazon Data Firehose. Network Firewall is interoperable with your existing security approach, including technologies from AWS Partners.

In the AWS SRA, Network Firewall is used within the Network account because the network control-focused functionality of the service aligns with the intent of the account.

Design considerations

- AWS Firewall Manager supports Network Firewall, so you can centrally configure and deploy Network Firewall rules across your organization. (For details, see AWS Network Firewall policies in the AWS documentation.) When you configure Firewall Manager, it automatically creates a firewall with sets of rules in the accounts and VPCs that you specify. It also deploys an endpoint in a dedicated subnet for every Availability Zone that contains public subnets. At the same time, any changes to the centrally configured set of rules are automatically updated downstream on the deployed Network Firewall firewalls.

- There are multiple deployment models available with Network Firewall. The right model depends on your use case and requirements. Examples include the following:

  - A distributed deployment model where Network Firewall is deployed into individual VPCs.

  - A centralized deployment model where Network Firewall is deployed into a centralized VPC for east-west (VPC-to-VPC) or north-south (internet egress and ingress, on-premises) traffic.

  - A combined deployment model where Network Firewall is deployed into a centralized VPC for east-west and a subset of north-south traffic.

- As a best practice, do not use the Network Firewall subnet to deploy any other services. This is because Network Firewall cannot inspect traffic from sources or destinations within the firewall subnet.

Network Access Analyzer

Network Access Analyzer is a feature of Amazon VPC that identifies unintended network access to your resources. You can use Network Access Analyzer to validate network segmentation, identify resources that are accessible from the internet or accessible only from trusted IP address ranges, and validate that you have appropriate network controls on all network paths.

Network Access Analyzer uses automated reasoning algorithms to analyze the network paths that a packet can take between resources in an AWS network, and produces findings for paths that match your defined Network Access Scope. Network Access Analyzer performs a static analysis of a network configuration, meaning that no packets are transmitted in the network as part of this analysis.

The Amazon Inspector Network Reachability rules provide a related feature. The findings generated by these rules are used in the Application account. Both Network Access Analyzer and Network Reachability use the latest technology from the AWS Provable Security initiative.

The Network account defines the critical network infrastructure that controls the traffic in and out of your AWS environment. This traffic needs to be tightly monitored. In the AWS SRA, Network Access Analyzer is used within the Network account to help identify unintended network access, identify internet-accessible resources through internet gateways, and verify that appropriate network controls such as network firewalls and NAT gateways are present on all network paths between resources and internet gateways.
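To make the idea of static path analysis more concrete, the following Python sketch checks a toy topology for paths from an internet gateway to a resource that do not cross a network control. It is a simplified illustration only: the graph, node names, and function are hypothetical, and the breadth-first search shown here is not the automated reasoning that Network Access Analyzer itself uses. Like the service, the sketch reasons over configuration and sends no packets.

```python
from collections import deque


def reachable_without_controls(graph, source, target, controls):
    """Return True if target can be reached from source while avoiding every control node."""
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for neighbor in graph.get(node, ()):
            # Skip nodes already visited and nodes that represent a network control.
            if neighbor in seen or neighbor in controls:
                continue
            seen.add(neighbor)
            queue.append(neighbor)
    return False


# Hypothetical topology: edges point in the direction that traffic can flow.
graph = {
    "internet-gateway": ["firewall-endpoint", "public-subnet"],
    "firewall-endpoint": ["app-subnet"],
    "app-subnet": ["app-server"],
    "public-subnet": ["bastion"],
}
controls = {"firewall-endpoint"}

findings = [
    resource
    for resource in ("app-server", "bastion")
    if reachable_without_controls(graph, "internet-gateway", resource, controls)
]
print(findings)
```

In this toy model, `app-server` is reachable only through the firewall endpoint, so it produces no finding, whereas `bastion` is reachable on a path that bypasses the control and is reported.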

Design consideration

- Network Access Analyzer is a feature of Amazon VPC, and it can be used in any AWS account that has a VPC. Network administrators can get tightly scoped, cross-account IAM roles to validate that approved network paths are enforced within each AWS account.

AWS RAM

AWS Resource Access Manager (AWS RAM) enables you to share resources that do not support IAM resource-based policies, such as VPC subnets and Amazon Route 53 Resolver rules. Furthermore, with AWS RAM, the owners of a resource can see which principals have access to individual resources that they have shared. IAM entities can retrieve the list of resources shared with them directly, which they can't do with resources shared by IAM resource policies. If AWS RAM is used to share resources outside your AWS organization, an invitation process is initiated. The recipient must accept the invitation before access to the resources is granted. This provides additional checks and balances.

AWS RAM is invoked and managed by the resource owner, in the account where the shared resource is deployed. One common use case for AWS RAM illustrated in the AWS SRA is for network administrators to share VPC subnets and transit gateways with the entire AWS organization. This provides the ability to decouple AWS account and network management functions and helps achieve separation of duties. For more information about VPC sharing, see the AWS blog post VPC sharing: A new approach to multiple accounts and VPC management.

Design consideration

- Although AWS RAM as a service is deployed only within the Network account in the AWS SRA, it would typically be deployed in more than one account. For example, you can centralize your data lake management to a single data lake account, and then share the AWS Lake Formation data catalog resources (databases and tables) with other accounts in your AWS organization. For more information, see the AWS Lake Formation documentation and the AWS blog post Securely share your data across AWS accounts using AWS Lake Formation. Additionally, security administrators can use AWS RAM to follow best practices when they build an AWS Private CA hierarchy. CAs can be shared with external third parties, who can issue certificates without having access to the CA hierarchy. This allows originating organizations to limit and revoke third-party access.

AWS Verified Access

Verified Access supports two common corporate application patterns: internal and internet-facing. Verified Access integrates with applications by using Application Load Balancers or elastic network interfaces. If you’re using an Application Load Balancer, Verified Access requires an internal load balancer. Because Verified Access supports AWS WAF at the instance level, an existing application that has AWS WAF integration with an Application Load Balancer can move policies from the load balancer to the Verified Access instance. A corporate application is represented as a Verified Access endpoint. Each endpoint is associated with a Verified Access group and inherits the access policy for the group. A Verified Access group is a collection of Verified Access endpoints and a group-level Verified Access policy. Groups simplify policy management and enable IT administrators to set up baseline criteria. Application owners can further define granular policies depending on the sensitivity of the application.

In the AWS SRA, Verified Access is hosted within the Network account. The central IT team sets up centrally managed configurations. For example, they might connect trust providers such as identity providers (for example, Okta) and device trust providers (for example, Jamf), create groups, and determine the group-level policy. These configurations can then be shared with tens, hundreds, or thousands of workload accounts by using AWS Resource Access Manager (AWS RAM). This enables application teams to manage the underlying endpoints that manage their applications without overhead from other teams. AWS RAM provides a scalable way to leverage Verified Access for corporate applications that are hosted in different workload accounts.

Design consideration

- You can group endpoints for applications that have similar security requirements to simplify policy administration, and then share the group with application accounts. All applications in the group share the group policy. If an application in the group requires a specific policy because of an edge case, you can apply application-level policy for that application.

Amazon VPC Lattice

VPC Lattice integrates with AWS Resource Access Manager (AWS RAM) to enable sharing of services and service networks. AWS SRA depicts a distributed architecture where developers or service owners create VPC Lattice services in their Application account. Service owners define the listeners, routing rules, and target groups along with auth policies. They then share the services with other accounts, and associate the services with VPC Lattice service networks. These networks are created by network administrators in the Network account and shared with the Application account. Network administrators configure service network-level auth policies and monitoring. Administrators associate VPCs and VPC Lattice services with one or more service networks. For a detailed walkthrough of this distributed architecture, see the AWS blog post Build secure multi-account multi-VPC connectivity for your applications with Amazon VPC Lattice.

Design consideration

- Depending on your organization’s operating model of service or service network visibility, network administrators can share their service networks and can give service owners the control to associate their services and VPCs with these service networks. Or, service owners can share their services, and network administrators can associate the services with service networks.

  A client can send requests to services that are associated with a service network only if the client is in a VPC that's associated with the same service network. Client traffic that traverses a VPC peering connection or a transit gateway is denied.
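The association rule described above can be expressed as a small data model. The following Python sketch is a simplified illustration under stated assumptions: the `ServiceNetwork` class, the `can_send_requests` function, and all names are hypothetical, and the model covers only VPC and service associations. It does not model auth policies, which can further restrict access.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceNetwork:
    """Toy model of a service network and its associations."""
    name: str
    vpcs: set = field(default_factory=set)      # associated VPC IDs
    services: set = field(default_factory=set)  # associated service names


def can_send_requests(client_vpc: str, service: str, networks: list) -> bool:
    """A client can reach a service only through a service network that both its VPC and the service are associated with."""
    return any(
        client_vpc in network.vpcs and service in network.services
        for network in networks
    )


# Hypothetical associations made by network administrators and service owners.
networks = [
    ServiceNetwork("payments-net", vpcs={"vpc-a", "vpc-b"}, services={"billing"}),
    ServiceNetwork("analytics-net", vpcs={"vpc-c"}, services={"reports"}),
]

print(can_send_requests("vpc-a", "billing", networks))  # same service network
print(can_send_requests("vpc-c", "billing", networks))  # different service network
```

In this model, a client in `vpc-c` cannot reach `billing` even if `vpc-c` were peered with `vpc-a`, because only the client's own VPC association is considered.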

Edge security

Edge security generally entails three types of protections: secure content delivery, network and application-layer protection, and distributed denial of service (DDoS) mitigation. Content such as data, videos, applications, and APIs have to be delivered quickly and securely, using the recommended version of TLS to encrypt communications between endpoints. The content should also have access restrictions through signed URLs, signed cookies, and token authentication. Application-level security should be designed to control bot traffic, block common attack patterns such as SQL injection or cross-site scripting (XSS), and provide web traffic visibility. At the edge, DDoS mitigation provides an important defense layer that ensures continued availability of mission-critical business operations and services. Applications and APIs should be protected from SYN floods, UDP floods, or other reflection attacks, and have inline mitigation to stop basic network-layer attacks.

AWS offers several services to help provide a secure environment, from the core cloud to the edge of the AWS network. Amazon CloudFront, AWS Certificate Manager (ACM), AWS Shield, AWS WAF, and Amazon Route 53 work together to help create a flexible, layered security perimeter. With Amazon CloudFront, content, APIs, or applications can be delivered over HTTPS by using TLSv1.3 to encrypt and secure communication between viewer clients and CloudFront. You can use ACM to create a custom SSL certificate.

Amazon CloudFront

CloudFront provides multiple options to secure and restrict access to your content. For example, it can restrict access to your Amazon S3 origin by using signed URLs and signed cookies. For more information, see Configuring secure access and restricting access to content in the CloudFront documentation.
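As a rough illustration of what goes into a signed URL, the following Python sketch builds a custom policy statement and applies the URL-safe character substitution that CloudFront expects for base64-encoded values. The function names and the example URL are hypothetical. The sketch deliberately stops before the signing step, which requires the private key of your signer and a cryptography library, so treat it as an outline of the policy format and not a complete implementation.

```python
import base64
import json
from typing import Optional


def build_custom_policy(resource_url: str, expires_epoch: int,
                        source_cidr: Optional[str] = None) -> str:
    """Build a custom policy that limits access by expiry time and, optionally, by source IP range."""
    condition = {"DateLessThan": {"AWS:EpochTime": expires_epoch}}
    if source_cidr is not None:
        condition["IpAddress"] = {"AWS:SourceIp": source_cidr}
    policy = {"Statement": [{"Resource": resource_url, "Condition": condition}]}
    # Compact separators remove the whitespace from the policy document.
    return json.dumps(policy, separators=(",", ":"))


def url_safe_base64(data: bytes) -> str:
    """Base64-encode data and replace the characters that are not valid in a URL query string."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.replace("+", "-").replace("=", "_").replace("/", "~")


# Hypothetical resource and expiry time (Unix epoch seconds, UTC).
policy = build_custom_policy("https://example.com/private/*", 1767225600, "203.0.113.0/24")
print(policy)
print(url_safe_base64(policy.encode("utf-8")))
```

The encoded policy, a signature over the policy, and the key identifier are then appended to the URL as query parameters. See the CloudFront documentation for the exact signing procedure.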

The AWS SRA illustrates centralized CloudFront distributions in the Network account because they align with the centralized network pattern that’s implemented by using Transit Gateway. By deploying and managing CloudFront distributions in the Network account, you gain the benefits of centralized controls. You can manage all CloudFront distributions in a single place, which makes it easier to control access, configure settings, and monitor usage across all accounts. Additionally, you can manage the ACM certificates, DNS records, and CloudFront logging from one centralized account. The CloudFront security dashboard provides AWS WAF visibility and controls directly in your CloudFront distribution. You get visibility into your application’s top security trends, allowed and blocked traffic, and bot activity. You can use investigative tools such as visual log analyzers and built-in blocking controls to isolate traffic patterns and block traffic without querying logs or writing security rules.

Design considerations

- Alternatively, you can deploy CloudFront as part of the application in the Application account. In this scenario, the application team makes decisions such as how the CloudFront distributions are deployed, determines the appropriate cache policies, and takes responsibility for governance, auditing, and monitoring of the CloudFront distributions. By spreading CloudFront distributions across multiple accounts, you can benefit from additional service quotas. As another benefit, you can use CloudFront’s inherent and automated origin access identity (OAI) and origin access control (OAC) configuration to restrict access to Amazon S3 origins.

- When you deliver web content through a CDN such as CloudFront, you have to prevent viewers from bypassing the CDN and accessing your origin content directly. To achieve this origin access restriction, you can use CloudFront and AWS WAF to add custom headers and verify the headers before you forward requests to your custom origin. For a detailed explanation of this solution, see the AWS security blog post How to enhance Amazon CloudFront origin security with AWS WAF and AWS Secrets Manager. An alternative method is to allow only the CloudFront managed prefix list in the security group that’s associated with the Application Load Balancer. This helps ensure that only traffic that comes from CloudFront can reach the load balancer.
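The custom header check described above boils down to comparing a value that CloudFront adds to each origin request against a secret that only CloudFront and the origin side know. The following Python sketch shows that comparison in isolation. It is an assumption-laden illustration: the header name `x-origin-verify`, the function name, and the idea of accepting both the current and the previous secret during rotation are choices made for this example. In the referenced solution the check is enforced by AWS WAF, not by application code.

```python
import hmac

# Hypothetical header name; use whatever custom header your distribution adds.
ORIGIN_VERIFY_HEADER = "x-origin-verify"


def request_came_through_cdn(headers: dict, accepted_secrets: list) -> bool:
    """Return True if the request carries a header value that matches an accepted secret."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    presented = normalized.get(ORIGIN_VERIFY_HEADER)
    if presented is None:
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return any(
        hmac.compare_digest(presented.encode("utf-8"), secret.encode("utf-8"))
        for secret in accepted_secrets
    )


# Accepting the previous secret as well lets requests succeed while a rotation is in progress.
secrets_in_use = ["current-secret-value", "previous-secret-value"]

print(request_came_through_cdn({"X-Origin-Verify": "current-secret-value"}, secrets_in_use))
print(request_came_through_cdn({"Host": "origin.example.com"}, secrets_in_use))
```

A request that reaches the origin directly lacks the header, or carries a wrong value, and is rejected.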

AWS WAF

AWS WAF uses web access control lists (ACLs) to protect a set of AWS resources. A web ACL is a set of rules that defines the inspection criteria, and an associated action to take (block, allow, count, or run bot control) if a web request meets the criteria. AWS WAF provides a set of managed rules.
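The way a web ACL pairs inspection criteria with actions can be sketched as a small rule evaluator. The following Python example is a simplified model, not the AWS WAF implementation: the `Rule` class, the naive string-matching criteria, and the request shape are all hypothetical. It assumes that rules are evaluated in priority order (lowest number first), that a matching allow or block rule ends evaluation, that a matching count rule is recorded and evaluation continues, and that a default action applies when no terminating rule matches.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    priority: int
    matches: Callable[[dict], bool]  # inspection criteria
    action: str                      # "allow", "block", or "count"


def evaluate(rules: list, request: dict, default_action: str = "allow"):
    """Return the final action and the names of count rules that matched along the way."""
    counted = []
    for rule in sorted(rules, key=lambda r: r.priority):
        if not rule.matches(request):
            continue
        if rule.action == "count":
            counted.append(rule.name)  # record the match and keep evaluating
            continue
        return rule.action, counted    # allow and block end the evaluation
    return default_action, counted


# Hypothetical rules with deliberately naive matching logic.
rules = [
    Rule("missing-user-agent", 0,
         lambda req: "user-agent" not in req.get("headers", {}), "count"),
    Rule("naive-sqli", 1,
         lambda req: " or 1=1" in req.get("query", "").lower(), "block"),
]

suspicious = {"query": "id=1' OR 1=1", "headers": {}}
benign = {"query": "id=1", "headers": {"user-agent": "example-browser"}}

print(evaluate(rules, suspicious))
print(evaluate(rules, benign))
```

Running rules in count mode first, as in the first rule here, is one way to observe how often a rule would match before you switch it to block.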

AWS WAF provides a set of intelligent tier-managed rules for common and targeted bots and account takeover protection (ATP). You are charged a subscription fee and a traffic inspection fee when you use the bot control and ATP rule groups. Therefore, we recommend that you monitor your traffic first and then decide what to use. You can use the bot management and account takeover dashboards that are available for free on the AWS WAF console to monitor these activities and then decide whether you need an intelligent tier AWS WAF rule group.

In the AWS SRA, AWS WAF is integrated with CloudFront in the Network account. In this configuration, WAF rule processing happens at the edge locations instead of within the VPC. This enables filtering of malicious traffic closer to the end user who requested the content, and helps restrict malicious traffic from entering your core network.

You can send full AWS WAF logs to an S3 bucket in the Log Archive account by configuring cross-account access to the S3 bucket. For more information, see the AWS re:Post article.

Design considerations

- As an alternative to deploying AWS WAF centrally in the Network account, some use cases are better met by deploying AWS WAF in the Application account. For example, you might choose this option when you deploy your CloudFront distributions in your Application account or have public-facing Application Load Balancers, or if you’re using Amazon API Gateway in front of your web applications. If you decide to deploy AWS WAF in each Application account, use AWS Firewall Manager to manage the AWS WAF rules in these accounts from the centralized Security Tooling account.

- You can also add general AWS WAF rules at the CloudFront layer and additional application-specific AWS WAF rules at a Regional resource such as the Application Load Balancer or the API gateway.

AWS Shield

You can use the Shield Advanced automatic application layer DDoS mitigation feature to configure Shield Advanced to respond automatically to mitigate application layer (layer 7) attacks against your protected CloudFront distributions and Application Load Balancers. When you enable this feature, Shield Advanced automatically generates custom AWS WAF rules to mitigate DDoS attacks. Shield Advanced also gives you access to the AWS Shield Response Team (SRT). You can contact SRT at any time to create and manage custom mitigations for your application or during an active DDoS attack. If you want SRT to proactively monitor your protected resources and contact you during a DDoS attempt, consider enabling the proactive engagement feature.

Design considerations

- If you have any workloads that are fronted by internet-facing resources in the Application account, such as Amazon CloudFront, an Application Load Balancer, or a Network Load Balancer, configure Shield Advanced in the Application account and add those resources to Shield protection. You can use AWS Firewall Manager to configure these options at scale.

- If you have multiple resources in the data flow, such as a CloudFront distribution in front of an Application Load Balancer, use only the entry-point resource as the protected resource. This ensures that you do not pay Shield Data Transfer Out (DTO) fees twice for two resources.

- Shield Advanced records metrics that you can monitor in Amazon CloudWatch. (For more information, see AWS Shield Advanced metrics and alarms in the AWS documentation.) Set up CloudWatch alarms to receive SNS notifications to your security center when a DDoS event is detected. In a suspected DDoS event, contact the AWS Enterprise Support team by filing a support ticket and assigning it the highest priority. The Enterprise Support team will include the Shield Response Team (SRT) when handling the event. In addition, you can preconfigure the AWS Shield engagement Lambda function to create a support ticket and send an email to the SRT team.
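The CloudWatch alarm recommended above might be defined along the following lines. This Python sketch only builds the parameter dictionary for an alarm on the Shield Advanced `DDoSDetected` metric in the `AWS/DDoSProtection` namespace. The function name, alarm name, ARNs, and the choices of period, statistic, and threshold are assumptions for illustration, so verify them against the Shield Advanced metrics documentation before use.

```python
def ddos_alarm_params(alarm_name: str, resource_arn: str, sns_topic_arn: str) -> dict:
    """Build alarm parameters that notify an SNS topic when Shield Advanced reports a DDoS event."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/DDoSProtection",
        "MetricName": "DDoSDetected",
        # The metric is reported per protected resource.
        "Dimensions": [{"Name": "ResourceArn", "Value": resource_arn}],
        "Statistic": "Sum",
        "Period": 60,                # seconds; an assumed evaluation window
        "EvaluationPeriods": 1,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # Assumption: treat periods without data as healthy.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


# Hypothetical ARNs for a protected load balancer and a security notification topic.
params = ddos_alarm_params(
    "ddos-detected-example-alb",
    "arn:aws:elasticloadbalancing:us-east-1:111122223333:loadbalancer/app/example-alb/0123456789abcdef",
    "arn:aws:sns:us-east-1:111122223333:security-notifications",
)
print(params["MetricName"], params["Dimensions"])
```

If you use boto3, a dictionary of this shape could be passed to the CloudWatch client as `put_metric_alarm(**params)`.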

AWS Certificate Manager

AWS Certificate Manager (ACM) is used in the Network account to generate a public TLS certificate, which, in turn, is used by CloudFront distributions to establish the HTTPS connection between viewers and CloudFront. For more information, see the CloudFront documentation.

Design consideration

- For externally facing certificates, ACM must reside in the same account as the resources for which it provisions certificates. Certificates cannot be shared across accounts.

Amazon Route 53

You can use Route 53 as a DNS service to map domain names to your EC2 instances, S3 buckets, CloudFront distributions, and other AWS resources. The distributed nature of the AWS DNS servers helps ensure that your end users are routed to your application consistently. Features such as Route 53 traffic flow and routing control help you improve reliability. If your primary application endpoint becomes unavailable, you can configure your failover to reroute your users to an alternate location. Route 53 Resolver provides recursive DNS for your VPC and on-premises networks over AWS Direct Connect or AWS managed VPN.

By using the AWS Identity and Access Management (IAM) service with Route 53, you get fine-grained control over who can update your DNS data. You can enable DNS Security Extensions (DNSSEC) signing to let DNS resolvers validate that a DNS response came from Route 53 and has not been tampered with.

Route 53 Resolver DNS Firewall provides protection for outbound DNS requests from your VPCs. These requests go through Route 53 Resolver for domain name resolution. A primary use of DNS Firewall protections is to help prevent DNS exfiltration of your data. With DNS Firewall, you can monitor and control the domains that your applications can query. You can deny access to the domains that you know are bad, and allow all other queries to pass through. Alternately, you can deny access to all domains except for the ones that you explicitly trust. You can also use DNS Firewall to block resolution requests to resources in private hosted zones (shared or local), including VPC endpoint names. It can also block requests for public or private EC2 instance names.
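The two filtering approaches described above, denying known-bad domains versus allowing only trusted domains, can be compared with a small model. The following Python sketch is a simplified illustration and not the DNS Firewall implementation: the function names, the mode labels, the domain lists, and the wildcard handling (a leading `*.` matches subdomains only) are assumptions made for this example.

```python
def domain_matches(query_name: str, pattern: str) -> bool:
    """Match a queried name against an exact domain or a leading-wildcard pattern."""
    query_name = query_name.lower().rstrip(".")
    pattern = pattern.lower().rstrip(".")
    if pattern.startswith("*."):
        # "*.example.com" matches "a.example.com" but not "example.com" itself.
        return query_name.endswith(pattern[1:])
    return query_name == pattern


def decide(query_name: str, domain_list: list, mode: str) -> str:
    """Return ALLOW or BLOCK for a query under a deny-list or an allow-list strategy."""
    listed = any(domain_matches(query_name, pattern) for pattern in domain_list)
    if mode == "deny-list":    # block known-bad domains, allow everything else
        return "BLOCK" if listed else "ALLOW"
    if mode == "allow-list":   # allow trusted domains, block everything else
        return "ALLOW" if listed else "BLOCK"
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical domain lists.
known_bad = ["malware.example", "*.exfil.example"]
trusted = ["*.amazonaws.com", "internal.example"]

print(decide("data.exfil.example", known_bad, "deny-list"))
print(decide("unknown.example", known_bad, "deny-list"))
print(decide("unknown.example", trusted, "allow-list"))
```

The last two lines show the practical difference: an unknown domain passes under the deny-list strategy but is blocked under the allow-list strategy, which is why the allow-list approach gives stronger protection against DNS exfiltration at the cost of more list maintenance.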

Route 53 resolvers are created by default as part of every VPC. In the AWS SRA, Route 53 is used in the Network account primarily for the DNS firewall capability.

Design consideration

- DNS Firewall and AWS Network Firewall both offer domain name filtering, but for different types of traffic. You can use DNS Firewall and Network Firewall together to configure domain-based filtering for application-layer traffic over two different network paths.

  - DNS Firewall provides filtering for outbound DNS queries that pass through the Route 53 Resolver from applications within your VPCs. You can also configure DNS Firewall to send custom responses for queries to blocked domain names.

  - Network Firewall provides filtering for both network-layer and application-layer traffic, but does not have visibility into queries made by Route 53 Resolver.